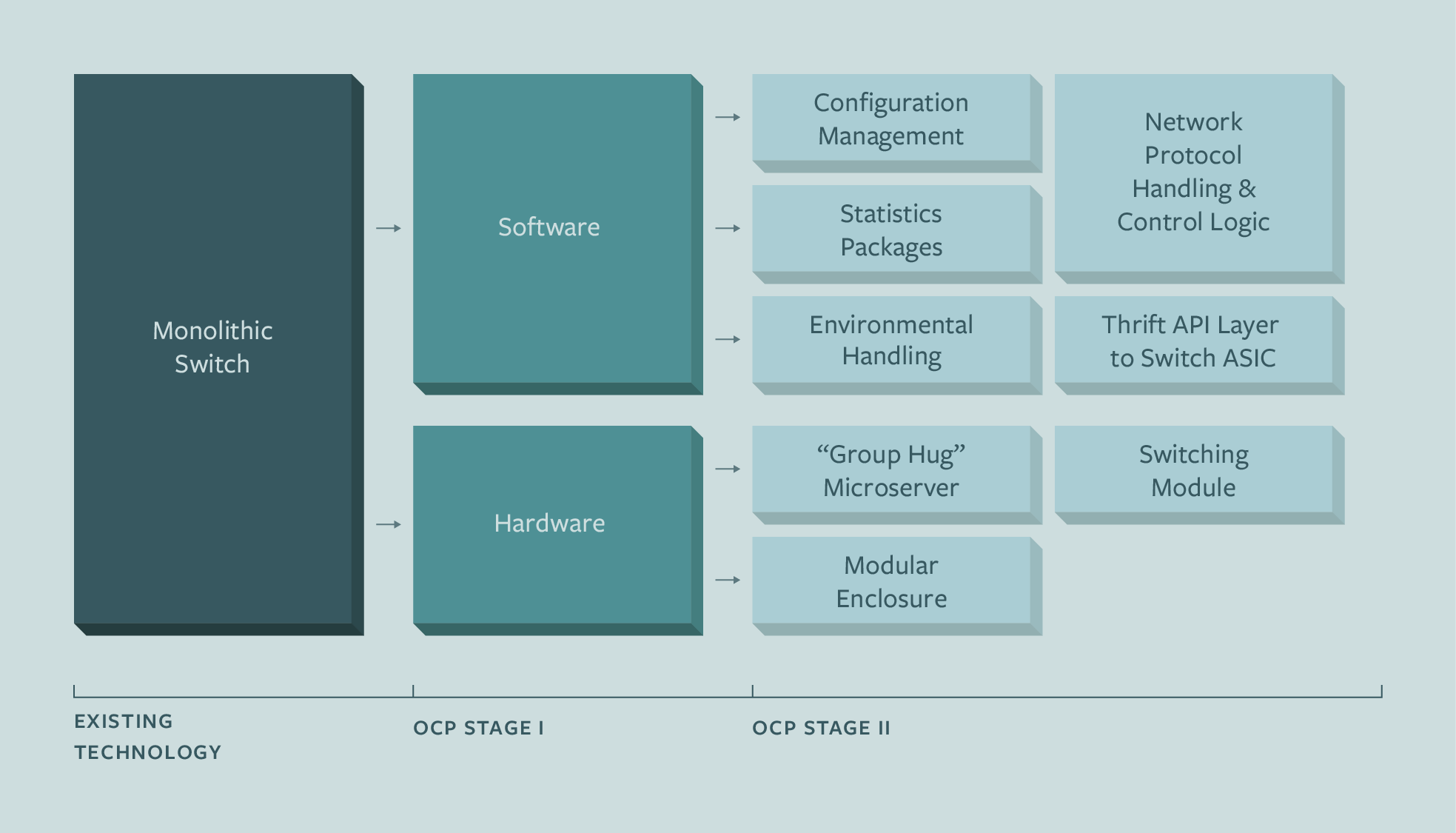

We’re big believers in the value of disaggregation – of breaking down traditional data center technologies into their core components so we can build new systems that are more flexible, more scalable, and more efficient. This approach has guided Facebook from the beginning, as we’ve grown and expanded our infrastructure to connect more than 1.28 billion people around the world.

Over the last three years, we’ve been working within the Open Compute Project (OCP) to apply this principle to open designs for racks, servers, storage boxes, and motherboards. And last year, OCP kicked off a new networking project with a goal of developing designs for OS-agnostic top-of-rack (TOR) switches. This was the first step toward disaggregating the network – separating hardware from software, so we can spur the development of more choices for each – and our progress so far has exceeded our expectations: Broadcom, Intel, Mellanox, and Accton have already contributed designs for open switches; Cumulus Networks and Big Switch Networks have made software contributions; and the development work and discussions in the project group have been highly productive.

Today we’re pleased to unveil the next step: a new top-of-rack network switch, code-named “Wedge,” and a new Linux-based operating system for that switch, code-named “FBOSS.” These projects break down the hardware and software components of the network stack even further, to provide a new level of visibility, automation, and control in the operation of the network. By combining hardware and software modules in new ways, “Wedge” and “FBOSS” depart from current networking design paradigms and draw on our experience operating hundreds of thousands of servers in our data centers. In other words, our goal with these projects was to make our network look, feel, and operate more like the OCP servers we’ve already deployed, both in terms of hardware and software.

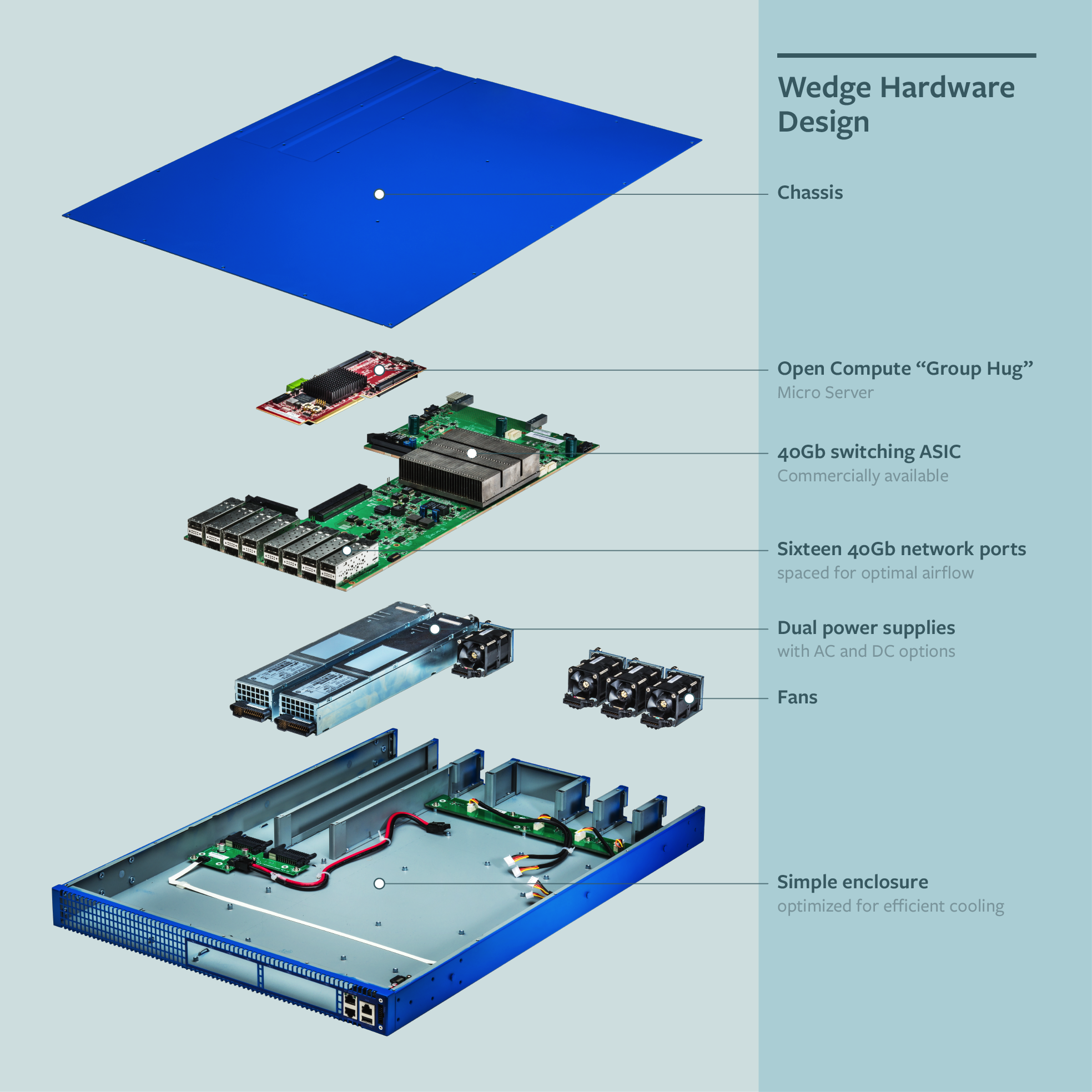

The hardware: more powerful and more modular

One of the big changes we made in designing “Wedge” was to give the switch the same power and flexibility as a server. Traditional network switches often use fixed hardware configurations and non-standard control interfaces, limiting the capabilities of the device and complicating deployments. We chose to leverage our existing “Group Hug” architecture for modular microservers, which enables us to use a wide range of microservers from across the open hardware ecosystem. For our own deployment, we’ve started with a microserver that we’re using elsewhere in our infrastructure. But the open form factor will allow us to use a range of processors, including Intel and AMD chips as well as ARM-based designs.

Why does this matter? By using a real server module in the switch, we’re able to bring switches into our distributed fleet management systems and provision them with our standard Linux-based operating environment. This enables us to deploy, monitor, and control these systems alongside our servers and storage, which in turn allows our engineers to focus more on bringing new capabilities to our network and less on managing the existing systems.

The diagram below highlights the modular approach we’ve taken to the hardware design. Unlike with traditional closed-hardware switches, with “Wedge” anyone can modify or replace any of the components in our design to better meet their needs. For example, you could use an ARM-based microserver rather than the Intel-based microserver we’ve selected. Or you could take the electronics and repackage them in a new enclosure, perhaps to solve a different set of problems outside the rack. We’re excited to see where the community will take this design in the future.

The software: greater accessibility and better control

On the software side, we wanted “FBOSS” to help us move more quickly. It was designed to allow us to leverage the software libraries and systems we currently use for managing our server fleet, including initial turn-up and decommissioning, upgrades and downgrades, and draining and undraining. By controlling the programming of the switch hardware, we can implement our own forwarding software much faster. We also added a Thrift-based abstraction layer on top of the switch ASIC APIs, which will enable our engineers to treat “Wedge” like any other service at Facebook. With “FBOSS,” all our infrastructure software engineers instantly become network engineers.
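To make the idea of a service-style abstraction over the ASIC more concrete, here is a minimal Python sketch. It is not FBOSS code: `FakeAsicSdk`, `SwitchAgent`, `AsicSwitchAgent`, and all method names are hypothetical, the vendor SDK is simulated with a dictionary, and a plain abstract class stands in for the stubs a Thrift IDL compiler would generate. The point is only that callers program routes through a small, typed service interface instead of vendor-specific SDK calls.

```python
from abc import ABC, abstractmethod
from ipaddress import ip_address, ip_network
from typing import Dict, Optional


class FakeAsicSdk:
    """Hypothetical stand-in for a vendor switch SDK with C-style return codes."""

    def __init__(self) -> None:
        self.hw_routes: Dict[str, str] = {}  # prefix -> next hop, as "programmed" in hardware

    def l3_route_add(self, prefix: str, nexthop: str) -> int:
        self.hw_routes[prefix] = nexthop
        return 0  # 0 means success

    def l3_route_delete(self, prefix: str) -> int:
        return 0 if self.hw_routes.pop(prefix, None) is not None else -1


class SwitchAgent(ABC):
    """Hypothetical service interface; with Thrift this would be generated from an IDL."""

    @abstractmethod
    def add_route(self, prefix: str, nexthop: str) -> None: ...

    @abstractmethod
    def delete_route(self, prefix: str) -> None: ...

    @abstractmethod
    def lookup(self, address: str) -> Optional[str]: ...


class AsicSwitchAgent(SwitchAgent):
    """Implements the service interface by translating calls into SDK calls."""

    def __init__(self, sdk: FakeAsicSdk) -> None:
        self._sdk = sdk

    def add_route(self, prefix: str, nexthop: str) -> None:
        net = ip_network(prefix)  # raises ValueError on a malformed prefix
        ip_address(nexthop)  # validate the next hop the same way
        if self._sdk.l3_route_add(str(net), nexthop) != 0:
            raise RuntimeError(f"ASIC rejected route {net}")

    def delete_route(self, prefix: str) -> None:
        if self._sdk.l3_route_delete(str(ip_network(prefix))) != 0:
            raise KeyError(prefix)

    def lookup(self, address: str) -> Optional[str]:
        # Longest-prefix match over programmed routes (a real ASIC does this in hardware).
        addr = ip_address(address)
        best_len, best_nh = -1, None
        for prefix, nexthop in self._sdk.hw_routes.items():
            net = ip_network(prefix)
            if addr.version == net.version and addr in net and net.prefixlen > best_len:
                best_len, best_nh = net.prefixlen, nexthop
        return best_nh


# Usage: callers see only the service interface, never the SDK.
agent: SwitchAgent = AsicSwitchAgent(FakeAsicSdk())
agent.add_route("10.0.0.0/8", "192.168.1.1")
agent.add_route("10.1.0.0/16", "192.168.1.2")
```

Swapping in a different ASIC would mean writing another `SwitchAgent` implementation; code that talks to the interface would not change.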

The service layer in “FBOSS” allows us to implement a hybrid of distributed and centralized control. We ultimately want the flexibility to optimize where the control logic resides, which in turn will allow us to get higher utilization on our links, troubleshoot more easily, recover from failure faster, and respond more quickly to sudden changes in global traffic. For example, using a central controller, we can now find the optimal network path for data at the edges of the network. By doing so, we’ve managed to boost the utilization of edge network resources to more than 90 percent, while serving Facebook traffic without a backlog of packets.
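The controller’s actual algorithm is not described here, so the following is only an illustration of why a central view helps: with the capacity and load of every edge link visible in one place, a controller can pack traffic onto links up to high utilization without overloading any of them. The sketch uses a simple greedy heuristic (largest demand first, placed on the link with the most remaining headroom) as a stand-in, not as Facebook’s method; the `Link` type, link names, and traffic figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Link:
    name: str
    capacity_gbps: float
    load_gbps: float = 0.0

    @property
    def headroom(self) -> float:
        return self.capacity_gbps - self.load_gbps

    @property
    def utilization(self) -> float:
        return self.load_gbps / self.capacity_gbps


def place_demands(links: List[Link], demands: List[Tuple[str, float]]) -> Dict[str, str]:
    """Greedy placement: biggest demands first, each onto the link with most headroom.

    A demand is only placed on a link that can carry it without exceeding
    capacity, modeling "no backlog of packets"; otherwise an error is raised.
    """
    placement: Dict[str, str] = {}
    for flow, gbps in sorted(demands, key=lambda d: d[1], reverse=True):
        candidates = [link for link in links if link.headroom >= gbps]
        if not candidates:
            raise RuntimeError(f"no link can carry {flow} ({gbps} Gbps) without overload")
        best = max(candidates, key=lambda link: link.headroom)  # first link wins ties
        best.load_gbps += gbps
        placement[flow] = best.name
    return placement


# Usage: two hypothetical 100 Gbps edge links and four traffic demands.
links = [Link("A", 100.0), Link("B", 100.0)]
placement = place_demands(links, [("f1", 60.0), ("f2", 50.0), ("f3", 40.0), ("f4", 30.0)])
```

In this toy case both links end up at 90 percent utilization with neither overloaded. A greedy heuristic can miss placements that exist, so a production controller would likely use a stronger formulation, but it needs the same inputs: per-link capacity and current load.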

With “FBOSS,” we can also leverage existing Facebook tools for environmental monitoring that give us insight into the systems’ performance, like cooling fan behavior, internal temperatures, and voltage levels. These details allow us to accurately measure power usage, efficiency, and performance, and to plan predictive maintenance.
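As a rough illustration of how such telemetry can feed power accounting and maintenance, the sketch below derives board power from per-rail voltage and current (P = V × I) and flags slow fans and hot sensors. The `SensorSnapshot` layout, sensor names, and thresholds are hypothetical placeholders, not the actual sensors or limits of “Wedge.”

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical limits for illustration; real limits come from the hardware's specifications.
MIN_FAN_RPM = 3000
MAX_TEMP_C = 85.0


@dataclass
class SensorSnapshot:
    fan_rpm: Dict[str, int]  # fan name -> measured speed (RPM)
    temps_c: Dict[str, float]  # sensor name -> temperature (degrees C)
    rails: Dict[str, Tuple[float, float]]  # power rail -> (volts, amps)


def total_power_watts(snap: SensorSnapshot) -> float:
    """Sum of V * I over all monitored power rails."""
    return sum(volts * amps for volts, amps in snap.rails.values())


def maintenance_alerts(snap: SensorSnapshot) -> List[str]:
    """Flag readings outside the (hypothetical) safe ranges: fans first, then temperatures."""
    alerts = []
    for fan, rpm in sorted(snap.fan_rpm.items()):
        if rpm < MIN_FAN_RPM:
            alerts.append(f"fan {fan} slow: {rpm} RPM")
    for sensor, temp in sorted(snap.temps_c.items()):
        if temp > MAX_TEMP_C:
            alerts.append(f"{sensor} hot: {temp:.1f} C")
    return alerts


# Usage with made-up readings.
snap = SensorSnapshot(
    fan_rpm={"fan1": 8000, "fan2": 2500},
    temps_c={"asic": 90.5, "inlet": 30.0},
    rails={"12V": (12.0, 10.0), "3V3": (3.3, 2.0)},
)
```

Predictive maintenance would typically look at trends across many snapshots (for example, a fan whose speed keeps drifting down) rather than a single reading; the per-snapshot check is just the simplest building block.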

What’s next?

“Wedge” and “FBOSS” are currently being tested in our network. We plan to propose the designs for “Wedge” and the central pieces of “FBOSS” as contributions to OCP, so others can start consuming the designs and building on them.

We will also continue to work with the OCP community on the existing networking project, and we welcome other network operators; hardware and software vendors; open source advocates; and industry and research teams to join us in accelerating innovation in the networking hardware space.

Thanks to the entire Facebook team who have contributed to the development of “Wedge” and “FBOSS.”