With people watching more than 100 million hours of video every day on Facebook, 95-plus million photos and videos being posted to Instagram every day, and 400 million people now using voice and video chat on Messenger every month, we continue to innovate on our server hardware fleet to scale and improve the performance of our apps and services.

Today, at the OCP Summit 2017, we are announcing an end-to-end refresh of our server hardware fleet. The design specifications are available through the Open Compute Project site, and a comprehensive set of hardware design files for all systems will be released shortly.

Bryce Canyon

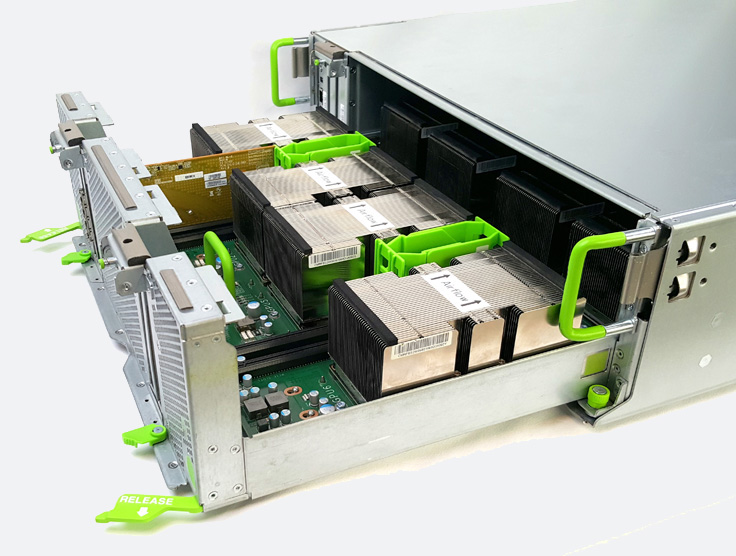

Bryce Canyon is our first major storage chassis designed from the ground up since Open Vault (Knox) was released in 2013. This new storage platform will be used primarily for high-density storage, including for photos and videos, and will provide increased efficiency and performance.

This new storage chassis supports 72 HDDs in 4 OU (Open Rack units), an HDD density 20 percent higher than that of Open Vault. Its modular design allows multiple configurations, from JBOD to a powerful storage server. The platform supports more powerful processors and a memory footprint up to 4x larger than its predecessor. Bryce Canyon also improves thermal and power efficiency by using larger, more efficient 92 mm fans that simultaneously cool the front three rows of HDDs and pull air beneath the chassis, providing cool air to the rear three rows of HDDs. It also is compatible with the Open Rack v2 standard.

By leveraging Mono Lake, the Open Compute single-socket (1S) server card, as the compute module, a Bryce Canyon storage server provides a 4x increase in compute capability over the Honey Badger storage server based on Open Vault. Bryce Canyon also leverages OpenBMC to manage thermals and power, employing the common management framework for most new hardware in Facebook data centers.

You can read more about Bryce Canyon here, and find the preliminary specification on the Open Compute Project site.

Big Basin

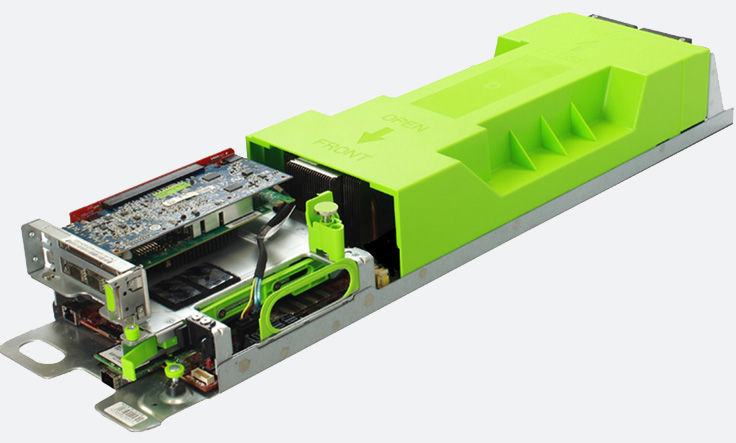

Big Basin is the successor to our Big Sur GPU server, our first widely deployed, high-performance compute platform that helps us train larger and deeper neural networks. We learned a lot from the deployment of Big Sur into our infrastructure, and incorporated improvements in serviceability, reliability, performance, and cluster management into Big Basin.

Big Basin can train models that are 30 percent larger because of the availability of greater arithmetic throughput and a memory size increase from 12 GB to 16 GB. In tests with popular image classification models like ResNet-50, we were able to reach almost 100 percent improvement in throughput compared with Big Sur, allowing us to experiment faster and work with more complex models than before.

Big Basin is designed as a JBOG (just a bunch of GPUs) to allow for the complete disaggregation of the CPU compute from the GPUs. It does not have compute and networking built in, so it requires an external server head node, similar to our Open Vault JBOD and Lightning JBOF. By designing it this way, we can connect our Open Compute servers as a separate building block from the Big Basin unit and scale each block independently as new CPUs and GPUs are released. This modular design enabled us to leverage a modified version of the retimer card being used with Lightning. The server also supports eight high-performance GPUs (specifically, eight NVIDIA Tesla P100 GPU accelerators), it’s compatible with Open Rack v2, and it occupies 3 OU of space.

You can read more about Big Basin here and find its preliminary specification on the Open Compute Project site.

Tioga Pass

Tioga Pass is the successor to Leopard, which is used for a variety of compute services at Facebook. Tioga Pass has a dual-socket motherboard, which uses the same 6.5” by 20” form factor and supports single-sided and double-sided designs. The double-sided design, with DIMMs on both PCB sides, allows us to maximize the memory configuration. The onboard mSATA connector on Leopard has been replaced with an M.2 slot to support M.2 NVMe SSDs. The chassis is also compatible with Open Rack v2.

Tioga Pass upgrades the PCIe slot from x24 to x32, which allows for two x16 slots, or one x16 slot and two x8 slots, to make the server more flexible as the head node for both the Big Basin JBOG and Lightning JBOF. This doubles the available PCIe bandwidth when accessing either GPUs or flash. The addition of a 100G network interface controller (NIC) also enables higher-bandwidth access to flash storage when used as a head node for Lightning. This is also Facebook’s first dual-CPU server to use OpenBMC after it was introduced with our Mono Lake server last year.

The preliminary specification for Tioga Pass can be found at the Open Compute Project site.

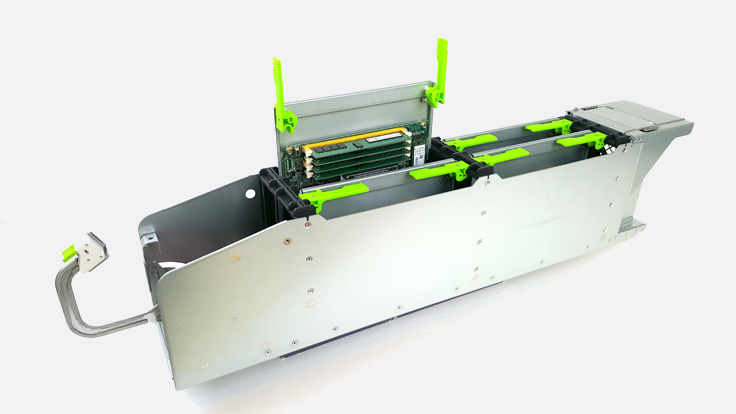

Yosemite v2 and Twin Lakes

Yosemite v2 is a refresh of Yosemite, our first-generation multi-node compute platform that holds four 1S server cards, and provides the flexibility and power efficiency for high-density, scale-out data centers. Although Yosemite v2 uses a new 4 OU vCubby chassis design, it is still compatible with Open Rack v2. Each cubby supports four 1S server cards, or two servers plus two device cards. Each of the four servers can connect to either a 50G or 100G multi-host NIC.

Unlike Yosemite, the new power design supports hot service — servers can continue to operate and don’t need to be powered down when the sled is pulled out of the chassis for components to be serviced. With the previous design, repairing a single server prevents access to the other three servers since all four servers lose power.

The Yosemite v2 chassis supports both Mono Lake as well as the next-generation Twin Lakes 1S server. It also supports device cards such as the Glacier Point SSD carrier card and Crane Flat device carrier card. This enables us to add increased flash storage as well as support for standard PCIe add-in cards. Like the previous generation, Yosemite v2 also supports OpenBMC.

The preliminary specification for Yosemite v2 can be found at the Open Compute Project site.

Learn more

As our infrastructure has scaled, we’ve had to continue to innovate. By designing and building our own servers, we’ve been able to break down the traditional computing components and rebuild them into modular disaggregated systems. This allows us to replace the hardware or software as soon as better technology becomes available, and provides us with the flexibility and efficiency we need. We’re thrilled to bring this flexibility and efficiency to the industry through the Open Compute Project.

More information on the hardware designs, including preliminary specifications, are available on the Open Compute Project website.