In hardware design, there are two broad approaches to meeting the vast computing needs of a site like Facebook. You can “scale up,” building ever more computing power into a given system. Or you can “scale out,” building an ever-growing fleet of simple systems, each with a moderate amount of computing power.

The distinction matters most for two-socket (2S) computing platforms, which have become scale-up systems. 2S has long been the mainstream server architecture, and for good reason: with multiple high-performance processors, it’s strong and versatile. But it’s also bulky and power-hungry; in other words, it’s not optimized for scale-out uses. As we continued to evolve our infrastructure, we realized 2S was the wrong tool for some of our needs. To give our infrastructure capacity that scales out with demand, we designed a modular chassis, code-named “Yosemite,” that holds high-powered system-on-a-chip (SoC) processor cards. Today, we’re proposing the Yosemite design as a contribution to the Open Compute Project (OCP) for all members of the community to build on and deploy.

We started experimenting with SoCs about two years ago. At that time, the SoC products on the market were mostly lightweight, focused on small cores and low power; most drew less than 30W. Our first approach was to pack up to 36 SoCs into a 2U enclosure, which could scale to as many as 540 SoCs per rack. But that solution didn’t work well: single-thread performance was too low, which increased latency for our web platform. Based on that experiment, we set our sights on higher-power processors while keeping the modular SoC approach.

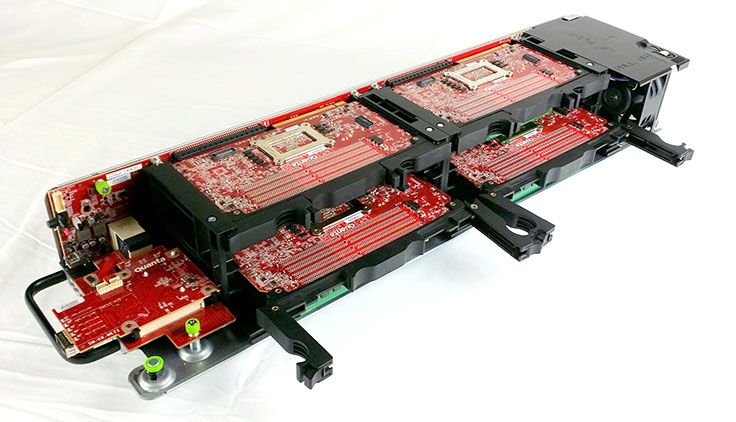

For Yosemite, we defined each server node as a pluggable module. Each module holds one SoC targeting up to 65W TDP, multiple memory channels with standard DDR DIMM slots, at least one local SSD interface, and a local management controller. We also standardized the module interface so that compliant cards and systems can interoperate. This interface extends the original “Group Hug” OCP microserver interface, adding a PCIe x16 connector to provide more I/O. The Yosemite system holds four SoC cards within a 400W total power budget, which allows about 90W for each SoC card. To simplify external connectivity for this modular server system, we specify one shared network connection that carries both data and management traffic.
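The power figures above can be checked with a little arithmetic. The sketch below uses only the numbers stated in this post (a 400W system budget, four cards, about 90W per card, a 65W SoC TDP); the constant and function names are chosen here for illustration. It shows that four cards at their full allowance use 360W, leaving roughly 40W of headroom in the system budget, and that each card has about 25W beyond the SoC TDP, presumably for its memory, local SSD, and management controller.

```python
# Back-of-the-envelope power check for one Yosemite system.
# All figures come from the text; the names are illustrative only.
CHASSIS_BUDGET_W = 400   # total power budget for the four-card system
CARDS_PER_CHASSIS = 4
CARD_BUDGET_W = 90       # approximate allowance per SoC card
SOC_TDP_W = 65           # target SoC TDP on each card


def power_headroom(chassis_w: float, cards: int, card_w: float) -> float:
    """Watts left in the system budget when every card draws its full allowance."""
    return chassis_w - cards * card_w


headroom = power_headroom(CHASSIS_BUDGET_W, CARDS_PER_CHASSIS, CARD_BUDGET_W)
# Budget on each card beyond the SoC itself (memory, SSD, management controller).
per_card_non_soc = CARD_BUDGET_W - SOC_TDP_W

print(f"Cards at full allowance: {CARDS_PER_CHASSIS * CARD_BUDGET_W} W")
print(f"Remaining system headroom: {headroom} W")
print(f"Per-card budget beyond the SoC TDP: {per_card_non_soc} W")
```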

Looking more closely at the Yosemite design, we consider the following elements essential to the system:

- A server-class SoC with multiple memory channels, delivering high performance within a 65W TDP for the SoC and 90W for the whole server card.

- A standard SoC card interface, which makes the system interface CPU-agnostic.

- A platform-agnostic management solution that manages the system and its four SoC server cards, regardless of vendor.

- A multi-host network interconnect card following the OCP Mezzanine Card 2.0 specification, which connects up to four SoC server cards through a single Ethernet port.

- A cost-effective, flexible, and easy-to-service system structure.
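To make the idea of a CPU-agnostic card interface with vendor-agnostic management concrete, here is a small Python model. Everything in it (`SocCard`, `ExampleCard`, `Chassis`, `read_power_w`, and the vendor names) is hypothetical and is not the actual Yosemite card interface or management protocol. The point it illustrates is that the chassis-side code depends only on a shared interface, so cards from different vendors go through the same code path, and the chassis enforces its four-slot limit.

```python
from dataclasses import dataclass, field
from typing import Protocol


class SocCard(Protocol):
    """Hypothetical interface any compliant SoC card would expose to the manager."""

    vendor: str

    def read_power_w(self) -> float: ...


@dataclass
class ExampleCard:
    """Stand-in card; a real card would report readings from its management controller."""

    vendor: str
    power_w: float

    def read_power_w(self) -> float:
        return self.power_w


@dataclass
class Chassis:
    """Four-slot chassis whose manager relies only on the SocCard interface."""

    max_slots: int = 4
    slots: dict[int, SocCard] = field(default_factory=dict)

    def insert(self, slot: int, card: SocCard) -> None:
        # Reject slots outside the chassis and slots that are already populated.
        if not 0 <= slot < self.max_slots:
            raise ValueError(f"slot {slot} out of range 0..{self.max_slots - 1}")
        if slot in self.slots:
            raise ValueError(f"slot {slot} already occupied")
        self.slots[slot] = card

    def report(self) -> list[str]:
        # Same code path for every card, whatever the vendor.
        return [
            f"slot {slot}: {card.vendor} at {card.read_power_w():.0f} W"
            for slot, card in sorted(self.slots.items())
        ]


chassis = Chassis()
chassis.insert(0, ExampleCard("vendor-a", 72.0))
chassis.insert(1, ExampleCard("vendor-b", 88.0))
for line in chassis.report():
    print(line)
```

A `Protocol` is used here so that any card class with a matching shape qualifies without inheriting from a common base, which mirrors the goal of letting independently built, compliant cards interoperate with the system.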

This system will be fully compatible with Open Rack, which can accommodate up to 192 SoC server cards in a single rack. We’re happy to say that Mellanox has already enabled multi-host support in its next-generation ConnectX®-4 OCP Mezzanine Card. With the design proposed as a contribution to OCP, we’re excited to see what the rest of the community builds and deploys based on it.
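As a rough check on what that density means, the sketch below derives the number of Yosemite systems per rack from the 192-card figure and an upper bound on their combined power. It assumes every system runs at its full 400W budget, which is an assumption for illustration rather than a measured draw. It also restates the first low-power experiment (36 SoCs per 2U enclosure, 540 SoCs per rack) for comparison: Yosemite trades SoC count for much higher per-SoC power.

```python
# Rack-level arithmetic using figures from this post (names are illustrative).
CARDS_PER_RACK = 192        # Open Rack capacity for Yosemite SoC cards
CARDS_PER_CHASSIS = 4
CHASSIS_BUDGET_W = 400      # per-system budget, used here as an upper bound

chassis_per_rack = CARDS_PER_RACK // CARDS_PER_CHASSIS
# Assumes every system draws its full budget; real draw will be lower.
max_rack_power_w = chassis_per_rack * CHASSIS_BUDGET_W

# First experiment: up to 36 low-power SoCs per 2U enclosure, 540 SoCs per rack.
EARLY_SOCS_PER_ENCLOSURE = 36
EARLY_SOCS_PER_RACK = 540
early_enclosures = EARLY_SOCS_PER_RACK // EARLY_SOCS_PER_ENCLOSURE

print(f"Yosemite systems per rack: {chassis_per_rack}")
print(f"Upper bound on rack power: {max_rack_power_w} W")
print(f"2U enclosures in the first experiment: {early_enclosures}")
```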