- We’ve partnered with Voltron Data and the Arrow community to align and converge Apache Arrow with Velox, Meta’s open source execution engine.

- Apache Arrow 15 includes three new format layouts developed through this partnership: StringView, ListView, and Run-End-Encoding (REE).

- This new convergence helps Meta and the larger community build data management systems that are unified, more efficient, and composable.

Meta’s Data Infrastructure teams have been rethinking how data management systems are designed. We want to make our data management systems more composable: instead of developing each system individually as a monolith, we identify common components, factor them out as reusable libraries, and leverage common APIs and standards to increase the interoperability between them.

As we decompose our large, monolithic systems into a more modular stack of reusable components, open standards, such as Apache Arrow, play an important role in the interoperability of these components. To further our efforts in creating a more unified data landscape for our systems as well as those in the larger community, we’ve partnered with Voltron Data and the Arrow community to converge Apache Arrow’s open source columnar layouts with Velox, Meta’s open source execution engine.

The result combines the efficiency and agility offered by Velox with the widely used Apache standard.

Why we need a composable data management system

Meta’s data engines support large-scale workloads that include processing large datasets offline (ETL), interactive dashboard generation, ad hoc data exploration, and stream processing. More recently, a variety of feature engineering, data preprocessing, and training systems were built to support our rapidly expanding AI/ML infrastructure. To ensure our engineering teams can efficiently maintain and enhance these engines as our products evolve, Meta has started a series of projects aimed at increasing our engineering efficiency by minimizing the duplication of work, improving the experience of internal data users through more consistent semantics across these engines, and, ultimately, accelerating the pace of innovation in data management.

An introduction to Velox

Velox is the first project in our composable data management system program. It’s a unified execution engine, implemented as a C++ library, aimed at replacing the very processing core of many of these data management systems – their execution engine.

Velox improves the efficiency of these systems by providing a unified, state-of-the-art implementation of features and optimizations that were previously only available in individual engines. It also improves the engineering efficiency of our organization since these features can now be written once, in a single library, and be (re-)used everywhere.

Velox is currently in different stages of integration in more than 10 of Meta’s data systems. We have observed 3-10x efficiency improvements in integrations with well-known systems in the industry like Apache Spark and Presto.

We open-sourced Velox in 2022. Today, it is developed in collaboration with more than 200 individual contributors around the world from more than 20 companies.

Open standards and Apache Arrow

In order to enable interoperability with other components, a composable data management system has to understand common storage (file) formats, network serialization protocols, table APIs, and have a unified way of expressing computation. Oftentimes these components have to directly share in-memory datasets with each other, for example, when transferring data across language boundaries (C++ to Java or Python) for efficient UDF support.

Our focus is to use open standards in these APIs as often as possible. Apache Arrow is an open source in-memory layout standard for columnar data that has been widely adopted in the industry. In a way, Arrow can be seen as the layer underneath Velox: Arrow describes how columnar data is represented in memory; Velox provides a series of execution and resource management primitives to process this data.

Although the Arrow format predates Velox, we made a conscious design decision while creating Velox to extend and deviate from the Arrow format, creating a layout we call Velox Vectors. The purpose was to accelerate the data processing operations commonly found in our workloads in ways that were not possible using Arrow. Velox Vectors provided the efficiency and agility we need to move fast, but in return created a fragmented space with limited component interoperability.

To bridge this gap and create a more unified data landscape for our systems and the community, we partnered with Voltron Data and the Arrow community to align and converge these two formats. After a year of work, the new Apache Arrow release, Apache Arrow 15.0.0, includes three new format layouts inspired by Velox Vectors: StringView, ListView, and Run-End-Encoding (REE).

Arrow 15 not only enables efficient (zero-copy) in-memory communication across components using Velox and Arrow, but also increases Arrow’s applicability in modern execution engines, unlocking a variety of use cases across the industry.

Details of the Arrow and Velox layout

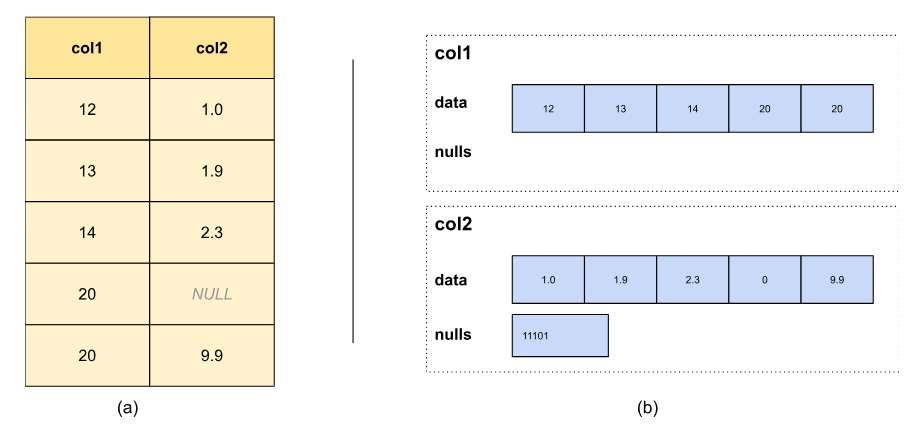

Both Arrow and Velox Vectors are columnar layouts whose purpose is to represent batches of data in memory. A column is usually composed of a sequential buffer where row values are stored contiguously and an optional bitmask to represent the nullability/validity of each value:
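As a minimal sketch of this layout (not Arrow's or Velox's actual code), the Python below splits a list of optional integers into a values buffer and a validity bitmap. Python lists stand in for raw memory buffers, and the helper names `build_int_column` and `get_value` are hypothetical. The bitmap follows Arrow's convention of setting bit i, least-significant bit first within each byte, when row i is non-null.

```python
from typing import List, Optional, Tuple


def build_int_column(values: List[Optional[int]]) -> Tuple[List[int], bytearray]:
    """Split optional ints into a contiguous values buffer and a validity bitmap.

    Bit i (least-significant bit first within each byte) is 1 when row i is
    valid, i.e. non-null.
    """
    data: List[int] = []
    validity = bytearray((len(values) + 7) // 8)  # one bit per row, rounded up
    for i, v in enumerate(values):
        if v is None:
            data.append(0)  # null rows still occupy a slot; its value is ignored
        else:
            data.append(v)
            validity[i // 8] |= 1 << (i % 8)
    return data, validity


def get_value(data: List[int], validity: bytearray, i: int) -> Optional[int]:
    """Read row i, checking the validity bit before touching the values buffer."""
    bit = (validity[i // 8] >> (i % 8)) & 1
    if bit == 0:
        return None
    return data[i]


data, validity = build_int_column([7, None, 42, None, 5])
print(data, bin(validity[0]))  # [7, 0, 42, 0, 5] 0b10101
```

Keeping a slot for null rows is what lets fixed-size values be addressed directly by row index, regardless of how many nulls precede them.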

The Arrow and Velox Vectors formats already had compatible layout representations for scalar fixed-size data types (such as integers, floats, and booleans) and dictionary-encoded data. However, there were incompatibilities in string representation and container types such as arrays and maps, and a lack of support for constant and run-length-encoded (RLE) data.

StringView – strings

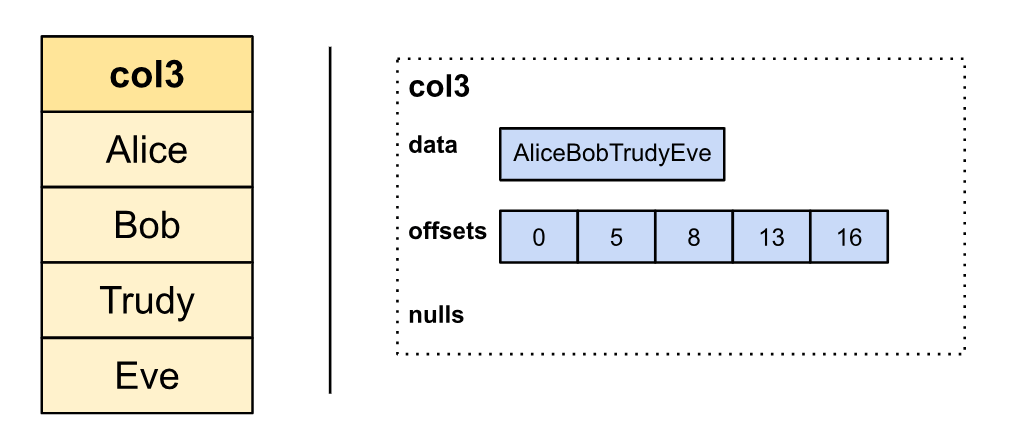

Arrow’s typical string representation uses the variable-sized element layout, which consists of one contiguous buffer containing the string contents (the data), and one buffer marking where each string starts (the offsets). The size of a string i can be obtained by subtracting offsets[i+1] by offsets[i]. This is equivalent to representing strings as an array of characters:
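The offsets-based layout can be sketched in a few lines of Python. This is an illustration under simplifying assumptions (a Python list for the offsets buffer, `bytes` for the data buffer, and the hypothetical helper names `build_string_column` and `string_at`), not Arrow's implementation.

```python
from typing import List, Tuple


def build_string_column(strings: List[str]) -> Tuple[List[int], bytes]:
    """Concatenate UTF-8 strings into one data buffer plus an offsets buffer.

    The offsets buffer has len(strings) + 1 entries, and string i occupies
    data[offsets[i]:offsets[i + 1]].
    """
    offsets = [0]
    chunks = []
    for s in strings:
        encoded = s.encode("utf-8")
        chunks.append(encoded)
        offsets.append(offsets[-1] + len(encoded))  # where the next string starts
    return offsets, b"".join(chunks)


def string_at(offsets: List[int], data: bytes, i: int) -> str:
    # The size of string i is offsets[i + 1] - offsets[i].
    return data[offsets[i]:offsets[i + 1]].decode("utf-8")


offsets, data = build_string_column(["Velox", "", "Arrow"])
print(offsets, data)  # [0, 5, 5, 10] b'VeloxArrow'
```

Because offsets[i + 1] depends on the sizes of all earlier strings, rows in this layout have to be written in order.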

While Arrow’s representation stands out in simplicity, we found through a series of experiments that the following alternate string representation (which is now referred to as StringView) provides compelling properties that are important for efficient string processing:

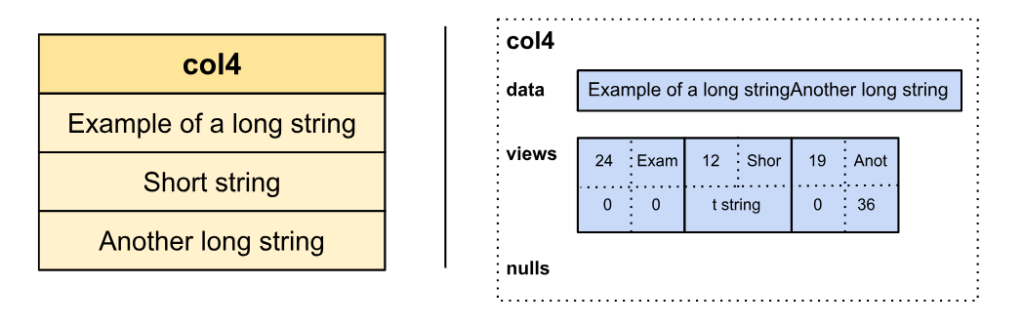

In the new representation, the first four bytes of the view object always contain the string size. If the string is short (up to 12 bytes), its contents are stored inline in the remaining 12 bytes of the view. Otherwise, a prefix of the string is stored in the next four bytes, followed by the buffer ID (StringViews can contain multiple data buffers) and the offset in that data buffer, four bytes each.
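The sketch below packs a single 16-byte view with Python's `struct` module, following the field order described above. Little-endian byte order, zero padding of short inline strings, and the names `make_view` and `read_view` are assumptions made for illustration; the Arrow columnar format specification remains the normative description of the layout.

```python
import struct
from typing import List

INLINE_LIMIT = 12  # strings of up to 12 bytes fit entirely in the view


def make_view(value: bytes, buffer_index: int = 0, offset: int = 0) -> bytes:
    """Pack one 16-byte view. For long strings, value must already have been
    copied into data buffer `buffer_index` at byte `offset`."""
    if len(value) <= INLINE_LIMIT:
        # int32 length + up to 12 inline bytes ("12s" zero-pads the rest)
        return struct.pack("<i12s", len(value), value)
    # int32 length + 4-byte prefix + int32 buffer index + int32 offset
    return struct.pack("<i4sii", len(value), value[:4], buffer_index, offset)


def read_view(view: bytes, data_buffers: List[bytes]) -> bytes:
    """Return the string a view refers to."""
    (length,) = struct.unpack_from("<i", view, 0)
    if length <= INLINE_LIMIT:
        return view[4:4 + length]  # no data buffer access needed
    _, _prefix, buffer_index, offset = struct.unpack("<i4sii", view)
    return data_buffers[buffer_index][offset:offset + length]


data_buffers = [b"a string longer than twelve bytes"]
short_view = make_view(b"Velox")
long_view = make_view(data_buffers[0], buffer_index=0, offset=0)
print(len(short_view), len(long_view))  # 16 16
```

Both kinds of view occupy exactly 16 bytes, which is what allows views to be addressed by row index just like fixed-size values.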

The benefits of this layout are:

- Small strings of up to 12 bytes are fully inlined within the views buffer and can be read without dereferencing the data buffer. This improves memory locality and performance, since the typical cache miss of accessing the data buffer is avoided.

- Since StringViews store a small (four bytes) prefix with the view object, string comparisons can fail-fast and, in many cases, avoid accessing the data buffer. This property speeds up common operations such as highly selective filters and sorting.

- StringView gives developers more flexibility in how string data is laid out in memory. For example, it allows certain common string operations, such as trim() and substr(), to be executed zero-copy by only updating the view object.

- Since StringView’s view object has a fixed size (16 bytes), StringViews can be written out of order (e.g., first writing StringView at position 2, then 0 and 1).
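To make the comparison and substring benefits concrete, the following sketch models a view as an unpacked Python dataclass rather than the packed 16-byte struct. The `View` class and the `view_of`, `contents`, `equal`, and `substr` helpers are hypothetical. They show a comparison that rejects mismatches from the length and prefix alone, and a substring that, for long results, only builds a new view over the existing data buffer.

```python
from dataclasses import dataclass
from typing import List, Optional

INLINE_LIMIT = 12


@dataclass(frozen=True)
class View:
    """Unpacked stand-in for a 16-byte string view (not the packed layout)."""
    length: int
    prefix: bytes            # first min(length, 4) bytes, always held in the view
    inline: Optional[bytes]  # whole string if length <= INLINE_LIMIT, else None
    buffer_index: int = -1   # only meaningful for non-inlined strings
    offset: int = -1


def view_of(value: bytes, buffer_index: int = -1, offset: int = -1) -> View:
    """Build a view; long values must already live at buffers[buffer_index][offset:]."""
    if len(value) <= INLINE_LIMIT:
        return View(len(value), value[:4], value)
    return View(len(value), value[:4], None, buffer_index, offset)


def contents(v: View, buffers: List[bytes]) -> bytes:
    if v.inline is not None:
        return v.inline
    return buffers[v.buffer_index][v.offset:v.offset + v.length]


def equal(a: View, b: View, buffers: List[bytes]) -> bool:
    # Fail fast on a length or prefix mismatch without dereferencing any data buffer.
    if a.length != b.length or a.prefix != b.prefix:
        return False
    return contents(a, buffers) == contents(b, buffers)


def substr(v: View, buffers: List[bytes], start: int, length: int) -> View:
    """Return a view of the string's [start, start + length) range; assumes it is in bounds."""
    if v.inline is None and length > INLINE_LIMIT:
        # Zero-copy: same data buffer, shifted offset; only the view is new.
        prefix = buffers[v.buffer_index][v.offset + start:v.offset + start + 4]
        return View(length, prefix, None, v.buffer_index, v.offset + start)
    # Short results are inlined into the view itself (at most 12 bytes copied).
    value = contents(v, buffers)[start:start + length]
    return View(length, value[:4], value)


buffers = [b"composable data management"]
full = view_of(buffers[0], 0, 0)
tail = substr(full, buffers, 11, 15)  # "data management": same buffer, offset 11
head = substr(full, buffers, 0, 10)   # "composable": short enough to inline
```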

Besides these properties, we have found that other modern processing engines and libraries, like Umbra and DuckDB, follow a similar approach to string representation and, consequently, also deviated from Arrow. In Arrow 15, StringView has been added as a supported layout and can now be used to efficiently transfer string batches across these systems.

ListView – variable-sized containers

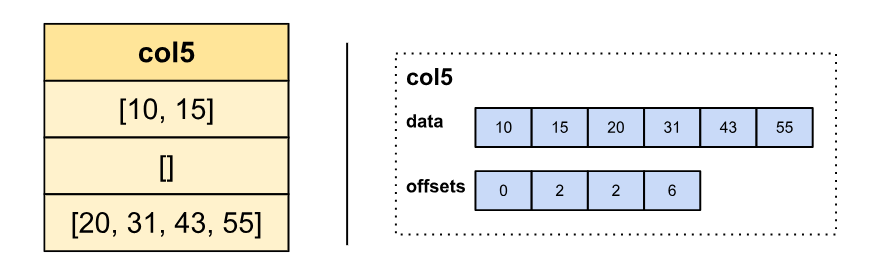

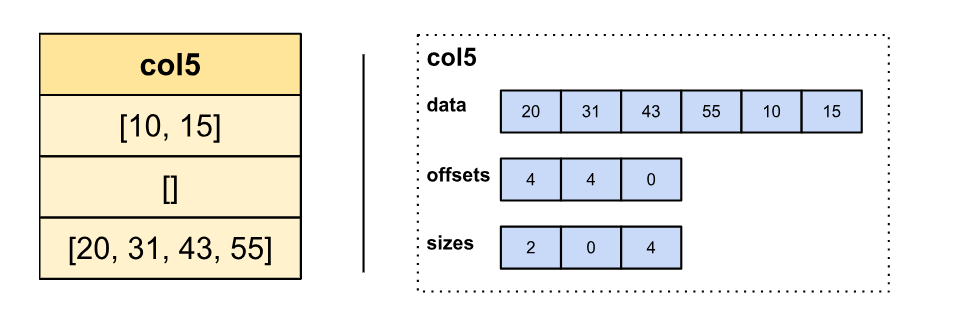

Variable-size containers like arrays and maps are represented in Arrow using one buffer containing the flattened elements from all rows, and one offsets buffer marking where the container on each row starts, similar to the original string representation. The number of elements a container on row i stores can be obtained by subtracting offsets[i+1] by offsets[i]:

To efficiently support execution of vectorized conditionals (e.g., IF and SWITCH operations), the Velox Vectors layout has to allow developers to write columns out of order. This means that developers can, for example, first write all even row records then all odd row records without having to reorganize elements that have already been written.

Primitive types can always be written out of order since the element size is constant and known beforehand. Likewise, strings can also be written out of order using StringView because the string metadata objects have a constant size (16 bytes), and string contents do not need to be written contiguously. To increase flexibility and support out-of-order writes for the remaining variable-sized types in Velox, we decided to keep both lengths and offsets buffers:

To bridge the gap, a new format called ListView has been added to Arrow 15. It allows the representation of variable-sized elements that have both lengths and offsets buffers.

Beyond enabling efficient execution of conditionals, ListView gives developers more flexibility to slice and rearrange containers (e.g., operations like slice() and trim_array() can be implemented zero-copy), and it also allows containers to have overlapping ranges of elements.
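A hypothetical `ListViewBuilder` illustrates why keeping both offsets and lengths (Arrow names the latter the sizes buffer) permits out-of-order writes and overlapping rows. It is a simplified sketch with no null handling, not the Arrow or Velox API.

```python
from typing import List


class ListViewBuilder:
    """Hypothetical builder: row i is values[offsets[i]:offsets[i] + sizes[i]]."""

    def __init__(self, num_rows: int) -> None:
        self.values: List[int] = []   # flattened child elements
        self.offsets = [0] * num_rows
        self.sizes = [0] * num_rows

    def write(self, row: int, items: List[int]) -> None:
        # Append the elements at the end of the child buffer and record where
        # they landed; earlier rows never move, so rows may arrive in any order.
        self.offsets[row] = len(self.values)
        self.sizes[row] = len(items)
        self.values.extend(items)

    def share(self, row: int, source_row: int, start: int, length: int) -> None:
        # Point a row at a sub-range of another row's elements (zero-copy slice).
        # Offsets need not be monotonic, so ranges may overlap.
        self.offsets[row] = self.offsets[source_row] + start
        self.sizes[row] = length

    def row(self, i: int) -> List[int]:
        return self.values[self.offsets[i]:self.offsets[i] + self.sizes[i]]


b = ListViewBuilder(4)
b.write(0, [1, 2, 3])  # rows 0 and 2 first, as one branch of an IF might produce
b.write(2, [7])
b.write(1, [4, 5])     # row 1 later, without reorganizing rows 0 and 2
b.share(3, 0, 1, 2)    # row 3 = [2, 3], overlapping row 0's elements
print([b.row(i) for i in range(4)])  # [[1, 2, 3], [4, 5], [7], [2, 3]]
```

With an offsets-only layout, the same sequence would have required knowing the size of row 1 before writing row 2.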

REE – more encodings

We also added two encoding formats commonly found in data warehouse workloads to Velox: constant encoding, which represents a column in which all values are the same and is typically used for literals and partition keys; and RLE, which compactly represents consecutive runs of the same element.

After discussion with the community, the REE format was added to Arrow. REE is a slight variation of RLE that, instead of storing the length of each run, stores the offset at which each run ends. Because these run ends increase monotonically, the run containing any given row can be found with a binary search, which provides better random-access support. With REE it is also possible to represent constant-encoded values as a single run whose size is the entire batch.
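The sketch below converts RLE run lengths into run ends and then looks up individual rows with Python's `bisect` module. The helper names are hypothetical, and plain lists stand in for the run-ends and values children of an Arrow REE array; it illustrates the idea rather than any library's implementation.

```python
from bisect import bisect_right
from itertools import accumulate
from typing import List, TypeVar

T = TypeVar("T")


def rle_to_run_ends(run_lengths: List[int]) -> List[int]:
    """Turn RLE run lengths into REE run ends (cumulative, exclusive end offsets)."""
    return list(accumulate(run_lengths))


def ree_get(run_ends: List[int], values: List[T], row: int) -> T:
    """O(log runs) random access: row belongs to the first run whose end exceeds it."""
    if not 0 <= row < run_ends[-1]:
        raise IndexError(row)
    return values[bisect_right(run_ends, row)]


run_ends = rle_to_run_ends([3, 1, 2])  # [3, 4, 6]
values = ["a", "b", "c"]
print([ree_get(run_ends, values, r) for r in range(6)])  # ['a', 'a', 'a', 'b', 'c', 'c']

# A constant column of 1,000 rows is a single run covering the whole batch.
print(ree_get([1000], ["US"], 999))  # US
```

With run lengths instead of run ends, the same lookup would need a linear scan (or a separately maintained prefix sum) to find the run for a given row.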

Composability is the future of data management

Converging Arrow and Velox’s memory layout is an important step towards making data management systems more composable. It enables systems to combine the power of Velox’s state-of-the-art execution with the widespread industry adoption of Arrow’s standard, resulting in more efficient and seamless cooperation between them. The new extensions are already seeing adoption in libraries like PyArrow and Polars and within Meta. In the future, it will allow more efficient interplay between projects like Apache Gluten (which uses Velox internally) and PySpark (which consumes Arrow), for example.

We envision that fragmentation and duplication of work can be reduced by decomposing data systems into reusable components which are open source and built based on open standards and APIs. Ultimately, we hope this work will help provide the foundation required to accelerate the pace of innovation in data management.

Acknowledgments

This format alignment was only possible due to a broad collaboration across different groups. A special thank you to Masha Basmanova, Orri Erling, Xiaoxuan Meng, Krishna Pai, Jimmy Lu, Kevin Wilfong, Laith Sakka, Wei He, Bikramjeet Vig, and Sridhar Anumandla from the Velox team at Meta; Felipe Carvalho, Ben Kietzman, Jacob Wujciak-Jens, Srikanth Nadukudy, Wes McKinney, and Keith Kraus from Voltron Data; and the entire Apache Arrow community for the insightful discussions, feedback, and receptivity to new ideas.