- We are rolling out a new video calling library to all the relevant products across our apps and services, including Instagram, Messenger, Portal, and Workplace chat.

- To create a library generic enough to support all these different use cases, we needed to rewrite our existing library from scratch using the latest version of the open source WebRTC library, an incredibly rare undertaking that involved engineers from across the company.

- Compared with the previous library, rsys is capable of supporting multiple platforms, including Android, iOS, MacOS, Windows, and Linux. It is approximately 20 percent smaller, which makes it easy to integrate into size-constrained platforms, such as Messenger Lite. Rsys has approximately 90 percent unit test coverage and a thorough integration testing framework that covers all our major calling scenarios.

- We accomplished this by optimizing libraries and architecture for binary size wherever possible, separating the pieces needed for calling into isolated, standalone modules, and leveraging cross-platform solutions that aren’t reliant on the operating system and environment.

Facebook’s initial version of video calling was written on top of a seven-year-old fork of WebRTC specifically to enable native audio calling in Messenger. At that time, our goal was to build the most feature-rich experience possible for our users. Since then, we’ve added video calling, group calling, video chat heads, and interactive AR effects. With millions of people using video calling every month, the full-featured library that looked simple on the surface had become far more complex behind the scenes. We had a large amount of Messenger-specific code, which made it hard to support apps like Portal and Instagram. We had separate signaling protocols for group calling and peer-to-peer calling, which required us to write features twice and created a large divide in the codebase. We were also spending an increasing amount of time keeping our fork of WebRTC updated with the latest improvements from open source. Finally, we were falling short of our goal of providing reliable service for low-powered devices and in low-bandwidth scenarios.

In looking at how we could make video calling more efficient and easier to scale for the future, we realized that our best way forward was to redesign and rewrite the entire library from the ground up. The result is rsys, a video calling library that allows us to make use of significant advancements made in the video calling space since the original library was written in 2014. Compared to the previous version, rsys is approximately 20 percent smaller and available on all the platforms we develop for, including Android, iOS, MacOS, Windows, and Linux. With this new iteration, we’re reimagining how we think about our video calling platform, starting with a new client core and extensibility framework. This helps us advance our own state-of-the-art technologies, and the new codebase is designed to be sustainable and scalable for the next decade, laying the foundation for remote presence and interoperability across apps.

Smaller and faster

A smaller library loads, updates, and starts faster for the person using it, regardless of the device type or network conditions. A small library is also easier to manage, update, test, and optimize. When we started thinking about this new version, our peak binary size had reached 20 MB. We were able to get some reduction by editing a few sections of code, but to get where we wanted, we realized we’d need to rewrite the entire library from scratch.

The simplest way to get a smaller library would have been to strip away many of the features we’ve added over the years, but it was important to us to keep all the most-used features, like AR effects. So we stepped back and looked at how we could apply what we’ve learned over the past decade and what we know about the needs of the people using our apps today. After exploring our options, we decided we needed to look past the interface and dig into the infrastructure of the library itself.

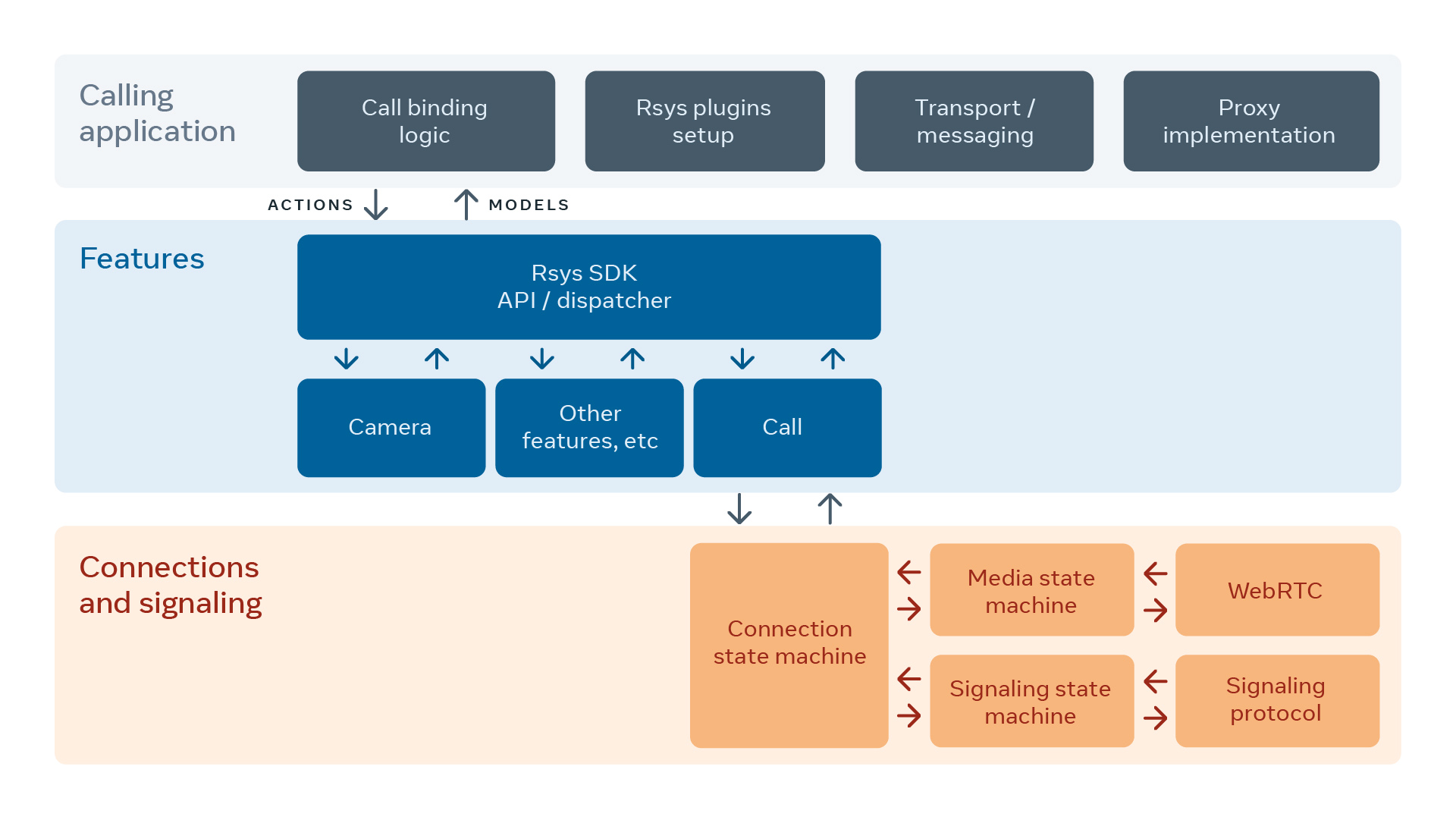

We made several architectural choices to optimize for size. We introduced a plug-and-play framework that uses build-time selects to compile features only into the apps that need them, along with a generic framework for writing new features based on Flux architecture. We also moved away from heavily templated generic libraries like Folly and toward more compact libraries like Boost.SML to realize size gains across all apps.
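To make the idea of compiling features only into the apps that need them more concrete, here is a minimal C++ sketch of one common pattern, assuming each optional feature lives in its own module that the build either includes or leaves out. The names (`Feature`, `featureRegistry`, `FeatureRegistrar`, and the `ar_effects` and `video_chat_heads` modules) are hypothetical and not rsys’s actual API. Each module registers itself through a static object, so the core only ever sees the features that were linked into a given app.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical interface that every pluggable feature implements.
class Feature {
 public:
  virtual ~Feature() = default;
  virtual std::string name() const = 0;
};

using FeatureFactory = std::function<std::unique_ptr<Feature>()>;

// A function-local static is constructed on first use, so registrars in
// other translation units can safely call it during static initialization.
std::map<std::string, FeatureFactory>& featureRegistry() {
  static std::map<std::string, FeatureFactory> registry;
  return registry;
}

// Constructing one of these at namespace scope registers a feature.
struct FeatureRegistrar {
  FeatureRegistrar(const std::string& name, FeatureFactory factory) {
    featureRegistry().emplace(name, std::move(factory));
  }
};

// Module "ar_effects" (hypothetical): in a real build this would sit in its
// own source file and library, compiled into an app only when selected.
class ArEffectsFeature : public Feature {
 public:
  std::string name() const override { return "ar_effects"; }
};
static FeatureRegistrar kArEffects{
    "ar_effects", []() -> std::unique_ptr<Feature> {
      return std::make_unique<ArEffectsFeature>();
    }};

// Module "video_chat_heads" (hypothetical): another optional feature.
class VideoChatHeadsFeature : public Feature {
 public:
  std::string name() const override { return "video_chat_heads"; }
};
static FeatureRegistrar kVideoChatHeads{
    "video_chat_heads", []() -> std::unique_ptr<Feature> {
      return std::make_unique<VideoChatHeadsFeature>();
    }};

// Core code instantiates whatever was linked in without naming any feature.
// std::map iterates in key order, so the result is sorted by feature name.
std::vector<std::unique_ptr<Feature>> instantiateLinkedFeatures() {
  std::vector<std::unique_ptr<Feature>> features;
  for (const auto& entry : featureRegistry()) {
    features.push_back(entry.second());
  }
  return features;
}
```

One caveat with this pattern: if a feature module is packaged as a static library and nothing references its symbols, the linker may drop the registrar along with the feature, so builds that rely on self-registration typically force whole-archive linking for those modules.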

In the end, we reduced core binary size by around 20 percent, from approximately 9 MB to approximately 7 MB. We accomplished this by rebuilding our features to fit a simplified architecture and design. We kept most of the features, and we will continue to introduce more pluggable features over time. Fewer lines of code make the library lighter, faster, and more reliable, and a streamlined code base means engineers can innovate more quickly.
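As a quick check on these figures, the rounded sizes give a reduction of a little over one fifth, consistent with "around 20 percent":

$$\frac{9\ \text{MB} - 7\ \text{MB}}{9\ \text{MB}} = \frac{2}{9} \approx 22\%$$

Because both sizes are approximate, the exact percentage depends on the unrounded values.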

One of our main goals with this work was to minimize code complexity and eliminate redundancies. We knew a unified architecture would allow for global optimization (instead of having each feature focused on local optimizations) and allow code to be shared across features instead of duplicated. To build this unified architecture, we made a few major changes:

- Signaling: We came up with a state machine architecture for the signaling stack that could unify the protocol semantics for both peer-to-peer and group calling. We were able to abstract protocol-specific details away from the rest of the library and provide a signaling component whose sole responsibility is negotiating shared state between call participants. By cutting duplicate code, we can write features once, make protocol changes easily, and provide a unified user experience for peer-to-peer and group calling.

- Media: We decided to reuse our state machine architecture and apply it to the media stack as well, but this time we captured the semantics of open source WebRTC APIs. In parallel, we also worked on replacing our forked version of WebRTC with the latest version, keeping any product-specific optimizations. This gave us the ability to change the WebRTC version underneath the state machine, as long as the semantics of the APIs themselves did not change significantly, and to set up regular pulls from the open source codebase. This allows us to easily update to the latest features without any downtime or delays.

- SDK: To support feature-specific state, we used Flux architecture to manage data and provide an API for calling products that works similarly to the React JS–based applications familiar to web developers. Each API call results in specific actions being routed through a central dispatcher. These actions are then handled by specific reducer classes, which emit model objects based on the type of action. These model objects are sent to bridges that contain all the feature-specific business logic and can result in subsequent actions that change the model. Finally, all model updates are sent to the UI, where they are converted into platform-specific view objects for rendering. This lets us clearly define a feature as a combination of a reducer, a bridge, actions, and models, which in turn allows us to make features configurable at runtime for different apps.

- OS: To make our platform generic and scalable across various products, we decided to abstract away any functionality that directly depends on the OS. We knew that platform-specific code for Android, iOS, and other platforms was necessary for certain functions, like creating hardware encoders and decoders or threading abstractions, but we tried to create generic interfaces for these so that platforms such as MacOS and Windows could easily plug in by providing different implementations through proxy objects. We also made heavy use of Buck’s cxx_library rule to easily configure platform-specific libraries, including their compiler flags and linker arguments.
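The Signaling item above describes a single state machine that both peer-to-peer and group calls feed into. The following C++ sketch illustrates that idea under simplifying assumptions: the states, events, message strings, and class names (`SignalingProtocol`, `PeerToPeerProtocol`, `GroupProtocol`, `SignalingComponent`) are all hypothetical, and a plain `switch` stands in for a state machine library such as Boost.SML so the example has no dependencies. Each protocol adapter only translates its own messages into shared events, and the transition logic is written once.

```cpp
#include <cassert>
#include <optional>
#include <string>

// Hypothetical protocol-neutral call states and events.
enum class CallState { Idle, Connecting, Connected, Ended };
enum class CallEvent { Start, ParticipantJoined, Hangup };

// One transition table shared by peer-to-peer and group calls.
std::optional<CallState> nextState(CallState state, CallEvent event) {
  switch (state) {
    case CallState::Idle:
      if (event == CallEvent::Start) return CallState::Connecting;
      break;
    case CallState::Connecting:
    case CallState::Connected:
      if (event == CallEvent::ParticipantJoined) return CallState::Connected;
      if (event == CallEvent::Hangup) return CallState::Ended;
      break;
    case CallState::Ended:
      break;  // Terminal state: no further transitions.
  }
  return std::nullopt;  // Event is not valid in this state.
}

// Adapters translate protocol-specific messages (hypothetical strings here)
// into shared events; nothing else in the library sees protocol details.
class SignalingProtocol {
 public:
  virtual ~SignalingProtocol() = default;
  virtual std::optional<CallEvent> decode(const std::string& msg) const = 0;
};

class PeerToPeerProtocol : public SignalingProtocol {
 public:
  std::optional<CallEvent> decode(const std::string& msg) const override {
    if (msg == "offer") return CallEvent::Start;
    if (msg == "answer") return CallEvent::ParticipantJoined;
    if (msg == "hangup") return CallEvent::Hangup;
    return std::nullopt;
  }
};

class GroupProtocol : public SignalingProtocol {
 public:
  std::optional<CallEvent> decode(const std::string& msg) const override {
    if (msg == "join") return CallEvent::Start;
    if (msg == "member_joined") return CallEvent::ParticipantJoined;
    if (msg == "leave") return CallEvent::Hangup;
    return std::nullopt;
  }
};

// The signaling component owns the shared call state for either protocol.
class SignalingComponent {
 public:
  explicit SignalingComponent(const SignalingProtocol& protocol)
      : protocol_(protocol) {}

  // Returns false if the message is unknown or invalid in the current state.
  bool onMessage(const std::string& msg) {
    std::optional<CallEvent> event = protocol_.decode(msg);
    if (!event) return false;
    std::optional<CallState> next = nextState(state_, *event);
    if (!next) return false;
    state_ = *next;
    return true;
  }

  CallState state() const { return state_; }

 private:
  const SignalingProtocol& protocol_;
  CallState state_ = CallState::Idle;
};
```

Because `nextState` knows nothing about wire formats, a feature that reacts to `CallState::Connected` behaves the same way for both call types.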
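For the Media item, a state machine can encode WebRTC’s offer/answer semantics while delegating the actual work to whichever WebRTC version sits underneath. The sketch below models a simplified subset of the signaling states defined by the W3C WebRTC specification (`stable`, `have-local-offer`, `have-remote-offer`, `closed`) and omits provisional answers, rollback, and glare handling. `MediaBackend` and `CountingBackend` are hypothetical names; in practice the backend would wrap a specific WebRTC release, and the intent is that swapping it leaves the state machine untouched as long as those semantics hold.

```cpp
#include <cassert>
#include <string>

// Simplified subset of the WebRTC signaling states.
enum class SdpState { Stable, HaveLocalOffer, HaveRemoteOffer, Closed };
enum class SdpType { Offer, Answer };

// Hypothetical seam: one implementation per WebRTC release the app uses.
class MediaBackend {
 public:
  virtual ~MediaBackend() = default;
  virtual void applyLocal(SdpType type, const std::string& sdp) = 0;
  virtual void applyRemote(SdpType type, const std::string& sdp) = 0;
};

class MediaStateMachine {
 public:
  explicit MediaStateMachine(MediaBackend& backend) : backend_(backend) {}

  // Each setter returns false, without touching the backend, if the
  // transition is invalid in the current state.
  bool setLocalDescription(SdpType type, const std::string& sdp) {
    SdpState next;
    if (type == SdpType::Offer &&
        (state_ == SdpState::Stable || state_ == SdpState::HaveLocalOffer)) {
      next = SdpState::HaveLocalOffer;
    } else if (type == SdpType::Answer && state_ == SdpState::HaveRemoteOffer) {
      next = SdpState::Stable;
    } else {
      return false;
    }
    backend_.applyLocal(type, sdp);
    state_ = next;
    return true;
  }

  bool setRemoteDescription(SdpType type, const std::string& sdp) {
    SdpState next;
    if (type == SdpType::Offer &&
        (state_ == SdpState::Stable || state_ == SdpState::HaveRemoteOffer)) {
      next = SdpState::HaveRemoteOffer;
    } else if (type == SdpType::Answer && state_ == SdpState::HaveLocalOffer) {
      next = SdpState::Stable;
    } else {
      return false;
    }
    backend_.applyRemote(type, sdp);
    state_ = next;
    return true;
  }

  void close() { state_ = SdpState::Closed; }
  SdpState state() const { return state_; }

 private:
  MediaBackend& backend_;
  SdpState state_ = SdpState::Stable;
};

// Stand-in backend that only counts calls; a real one would forward to WebRTC.
class CountingBackend : public MediaBackend {
 public:
  int calls = 0;
  void applyLocal(SdpType, const std::string&) override { ++calls; }
  void applyRemote(SdpType, const std::string&) override { ++calls; }
};
```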
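The SDK item walks through a Flux-style data flow: actions, a central dispatcher, reducers, models, bridges, and the UI. Here is a minimal C++ sketch of that loop for a single hypothetical mute feature. `SetMuted`, `MuteApplied`, `MuteModel`, `reduce`, `Dispatcher`, and `MuteBridge` are illustrative names, not rsys’s API. The reducer is a pure function, the bridge holds the feature’s business logic and dispatches a follow-up action, and every model update reaches all listeners, including the UI.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>
#include <variant>
#include <vector>

// Actions for one hypothetical feature: the user asks to mute, and the
// bridge later reports that the audio device actually changed.
struct SetMuted { bool muted; };
struct MuteApplied { bool muted; };
using Action = std::variant<SetMuted, MuteApplied>;

// The model that listeners (bridge and UI) receive after every action.
struct MuteModel {
  bool requested = false;
  bool applied = false;
};

// Reducer: a pure function from (model, action) to the next model.
MuteModel reduce(MuteModel model, const Action& action) {
  if (const auto* set = std::get_if<SetMuted>(&action)) {
    model.requested = set->muted;
  } else if (const auto* done = std::get_if<MuteApplied>(&action)) {
    model.applied = done->muted;
  }
  return model;
}

// Central dispatcher: routes every action through the reducer, then
// publishes the resulting model to all listeners.
class Dispatcher {
 public:
  using Listener = std::function<void(const MuteModel&)>;

  void addListener(Listener listener) {
    listeners_.push_back(std::move(listener));
  }

  void dispatch(Action action) {
    queue_.push_back(std::move(action));
    if (dispatching_) return;  // The outer dispatch loop drains the queue.
    dispatching_ = true;
    while (!queue_.empty()) {
      Action next = std::move(queue_.front());
      queue_.pop_front();
      model_ = reduce(model_, next);
      for (const auto& listener : listeners_) listener(model_);
    }
    dispatching_ = false;
  }

  const MuteModel& model() const { return model_; }

 private:
  std::vector<Listener> listeners_;
  std::deque<Action> queue_;
  MuteModel model_;
  bool dispatching_ = false;
};

// Bridge: feature-specific business logic. When the requested state differs
// from the applied one, it updates the (simulated) audio device and
// dispatches a follow-up action.
class MuteBridge {
 public:
  explicit MuteBridge(Dispatcher& dispatcher) : dispatcher_(dispatcher) {
    dispatcher_.addListener([this](const MuteModel& m) { onModel(m); });
  }
  int deviceUpdates = 0;

 private:
  void onModel(const MuteModel& model) {
    if (model.requested != model.applied) {
      ++deviceUpdates;  // Stand-in for calling into the audio stack.
      dispatcher_.dispatch(MuteApplied{model.requested});
    }
  }
  Dispatcher& dispatcher_;
};
```

The dispatcher queues actions raised while it is already dispatching, so a bridge’s follow-up action is processed after the current update has reached every listener rather than recursively.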
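The OS item describes generic interfaces whose platform implementations are plugged in through proxy objects. A minimal C++ sketch of that arrangement follows, assuming a hypothetical `VideoEncoderFactory` interface and `VideoEncoderFactoryProxy`. The software factory stands in for whatever a platform such as Android, iOS, MacOS, or Windows would register, typically a hardware-backed encoder, and `"H264"` is just an illustrative codec name.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical generic interfaces that the core codes against.
class VideoEncoder {
 public:
  virtual ~VideoEncoder() = default;
  virtual std::string describe() const = 0;
};

class VideoEncoderFactory {
 public:
  virtual ~VideoEncoderFactory() = default;
  virtual std::unique_ptr<VideoEncoder> create(const std::string& codec) = 0;
};

// Proxy held by the core; the platform layer plugs in its implementation at
// startup. Until then, create() returns nullptr.
class VideoEncoderFactoryProxy : public VideoEncoderFactory {
 public:
  void setImpl(std::unique_ptr<VideoEncoderFactory> impl) {
    impl_ = std::move(impl);
  }
  std::unique_ptr<VideoEncoder> create(const std::string& codec) override {
    return impl_ ? impl_->create(codec) : nullptr;
  }

 private:
  std::unique_ptr<VideoEncoderFactory> impl_;
};

// Example platform plug-in: a software encoder stands in for a
// hardware-backed one supplied by a real platform layer.
class SoftwareEncoder : public VideoEncoder {
 public:
  explicit SoftwareEncoder(std::string codec) : codec_(std::move(codec)) {}
  std::string describe() const override { return "software " + codec_; }

 private:
  std::string codec_;
};

class SoftwareEncoderFactory : public VideoEncoderFactory {
 public:
  std::unique_ptr<VideoEncoder> create(const std::string& codec) override {
    return std::make_unique<SoftwareEncoder>(codec);
  }
};

// Core code depends only on the generic interface, never on a platform.
std::string startVideo(VideoEncoderFactory& factory) {
  std::unique_ptr<VideoEncoder> encoder = factory.create("H264");
  return encoder ? encoder->describe() : "no encoder available";
}
```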

What’s next

Today, our calling platform is significantly smaller and able to scale across many different use cases and platforms. We support calls that millions of people use every day. Our library is part of all our major calling apps, including Messenger, Instagram, Portal, and Workplace chat. Building rsys has been a long journey, and yet, for the people using these apps, it won’t look or feel much different. It will continue to be the same great calling experience that people have come to expect and love. But this is just the beginning.

The work we’ve put into rsys will allow us to continue innovating and scaling our calling experiences as we head into the future. In addition to building a library that’s sustainable for the next decade or more, this work has laid the foundation for cross-app calling across all of our apps. It has also given us the groundwork we’ll need for an environment centered around remote presence.

This work was made possible through collaboration with the client platform teams. We’d like to thank everyone who contributed to rsys, particularly Ed Munoz, Hani Atassi, Alice Meng, Shelly Willard, Val Kozina, Adam Hill, Matt Hammerly, Ondrej Lehecka, Eugene Agafonov, Michael Stella, Cameron Pickett, Ian Petersen, and Mudit Goel for their help with implementation and continued guidance and support.