Many people think of Facebook as just the big blue app, or even as the website, but in recent years we’ve been building a family of apps and services that provide a wide range of ways for people to connect and share. From text to photos, through video and soon VR, the amount of information being generated in the world is only increasing. In fact, the amount of data we need to consider when we serve your News Feed has been growing by about 50 percent year over year — and from what I can tell, our waking hours aren’t keeping up with that growth rate. The best way I can think of to keep pace with this growth is to build intelligent systems that will help us sort through the deluge of content.

To tackle this, Facebook AI Research (FAIR) has been conducting ambitious research in areas like image recognition and natural language understanding. They’ve already published a series of groundbreaking papers in these areas, and today we’re announcing a few more milestones.

Object detection and memory networks

The first of these is in a subset of computer vision known as object detection.

Object detection is hard. Take this photo, for example:

How many zebras do you see in the photo? Hard to tell, right? Imagine how hard this is for a machine, which doesn’t even see the stripes — it sees only pixels. Our researchers have been working to train systems to recognize patterns in the pixels so they can be as good as or better than humans at distinguishing objects in a photo from one another — known in the field as “segmentation” — and then identifying each object. Our latest system, which we’ll be presenting at NIPS next month, can segment images 30 percent faster than most other systems, using 10x less training data.
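To see why counting zebras is hard at the pixel level, consider the classical, non-learned way to split a picture into objects: connected-component labeling on a binary mask. This is a textbook baseline, not FAIR’s system. The mask below is a hypothetical hand-made grid of 1s (foreground) and 0s (background). On a striped animal it fails in exactly the way the photo suggests, because each stripe becomes its own “object.”

```python
from collections import deque


def label_components(mask):
    """Label 4-connected regions of truthy pixels in a 2D grid.

    Returns (labels, count). labels[r][c] is 0 for background and
    1..count for the region that pixel belongs to.
    """
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                # Found an unlabeled foreground pixel: start a new region
                # and flood-fill it breadth-first.
                count += 1
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


# Hypothetical mask: three vertical stripes that might belong to one animal.
stripes = [
    [1, 0, 1, 0, 1],
    [1, 0, 1, 0, 1],
    [1, 0, 1, 0, 1],
]
_, n_regions = label_components(stripes)
print(n_regions)  # 3
```

The pixel rule reports three regions for what could be a single zebra. That gap is what systems trained to recognize larger patterns in the pixels aim to close.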

Our next milestone is in natural language understanding, with new developments in a technology called Memory Networks (aka MemNets). MemNets add a type of short-term memory to the convolutional neural networks that power our deep-learning systems, allowing those systems to understand language more like a human would. Earlier this year, I showed you this demo of MemNets at work, reading and then answering questions about a short synopsis of The Lord of the Rings. Now we’ve scaled this system from reading and answering questions about tens of lines of text to performing the same task on data sets of more than 100K questions, an order of magnitude larger than previous benchmarks.
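The core of a memory-network “read” can be sketched in a few lines. The idea is to store each sentence as a vector, score every stored sentence against the question, turn the scores into attention weights with a softmax, and return the weighted sum. This is a minimal sketch in the spirit of published memory-network models, not FAIR’s production code. It assumes raw bag-of-words vectors over a tiny hypothetical vocabulary in place of learned embeddings, skips training entirely, and stops at a single read, with no final answer layer.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def memory_read(query, keys, values):
    """One memory 'hop': attend over stored items and return the
    attention-weighted sum of their value vectors, plus the weights."""
    weights = softmax([dot(query, k) for k in keys])
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return output, weights


# Hypothetical toy vocabulary; a trained model would learn dense embeddings.
VOCAB = ["frodo", "sam", "has", "ring", "in", "shire"]


def bag_of_words(sentence):
    words = set(sentence.lower().rstrip("?.").split())
    return [1.0 if w in words else 0.0 for w in VOCAB]


story = ["Frodo has the ring.", "Sam is in the Shire."]
memory = [bag_of_words(s) for s in story]
question = bag_of_words("Who has the ring?")
answer_vec, weights = memory_read(question, memory, memory)
```

Here the question shares two words with the first sentence and none with the second, so roughly 88 percent of the attention lands on “Frodo has the ring,” and the “frodo” component of the read-out outweighs the “sam” component.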

These advancements in computer vision and natural language understanding are exciting on their own, but where it gets really exciting is when you begin to combine them. Take a look:

In this demo of the system we call VQA, or visual Q&A, you can see the promise of what happens when you combine MemNets with image recognition: We’re able to give people the ability to ask questions about what’s in a photo. Think of what this might mean to the hundreds of millions of people around the world who are visually impaired in some way. Instead of being left out of the experience when friends share photos, they’ll be able to participate. This is still very early in its development, but the promise of this technology is clear.

Prediction and planning

There are also some bigger, longer-term challenges we’re working on in AI. Some of these include unsupervised and predictive learning, where the systems can learn through observation (instead of through direct instruction, which is known as supervised learning) and then begin to make predictions based on those observations. This is something you and I do naturally — for example, none of us had to go to a university to learn that a pen will fall to the ground if you push it off your desk — and it’s how humans do most of their learning. But computers still can’t do this — our advances in computer vision and natural language understanding are still being driven by supervised learning.

The FAIR team recently started to explore these models, and you can see some of our early progress demonstrated below. The team has developed a system that can “watch” a series of visual tests — in this case, sets of precariously stacked blocks that may or may not fall — and predict the outcome. After just a few months’ work, the system can now predict correctly 90 percent of the time, which is better than most humans.
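The FAIR system learns to answer the “will it fall?” question from observation alone. For contrast, here is the kind of hand-written physics rule such a model has to pick up implicitly. This is a simplified classical check, not FAIR’s model. It assumes a 2D tower of rectangular blocks of uniform density and equal height, one block per level, with mass proportional to width. A tower stands if, at every contact, the combined center of mass of everything above lies within the region where the two blocks touch.

```python
from dataclasses import dataclass


@dataclass
class Block:
    x: float      # horizontal center of the block
    width: float  # mass is assumed proportional to width


def tower_stands(blocks):
    """blocks[0] rests on the ground; blocks[-1] is the top of the stack."""
    for i in range(len(blocks) - 1):
        below, above = blocks[i], blocks[i + 1]
        # Horizontal interval where the upper block actually touches the lower one.
        lo = max(below.x - below.width / 2, above.x - above.width / 2)
        hi = min(below.x + below.width / 2, above.x + above.width / 2)
        if lo > hi:
            return False  # no contact at all
        # Combined center of mass of every block resting on this contact.
        stack = blocks[i + 1:]
        total_mass = sum(b.width for b in stack)
        com = sum(b.x * b.width for b in stack) / total_mass
        if not (lo <= com <= hi):
            return False
    return True
```

For example, `tower_stands([Block(0, 1), Block(0.4, 1)])` is `True`, but adding `Block(0.8, 1)` on top makes it `False`: each block overhangs the one below by a safe-looking amount, yet together they shift the center of mass past the bottom edge. That is the kind of interaction people often misjudge at a glance.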

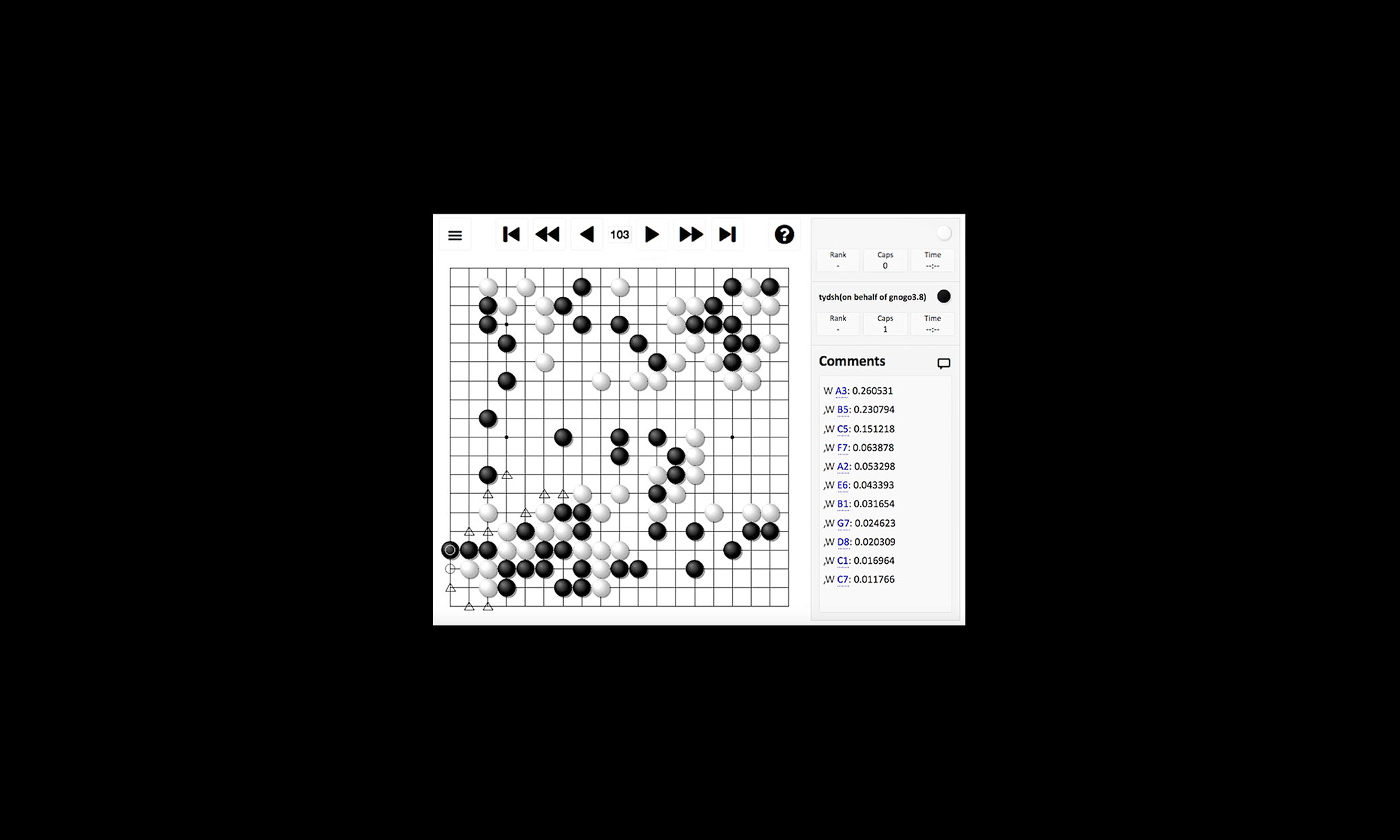

Another area of longer-term research is teaching our systems to plan. One of the things we’ve built to help do this is an AI player for the board game Go. Using games to train machines is a pretty common approach in AI research. In the last couple of decades, AI systems have become stronger than humans at games like checkers, chess, and even Jeopardy. But despite close to five decades of work on AI Go players, the best humans are still better than the best AI players. This is due in part to the sheer number of possible variations in Go. After the first two moves in a chess game, for example, there are 400 possible board positions. In Go, there are close to 130,000.
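Both figures come from simple counting. In chess, each side has 20 legal opening moves, and White’s first move never restricts Black’s reply, so two moves give 20 × 20 positions. In Go, Black can place the first stone on any of the 361 intersections of a 19 × 19 board and White on any of the remaining 360. The sketch below ignores passing and board symmetries.

```python
def chess_positions_after_two_moves():
    # From the starting position each side has 20 legal moves:
    # 8 pawns x 2 options (one or two squares) + 2 knights x 2 squares.
    first_moves_per_side = 8 * 2 + 2 * 2
    return first_moves_per_side * first_moves_per_side


def go_positions_after_two_moves(board_size=19):
    # Black may play any empty intersection, then White any remaining one.
    points = board_size * board_size
    return points * (points - 1)


print(chess_positions_after_two_moves())  # 400
print(go_positions_after_two_moves())     # 129960
```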

We’ve been working on our Go player for only a few months, but it’s already on par with the other AI-powered systems that have been published, and it’s already as good as a very strong human player. We’ve achieved this by combining the traditional search-based approach — modeling out each possible move as the game progresses — with a pattern-matching system built by our computer vision team. The best human Go players often take advantage of their ability to recognize patterns on the board as the game evolves, and with this approach our AI player is able to mimic that ability — with very strong early results.
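The general idea of pairing search with pattern recognition can be illustrated on a much smaller game. The sketch below uses tic-tac-toe rather than Go and is not FAIR’s implementation. `pattern_score` is a hypothetical hand-written stand-in for the learned pattern-matching system, and the parameter `k` is an assumption of this sketch. The pattern scorer ranks candidate moves, and the search (negamax, a form of minimax) only explores the `k` best-ranked moves at each turn instead of every legal move.

```python
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]
CORNERS = (0, 2, 6, 8)


def winner(board):
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def other(player):
    return "O" if player == "X" else "X"


def completes_line(board, move, player):
    trial = list(board)
    trial[move] = player
    return winner(trial) == player


def pattern_score(board, move, player):
    """Hypothetical stand-in for a learned pattern recognizer."""
    score = 2 if move == 4 else (1 if move in CORNERS else 0)
    if completes_line(board, move, player):
        score += 10  # winning pattern
    if completes_line(board, move, other(player)):
        score += 5   # blocks the opponent's winning pattern
    return score


def ranked_moves(board, player):
    moves = [i for i in range(9) if board[i] == " "]
    return sorted(moves, key=lambda m: pattern_score(board, m, player), reverse=True)


def negamax(board, player, k):
    """Value for `player` to move: +1 win, 0 draw, -1 loss (top-k moves only)."""
    if winner(board) is not None:
        return -1  # the previous player just completed a line
    moves = ranked_moves(board, player)
    if not moves:
        return 0  # board full, draw
    best = -2
    for m in moves[:k]:
        board[m] = player
        best = max(best, -negamax(board, other(player), k))
        board[m] = " "  # undo the move
    return best


def best_move(board, player, k=3):
    """Return (move, value) after searching only the k best-ranked moves."""
    best, best_val = None, -2
    for m in ranked_moves(board, player)[:k]:
        board[m] = player
        val = -negamax(board, other(player), k)
        board[m] = " "
        if val > best_val:
            best, best_val = m, val
    return best, best_val
```

With X on squares 0 and 1 and O on 3 and 4, `best_move(board, "X")` finds the immediate win on square 2. Restricting search to well-ranked moves is what keeps the tree small. In Go, where there are vastly more legal moves, the quality of that ranking matters far more.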

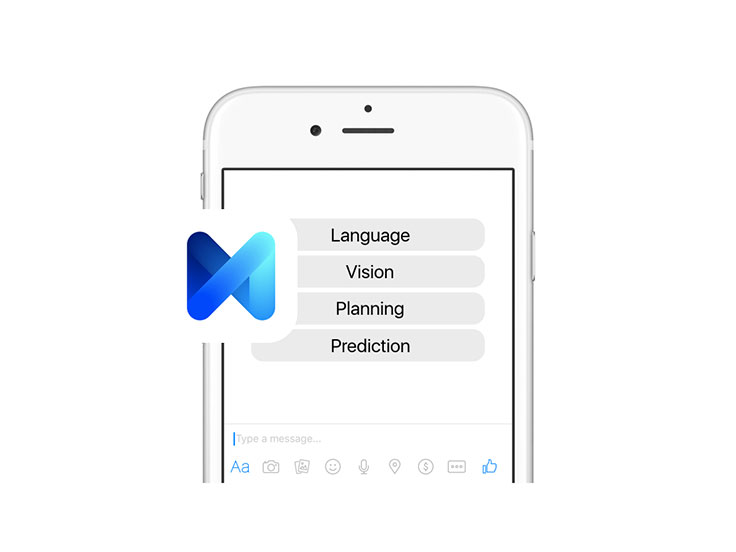

So what happens when you start to put all this together? Facebook is currently running a small test of a new AI assistant called M. Unlike other machine-driven services, M takes things further: It can actually complete tasks on your behalf. It can purchase items; arrange for gifts to be delivered to your loved ones; and book restaurant reservations, travel arrangements, appointments, and more. This is a huge technology challenge — it’s so hard that, starting out, M is a human-trained system: Human operators evaluate the AI’s suggested responses, and then they produce responses while the AI observes and learns from them.

We’d ultimately like to scale this service to billions of people around the world, but for that to be possible, the AI will need to be able to handle the majority of requests itself, with no human assistance. And to do that, we need to build all the different capabilities described above — language, vision, prediction, and planning — into M, so it can understand the context behind each request and plan ahead at every step of the way. This is a really big challenge, and we’re just getting started. But the early results are promising. For example, we recently deployed our new MemNets system into M, and it has accelerated M’s learning: When someone asks M for help ordering flowers, M now knows that the first two questions to ask are “What’s your budget?” and “Where are you sending them?”

One last point here: Some of you may look at this and say, “So what? A human could do all of those things.” And you’re right, of course — but most of us don’t have dedicated personal assistants. And that’s the “superpower” offered by a service like M: We could give every one of the billions of people in the world their own digital assistants so they can focus less on day-to-day tasks and more on the things that really matter to them.

Our AI research — along with our work to explore radical new approaches to connectivity and to enable immersive new shared experiences with Oculus VR — is a long-term endeavor. It will take many years of hard work to see all this through, but if we can get these new technologies right, we will be that much closer to connecting the world.

To learn more about how we’re approaching AI research and the impact it’s already having, check out this video.