Facebook switched all its production servers to HipHop in early 2010 and released the project’s source code at the same time. At the time of the switch, HipHop reduced our average CPU usage by 50%. The six months after its release saw an additional 1.8x performance improvement, and in the past six months the team, in conjunction with the open source community, has made an additional 1.7x improvement.

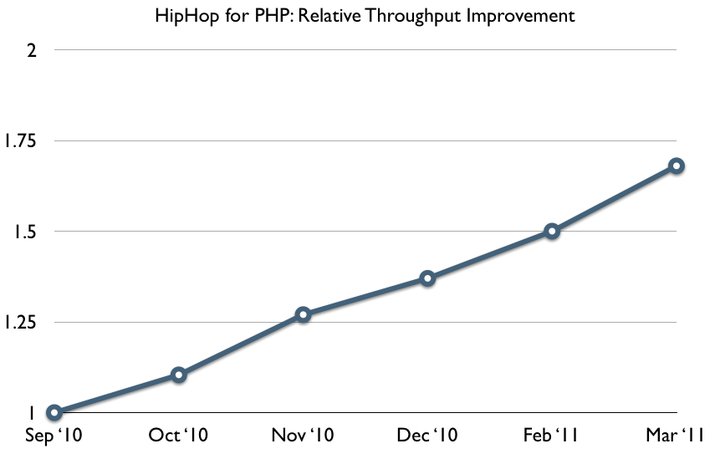

The following chart shows the relative throughput improvements on our Web servers over the past several months (excluding the impact of our product changes):

Throughput is one of the most important metrics of server efficiency. It is defined as the average rate of Web requests successfully served over a given period of time. A 1.7x improvement in throughput therefore means that, after the work of the past several months, HipHop can serve roughly 70% more traffic on exactly the same hardware infrastructure.
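To make the arithmetic concrete, here is a small Python sketch of the throughput definition above. The request counts and the `throughput` helper are made up for illustration; only the 1.7x ratio comes from this post.

```python
def throughput(requests_served: int, seconds: float) -> float:
    """Average rate of successfully served requests over a time window."""
    return requests_served / seconds


# Hypothetical numbers: the same machine, measured over the same one-hour window.
before = throughput(3_600_000, 3600)  # 1000 requests per second
after = throughput(6_120_000, 3600)   # 1700 requests per second

speedup = after / before                 # relative throughput
extra_traffic_pct = (speedup - 1) * 100  # extra traffic on the same hardware

print(f"{speedup:.1f}x throughput = {extra_traffic_pct:.0f}% more traffic")
# prints: 1.7x throughput = 70% more traffic
```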

These performance improvements come from various optimization techniques in the HipHop compiler and the run-time system, including:

Almost-Serialization-Free APC

HipHop comes with an implementation of Alternative PHP Cache (APC), which uses Intel Threading Building Blocks for low lock contention. Semantically, an object is serialized when it is stored into APC and unserialized when it is fetched. However, serialization and unserialization are costly operations that commonly dominate the cost of the APC data fetch itself. We therefore reworked the APC implementation in HipHop, getting rid of almost all serialization and unserialization operations while keeping the semantics the same as before.
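The idea of dropping serialization while keeping store/fetch semantics can be illustrated with a small Python sketch. This is not HipHop's implementation, which is written in C++ and not described in detail here; `SerializingCache`, `SharingCache`, and `behaves_like_a_value_store` are hypothetical names, and `pickle` merely stands in for PHP serialization. The sketch only shows that a cache can skip the byte-string round trip and still hand every caller an independent value.

```python
import copy
import pickle

# Types whose values can never change, so sharing them is safe.
_IMMUTABLE_TYPES = (int, float, bool, str, bytes, type(None))


class SerializingCache:
    """Baseline: serialize on store, unserialize on every fetch."""

    def __init__(self):
        self._data = {}

    def store(self, key, value):
        self._data[key] = pickle.dumps(value)

    def fetch(self, key):
        return pickle.loads(self._data[key])


class SharingCache:
    """Hypothetical variant: same observable behavior, no byte-string round trip."""

    def __init__(self):
        self._data = {}

    def store(self, key, value):
        # Take a private snapshot so later changes by the caller do not leak in.
        self._data[key] = copy.deepcopy(value)

    def fetch(self, key):
        value = self._data[key]
        if isinstance(value, _IMMUTABLE_TYPES):
            return value  # immutable, so it is handed out without copying
        # Mutable values are copied so callers cannot alter the cached snapshot.
        return copy.deepcopy(value)


def behaves_like_a_value_store(cache):
    """Check the semantics both caches must share."""
    profile = {"name": "alice", "friends": [1, 2, 3]}
    cache.store("profile", profile)
    profile["friends"].append(4)   # mutate the original after storing
    first = cache.fetch("profile")
    first["name"] = "bob"          # mutate a fetched value
    second = cache.fetch("profile")
    return second == {"name": "alice", "friends": [1, 2, 3]}


print(behaves_like_a_value_store(SerializingCache()))  # True
print(behaves_like_a_value_store(SharingCache()))      # True
```

In this sketch, scalars and strings are returned without any copy at all, while containers are still copied on fetch. A production design would likely go further, for example by copying lazily on first write.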

Faster Serialization and JSON Encoding

Although we got rid of most serialization cost in APC, objects still need to be serialized or JSON-encoded in order to transfer them over the wire. To make things faster, we optimized various aspects of these operations, including UTF8/UTF16 conversions, object property accesses, number parsing, and so forth.

Less Reference Counting

In PHP, every string, array, or object has a reference count. The count is incremented when a variable is bound to the value, and decremented when the variable goes out of scope or is bound to something else. It may sound surprising that such simple operations account for a sizable chunk of CPU time, but they do, because they are executed so often. We implemented several compiler and run-time optimizations that skip reference counting when we can infer it is not necessary.
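As a rough illustration of why these operations add up, the following Python sketch models explicit reference counts and tallies every increment and decrement. The `Counted` class and both functions are hypothetical, and the "borrowed parameter" pattern shown is just one common way to elide count updates; it is not a description of the specific optimizations in HipHop.

```python
class Counted:
    """A value with an explicit reference count, plus a tally of count updates."""

    updates = 0  # total increments and decrements across all values

    def __init__(self, payload):
        self.payload = payload
        self.refcount = 0

    def incref(self):
        self.refcount += 1
        Counted.updates += 1

    def decref(self):
        self.refcount -= 1
        Counted.updates += 1


def length_naive(value):
    # Binding the parameter increments the count; leaving scope decrements it.
    value.incref()
    try:
        return len(value.payload)
    finally:
        value.decref()


def length_borrowed(value):
    # The caller's variable keeps the value alive for the whole call,
    # so the increment/decrement pair can be skipped safely.
    return len(value.payload)


s = Counted("hello")
s.incref()  # the value is bound to the variable `s`

Counted.updates = 0
for _ in range(1000):
    length_naive(s)
naive_updates = Counted.updates  # 2000 count updates

Counted.updates = 0
for _ in range(1000):
    length_borrowed(s)
borrowed_updates = Counted.updates  # 0 count updates

print(naive_updates, borrowed_updates)
```

Both versions return the same result and leave the count unchanged afterwards; the second simply avoids two writes per call on a hot path.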

More Compact Binary Code

Facebook has a huge amount of PHP code, which HipHop compiles into a large executable that is deployed to our Web servers. Because of the sheer size of the executable, instruction cache misses become a significant factor in Web server performance. Reducing the size of the generated binary, especially on hot execution paths, can therefore often improve performance. We consolidated and even uninlined various parts of the generated code, including stack frame management, memory allocation, and method invocation, to make the executable smaller and faster.

Improved Memory Allocation

jemalloc, a more performant malloc implementation, has helped improve our ability to monitor and to profile memory usage in HipHop. With its help we identified and optimized several places where large chunks of memory were frequently allocated and deallocated.

Faster Compilation

Besides optimizing the compiled code, a lot of effort has been spent on improving the compiler itself. Several phases in the compiler, including parsing, optimization, and code generation, are now parallelized. Hyves contributed changes to the generated C++ code to make it compile faster without losing any run-time efficiency. We can build a binary that is more than 1 GB (after stripping debug information) in about 15 minutes, with the help of distcc. Although faster compilation does not directly contribute to run-time efficiency, it helps make the deployment process better.

It has been a wonderful and rewarding experience working on improving server efficiency. This work helps Facebook and everyone else using HipHop scale better and deliver a more responsive user experience.