Streaming 360 video at 6k, which has twice the pixels of industry-standard 4k, is not a trivial task, especially under the tight bandwidth and computing power budgets of mobile headset users. Last year, we developed dynamic streaming, a view-dependent adaptive bit rate streaming technology that helps stream 360 video (including 6k) at a consistently high quality. Dynamic streaming optimizes video quality by serving the highest-quality video in the active field of view and reducing the quality in the periphery. It was a successful tactic, reducing the bit rate by double-digit percentages when it first launched.

Over the past year, we worked to optimize the experience, rethinking every stage of the pipeline and looking for places where new technology could make both image quality and stream quality even better. From exploring new geometries to being more selective in stream choice, we were able to decrease bit rates by up to 92% in some cases and decrease interruptions by more than 80%. This post discusses the technology behind those improvements, including new variable offset cubemaps for encoding and new calculations for gauging the effective quality of a 360 stream, all researched over the last year and announced today.

Encoding with variable offsets

The first optimization leverages a familiar concept — geometry. Over the past year, we tested offset cubemaps, a new video layout that brings even more clarity to the field of view with smooth quality distribution. With offset cubemaps, the 360 image is mapped to a cube with the camera offset toward the back of the cube. By doing this, we can allocate more pixels in the direction of the view (viewport) when appropriate, decreasing the overall resolution of the video without decreasing the quality of the viewport.

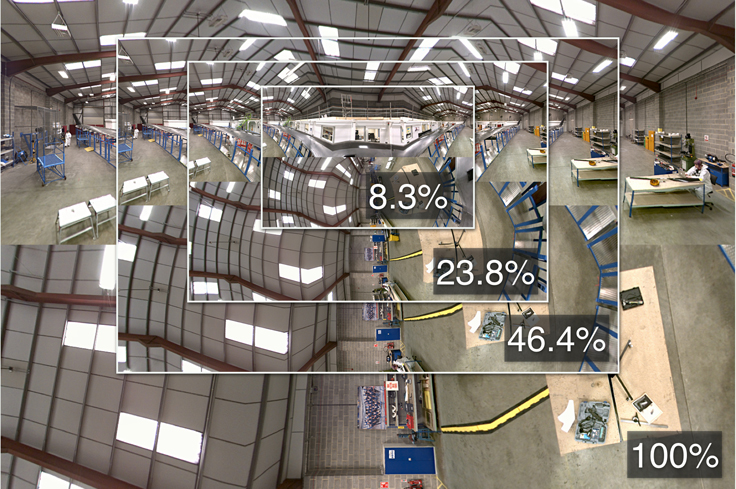

In effect, this allows us to change the resolution makeup of the stream on the fly: we use a different offset depending on the viewing and network conditions. In the image below, each photo has been optimized to a different degree. They all have the same quality in the viewport, but progressively lower quality in the periphery. This gives us options not only to keep streaming as the viewport moves around, but also to keep the view quality high even as network conditions change or the viewer moves.

Here's how it plays out in real life: If the input video is 4k and we can stream 4k, we'll stream it with zero offset. But in poor network conditions, we can still stream the same quality in the viewport because we optimized that view and not the rest of the video. If the network improves when viewports are switched, the user won't see as dramatic a change in quality between viewports. The offset cubemap gives a more seamless experience.
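To make the geometry concrete, here is a minimal sketch of one way an offset mapping can be written. It is not taken from our encoder. It assumes the cube surrounds a unit sphere centered at the origin and that the camera sits at `-offset * forward`, toward the back. The function names and the coordinate convention are hypothetical and chosen only for illustration.

```python
import math


def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def texture_to_view_direction(q, forward, offset):
    """Map a direction on the encoded cube (q) to the scene direction it shows.

    Assumed geometry: the camera sits at -offset * forward, and the scene
    direction is the ray from the camera through the point q on the unit
    sphere, which simplifies to normalize(q + offset * forward).
    """
    q = normalize(q)
    f = normalize(forward)
    return normalize(tuple(qc + offset * fc for qc, fc in zip(q, f)))


def angle_between_deg(a, b):
    """Angle between two 3-vectors, in degrees."""
    a, b = normalize(a), normalize(b)
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.degrees(math.acos(dot))


if __name__ == "__main__":
    forward = (0.0, 0.0, 1.0)
    side = (1.0, 0.0, 0.0)  # 90 degrees from forward on the encoded cube
    for offset in (0.0, 0.5, 0.7):
        view_dir = texture_to_view_direction(side, forward, offset)
        print(offset, round(angle_between_deg(view_dir, forward), 1))
```

Under these assumptions, a zero offset leaves every direction unchanged. With an offset of 0.5, the part of the encoded cube within 90 degrees of forward covers only about 63.4 degrees of the scene, so the same number of pixels is spent on a narrower slice of the view, which is where the extra viewport detail comes from.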

Improving reliability with careful stream selection

With the amount of data that is flowing in a 360 video, buffering is a regular concern. With a series of tweaks we were able to decrease the number of interruptions by more than 80%, making the overall experience smoother.

The biggest change is that we now look at six factors instead of four when computing stream quality: resolution, video quality, current view orientation, stream viewport direction, length of the offset, and field of view. For the next video chunk, we have to consider hundreds of different possible streams to serve the viewer. We take all those factors, calculate the effective quality of each potential stream, and then compare them to pick the best one to serve under the current network conditions.
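The selection step can be sketched roughly as follows. The scoring formula is a stand-in, not our production calculation: it assumes a one-dimensional (yaw-only) view, models the offset as a pixel-density multiplier of `1 + offset * cos(t) / sqrt(1 - (offset * sin(t))^2)` at angle `t` from the stream's viewport direction, and averages that across the field of view. The `Stream` fields, units, and function names are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Stream:
    name: str
    bitrate_kbps: float
    resolution: int          # hypothetical unit: cube face width in pixels
    video_quality: float     # hypothetical encoder quality score in [0, 1]
    viewport_yaw_deg: float  # direction the stream is optimized for
    offset: float            # offset length, 0 <= offset < 1


def angular_distance_deg(a, b):
    """Smallest angle between two yaw values, in [0, 180]."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def magnification(theta_deg, offset):
    """Assumed pixel-density multiplier at theta from the stream's viewport."""
    theta = math.radians(theta_deg)
    return 1.0 + offset * math.cos(theta) / math.sqrt(
        1.0 - (offset * math.sin(theta)) ** 2
    )


def effective_quality(stream, view_yaw_deg, fov_deg, samples=5):
    """Average density across the field of view, scaled by resolution and quality."""
    total = 0.0
    for i in range(samples):
        yaw = view_yaw_deg - fov_deg / 2 + fov_deg * i / (samples - 1)
        theta = angular_distance_deg(yaw, stream.viewport_yaw_deg)
        total += magnification(theta, stream.offset)
    return stream.resolution * stream.video_quality * total / samples


def pick_stream(streams, view_yaw_deg, fov_deg, bandwidth_kbps):
    """Best effective quality among streams that fit the bandwidth budget."""
    affordable = [s for s in streams if s.bitrate_kbps <= bandwidth_kbps]
    if not affordable:
        # Nothing fits: fall back to the cheapest stream.
        return min(streams, key=lambda s: s.bitrate_kbps)
    return max(affordable, key=lambda s: effective_quality(s, view_yaw_deg, fov_deg))


CANDIDATES = [
    Stream("full", 8000, 1024, 1.0, 0.0, 0.0),
    Stream("front", 4000, 768, 1.0, 0.0, 0.7),
    Stream("back", 4000, 768, 1.0, 180.0, 0.7),
]
```

With these made-up candidates, a viewer looking forward on a 5,000 kbps connection gets the `front` stream, while a viewer looking sideways with plenty of bandwidth gets the zero-offset `full` stream, because neither offset stream favors that direction.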

Some of the other optimizations involve how we consider and treat chunks of video. First, we estimate bandwidth over shorter time intervals and measure CDN response time and network staleness. When streaming shorter chunks of video, we want more frequent updates on network conditions; for example, we want to know whether bandwidth is trending downward. When we don't receive any data for some time, we adjust future plans to prevent buffering delays.
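A minimal sketch of this kind of estimator is shown below. The class name, the exponential smoothing, the "three strictly falling samples" trend rule, and the halving of the budget when data goes stale are all illustrative assumptions, not the values or rules used in our player.

```python
from collections import deque


class BandwidthEstimator:
    """Short-interval bandwidth tracking with trend and staleness checks."""

    def __init__(self, window=5, stale_after_s=2.0, alpha=0.5):
        self.samples = deque(maxlen=window)  # (timestamp_s, kbps)
        self.stale_after_s = stale_after_s
        self.alpha = alpha                   # smoothing weight for new samples
        self.estimate_kbps = None

    def add_sample(self, timestamp_s, num_bytes, duration_s):
        """Record one short measurement interval (duration_s must be > 0)."""
        kbps = num_bytes * 8 / 1000 / duration_s
        self.samples.append((timestamp_s, kbps))
        if self.estimate_kbps is None:
            self.estimate_kbps = kbps
        else:
            self.estimate_kbps = (
                self.alpha * kbps + (1 - self.alpha) * self.estimate_kbps
            )

    def is_decreasing(self):
        """True when the recent samples are strictly falling."""
        rates = [rate for _, rate in self.samples]
        return len(rates) >= 3 and all(b < a for a, b in zip(rates, rates[1:]))

    def is_stale(self, now_s):
        """True when no measurement has arrived for stale_after_s seconds."""
        return not self.samples or now_s - self.samples[-1][0] > self.stale_after_s

    def planning_bandwidth_kbps(self, now_s):
        """Conservative budget used when planning which chunk to fetch next."""
        if self.estimate_kbps is None:
            return 0.0
        budget = self.estimate_kbps
        if self.is_decreasing():
            budget = min(budget, self.samples[-1][1])  # trust the latest sample
        if self.is_stale(now_s):
            budget *= 0.5  # assumed penalty when the network has gone quiet
        return budget
```

The point of the design is that the planner never reads the smoothed estimate directly. It asks for a planning budget, which drops as soon as a downward trend or a gap in measurements shows up.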

We also play the currently fetching chunk. It's probably obvious, but we don't have to wait for all the data to download before a chunk starts playing. When fetching a new chunk, there are two cases: there is a fallback video chunk, or there is no fallback chunk. We decided to play the currently fetching chunk only when we don't have a fallback chunk, so we can continue playing without interruptions. But when we have a fully available fallback chunk and we're still fetching a chunk that we planned to use as a replacement, we use the fallback instead and cancel the replacement chunk.
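The decision described above fits in a few lines. This is a sketch of the rule as stated in this post, with hypothetical names and return values; a real player would also track buffer levels and timing.

```python
def choose_playback(fallback_ready, replacement_complete):
    """Decide what to play next and whether to cancel the in-flight fetch.

    Returns (source, cancel_replacement_fetch), where source is one of
    "replacement", "fallback", or "replacement_in_progress".
    """
    if replacement_complete:
        # The replacement finished downloading in time, so use it.
        return ("replacement", False)
    if fallback_ready:
        # A fully available fallback beats a partially fetched replacement.
        return ("fallback", True)
    # No fallback: start playing the chunk while it is still downloading.
    return ("replacement_in_progress", False)
```

Only the last branch plays a partially downloaded chunk, which matches the rule that we do so only when there is no fallback to lean on.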

Prefetching initialization and index chunks has helped as well. Each DASH (Dynamic Adaptive Streaming over HTTP) stream usually contains two specific chunks that are different from normal media chunks: the initialization chunk and the index chunk. Initialization chunks contain initialization data for the codec that is prepended to every media chunk, and index chunks contain the seek map and the exact byte range data for every chunk in the representation. To switch to a new stream, we need both of these chunks. We decided to prefetch them in the background for all dynamic streaming streams in a DASH manifest, so whenever the player needs to switch to a new stream, it doesn't need to spend time fetching them.
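As a rough illustration of the prefetching idea, the sketch below starts background fetches for the initialization and index chunks of every representation and hands back the cached bytes on demand. The `HeaderChunkCache` class, the `fetch` callable, and the shape of the `representations` dictionary are assumptions made for this example and do not mirror any particular player or DASH library.

```python
from concurrent.futures import ThreadPoolExecutor


class HeaderChunkCache:
    """Prefetches init and index chunks so stream switches don't wait on them."""

    def __init__(self, fetch, max_workers=4):
        self._fetch = fetch  # hypothetical callable: url -> bytes
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._futures = {}   # (representation_id, kind) -> Future

    def prefetch(self, representations):
        """Start background fetches; chunks already requested are skipped."""
        for rep_id, urls in representations.items():
            for kind in ("init", "index"):
                key = (rep_id, kind)
                if key not in self._futures:
                    self._futures[key] = self._pool.submit(self._fetch, urls[kind])

    def get(self, rep_id, kind):
        """Return the chunk bytes, blocking only if the fetch is still running."""
        return self._futures[(rep_id, kind)].result()

    def close(self):
        """Wait for outstanding fetches and release the worker threads."""
        self._pool.shutdown(wait=True)
```

A player would call `prefetch` once after parsing the manifest and then call `get` at switch time, by which point the data is usually already in memory.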

Boosting player performance

To make sure the changes in stream reliability come across for the viewer, we also had to optimize the player. We did a lot of that by reducing the computational needs of the player. Between filtering out some viewport stream candidates early, precomputing expensive calculations, and using lookup tables when possible, we were able to spread out the workload or parallelize it efficiently.
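Two of these techniques, early candidate filtering and lookup tables, can be illustrated with a small sketch. The 90-degree cutoff, the whole-degree cosine table, and the function names are assumptions picked for the example and are not the thresholds or tables used in our player.

```python
import math

# Precomputed once: cosine of every whole-degree angle from 0 to 180.
COS_TABLE = [math.cos(math.radians(d)) for d in range(181)]


def angular_distance_deg(a, b):
    """Smallest angle between two yaw values, in [0, 180]."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def filter_candidates(stream_yaws_deg, view_yaw_deg, max_angle_deg=90.0):
    """Drop streams whose viewport points too far from the current view."""
    return [
        yaw
        for yaw in stream_yaws_deg
        if angular_distance_deg(yaw, view_yaw_deg) <= max_angle_deg
    ]


def alignment(stream_yaw_deg, view_yaw_deg):
    """Cosine of the angle between stream and view, read from the table."""
    return COS_TABLE[round(angular_distance_deg(stream_yaw_deg, view_yaw_deg))]
```

Filtering first means the more expensive per-stream scoring runs on a fraction of the candidates, and the table replaces a trigonometric call per candidate with an index lookup at the cost of whole-degree precision.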

What's next

We're currently working on putting the end-to-end dynamic streaming optimizations into production, and many of the benefits have already landed. We're really passionate about finding new ways to make 360 content seamless and easily accessible. Keep up with our latest efforts here on code.facebook.com or on the Facebook Engineering page.