We were excited to roll out 360 video for News Feed recently. With 360 video, you can choose which angle you want to view the video from. It’s like turning your head to look around a room — you control the perspective.

It’s an immersive addition to the ways that people can share and connect on Facebook, but it was a launch with an abundance of technical challenges to overcome. From mammoth incoming raw file sizes to warped, stitched-together imagery, 360 video is a generous playground for an engineer looking to solve a broad set of challenges. Here’s how we approached building this experience.

The problem with equirectangular layouts

The first thing we wanted to tackle was a drawback of the traditional layout used for 360 videos, called an equirectangular layout. The problem is that this layout can contain redundant information at either end. Think of it in terms of a map of the globe. Antarctica is really a circular landmass, not a drawn-out linear one. How you display the map affects how much extra Antarctica there is in the image.

In 360 video, instead of a warped landmass, the redundancy shows up as runs of neighboring same-color pixels, or as pixels that are indistinguishable at render time, like in this image:

We found our solution to this problem by remapping equirectangular projection layouts to cube maps.
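To put a rough number on that redundancy, the sketch below estimates how heavily each row of an equirectangular frame oversamples the sphere. It relies only on the geometry of the projection (a circle of latitude shrinks with the cosine of the latitude). The function names and the 2160-row frame height are illustrative assumptions, not values taken from the production filter.

```python
import math

def row_oversampling(row: int, height: int) -> float:
    """Horizontal oversampling of one equirectangular row relative to the equator."""
    # Latitude at the centre of the row, from +pi/2 (top) to -pi/2 (bottom).
    latitude = math.pi / 2 - (row + 0.5) * math.pi / height
    # A circle of latitude has circumference proportional to cos(latitude),
    # yet every row of the frame stores the same number of pixels.
    return 1.0 / math.cos(latitude)

def mean_useful_fraction(height: int) -> float:
    """Average of cos(latitude) over all rows; approaches 2/pi for tall frames."""
    return sum(1.0 / row_oversampling(r, height) for r in range(height)) / height

height = 2160  # assumed example: a 4320x2160 equirectangular frame
print(row_oversampling(height // 2, height))  # a row next to the equator
print(row_oversampling(0, height))            # the top row
print(mean_useful_fraction(height))
```

Measured against the sampling density at the equator, only about 2/π (roughly 64 percent) of the stored samples carry independent detail, and the waste is concentrated in the rows nearest the poles.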

Cube map solution

A cube map projection combines the six faces of a cube into one layout. Cube maps have been used in computer graphics for a long time, mostly to create skyboxes (six-sided cubes that are drawn behind all graphics) and reflections. There are a few benefits of using cube maps for videos:

- Cube maps don’t have geometry distortion within the faces. Each face looks exactly as it would if you were looking at the scene head-on with a perspective camera, which applies perspective to an object and its surroundings but keeps straight lines straight. This is important because video codecs model motion as straight-line vectors, which is why cube maps encode better than equirectangular layouts, where motion follows curved paths.

- Cube maps’ pixels are well-distributed — each face is equally important. There are no poles as in equirectangular projection, which contains redundant information.

- Cube maps are easier to project. Each face is mapped only on the corresponding face of the cube.

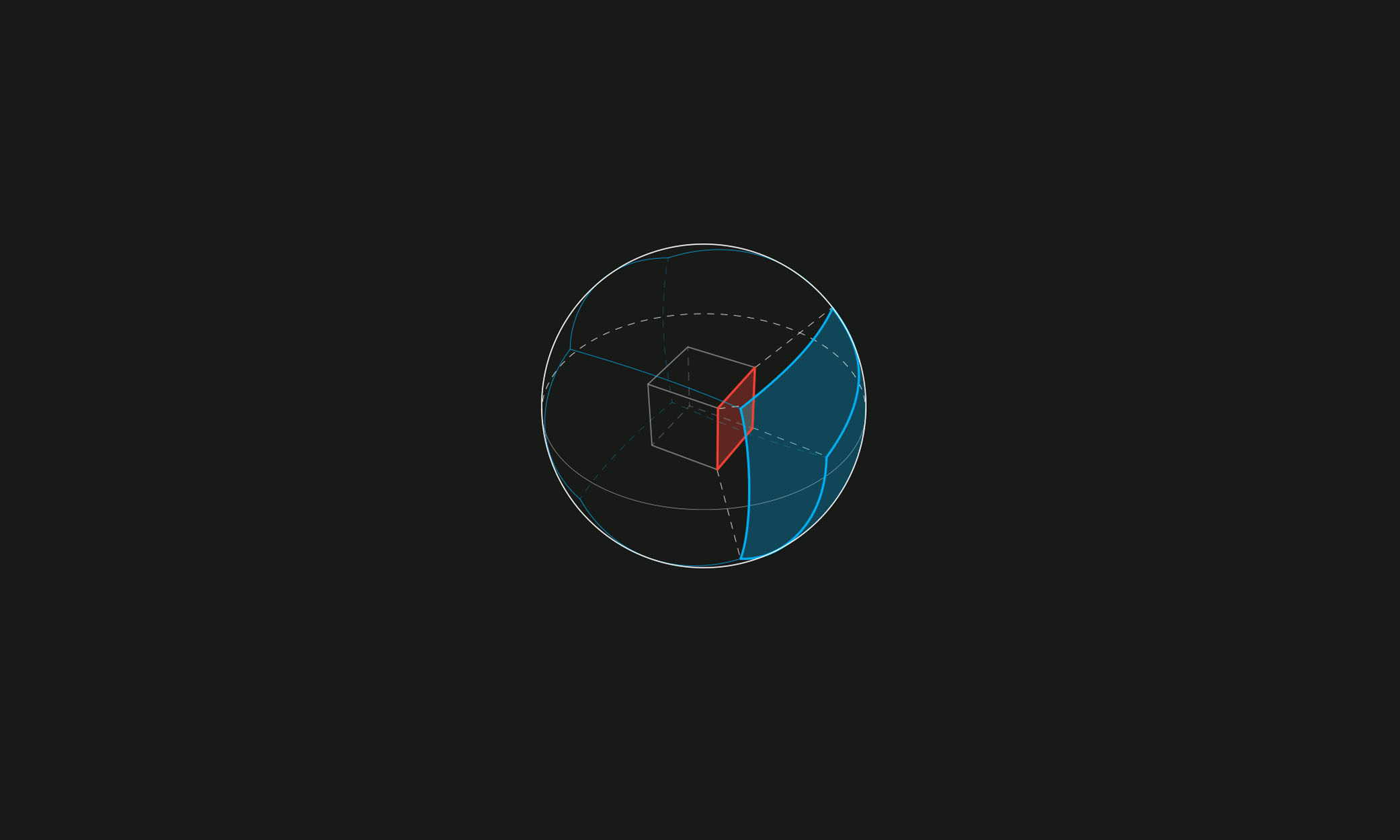

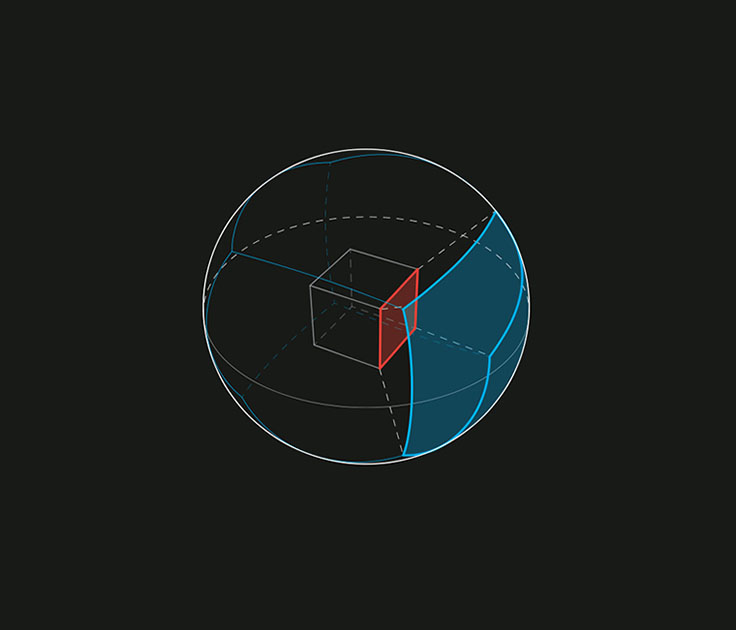

You can see the process for creating a cube map in the slide show below. The shark images used to demonstrate the concept are taken from a 360 video from Discovery, one of the first publishers to share 360 video on Facebook:

In order to implement our transformation from equirectangular layout to cube map, we created a custom video filter that uses multiple-point projection on every equirectangular pixel to calculate the appropriate value for every cube map pixel. That’s a lot of calculations. As an example, a 10-minute 360 video has 300 billion pixels that have to be mapped and stretched all over the cube.
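A minimal sketch of this kind of filter, assuming an inverse mapping: for each cube map pixel, several sub-pixel points are projected back into the equirectangular frame and averaged. The face orientation table, the function names, the nearest-neighbor lookup, and the `samples` parameter are all assumptions chosen for illustration; the production filter is not described at this level of detail here.

```python
import math

# Assumed convention: +x right, +y up, +z forward. Each face maps (u, v) in
# [-1, 1]^2 (u to the right, v downward) to a direction vector (x, y, z).
FACES = {
    "front":  lambda u, v: (u, -v, 1.0),
    "right":  lambda u, v: (1.0, -v, -u),
    "back":   lambda u, v: (-u, -v, -1.0),
    "left":   lambda u, v: (-1.0, -v, u),
    "top":    lambda u, v: (u, 1.0, v),
    "bottom": lambda u, v: (u, -1.0, -v),
}

def cube_pixel(equirect, face, i, j, n, samples=2):
    """Value of pixel (row i, col j) on an n x n face, averaged over samples^2 points."""
    height, width = len(equirect), len(equirect[0])
    total = 0.0
    for si in range(samples):
        for sj in range(samples):
            # Sub-pixel position expressed in face coordinates in [-1, 1].
            u = 2.0 * (j + (sj + 0.5) / samples) / n - 1.0
            v = 2.0 * (i + (si + 0.5) / samples) / n - 1.0
            x, y, z = FACES[face](u, v)
            # Direction -> longitude/latitude -> equirectangular pixel.
            lon = math.atan2(x, z)
            lat = math.atan2(y, math.hypot(x, z))
            col = min(int((lon / (2 * math.pi) + 0.5) * width), width - 1)
            row = min(int((0.5 - lat / math.pi) * height), height - 1)
            total += equirect[row][col]  # nearest-neighbor lookup
    return total / (samples * samples)

# Tiny grayscale frame: upper half white (1.0), lower half black (0.0).
frame = [[1.0 if r < 4 else 0.0 for _ in range(16)] for r in range(8)]
print(cube_pixel(frame, "top", 0, 0, 4), cube_pixel(frame, "bottom", 0, 0, 4))
```

Working backward from the output pixel means every cube map pixel receives exactly one value, with no holes to fill afterward. A real filter would likely use a better interpolation than the nearest-neighbor lookup shown here.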

We do this by transforming the top 25 percent of the equirectangular video to one cube face and the bottom 25 percent to another cube face. The middle 50 percent is converted to four cube faces. Later, we realign cube faces and put them in two rows (but the actual order is not important). The cube map output contains the same information as the equirectangular input, but it contains 25 percent fewer pixels per frame, an efficiency that matters when you’re working at Facebook’s scale.
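The 25 percent figure follows from the face sizes. The short check below assumes a 2:1 equirectangular frame and six equal square faces whose edge is set by the middle band; the function names and the 4320x2160 example size are illustrative assumptions.

```python
def equirect_pixels(height: int) -> int:
    """Pixels in a 2:1 equirectangular frame of the given height."""
    return (2 * height) * height

def cube_map_pixels(height: int) -> int:
    """Pixels in the six square faces cut from that frame."""
    # The middle 50 percent band is height/2 tall and 2*height wide, so each
    # of its four faces is (height/2) x (height/2). The top and bottom faces
    # are assumed to be the same size.
    face_edge = height // 2
    return 6 * face_edge * face_edge

height = 2160  # assumed example: a 4320x2160 source frame
saving = 1 - cube_map_pixels(height) / equirect_pixels(height)
print(f"{saving:.0%}")  # prints "25%"
```

In general the frame holds 2H² pixels and the six faces hold 6(H/2)² = 1.5H², so the ratio is 0.75 regardless of resolution.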

Simply put, to convert videos, for each frame, we:

- Put a sphere inside a cube.

- Wrap an equirectangular image of this frame on the sphere.

- Expand this sphere in every direction until it fills in the cube.
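These three steps can be sketched as a small projection function: take a point on the sphere and push it outward along its ray until it touches the cube. The axis convention (+x right, +y up, +z forward), the face labels, and the function name are assumptions made for this illustration.

```python
import math

def sphere_to_cube(lon: float, lat: float):
    """Project the sphere point at (lon, lat), in radians, onto the unit cube.

    Returns the name of the face that is hit and the (x, y, z) point on it.
    """
    # Steps 1 and 2: a point on the unit sphere sitting inside the cube,
    # addressed by the longitude/latitude of an equirectangular pixel.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    # Step 3: expand along the ray until the largest coordinate has
    # magnitude 1, which is where the ray meets the cube surface.
    largest = max(abs(x), abs(y), abs(z))
    point = (x / largest, y / largest, z / largest)
    if abs(x) == largest:
        face = "right" if x > 0 else "left"
    elif abs(y) == largest:
        face = "top" if y > 0 else "bottom"
    else:
        face = "front" if z > 0 else "back"
    return face, point

print(sphere_to_cube(0.0, 0.0)[0])          # straight ahead
print(sphere_to_cube(0.0, math.pi / 2)[0])  # straight up
```

Points near the middle of a face barely move, while points near the cube's corners are stretched the most, which is why the mapping has to be computed per pixel.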

Dealing with bit rate, file size, and encoding

Working with 360 videos at Facebook’s scale proved to be quite challenging on the backend.

Let’s take a moment to think about how big these files can be. To create a 360 video, you either use a special set of cameras to record all 360 degrees of a scene simultaneously or stitch together angles from, say, four GoPros on a stick. Incoming 360 video files are 4K and higher, at bit rates that can be over 50 megabits per second, which is about 22 GB per hour of footage. And 3D 360 stereo videos are twice that: about 44 GB for an hour of footage. We had several goals when working with file size: We wanted to decrease the bit rate and save storage, but we wanted to do it quickly so people wouldn’t have to wait for the video, and we didn’t want to compromise the video quality or resolution.
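The storage figures can be checked with a quick unit conversion. The helper below assumes decimal units (1 Mb = 10^6 bits, 1 GB = 10^9 bytes); its name is illustrative.

```python
def gigabytes_per_hour(megabits_per_second: float) -> float:
    """Convert a bit rate in megabits per second to gigabytes per hour."""
    bits_per_hour = megabits_per_second * 1e6 * 3600
    return bits_per_hour / 8 / 1e9  # 8 bits per byte, 1e9 bytes per GB

mono = gigabytes_per_hour(50)
stereo = 2 * mono  # stereo carries two views, so twice the data
print(mono, stereo)  # prints "22.5 45.0"
```

At exactly 50 megabits per second this comes to 22.5 GB per hour, in line with the rounded figures of 22 GB and 44 GB quoted above.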

Part of this work was accomplished in the custom video filter we applied; we also attacked it during the encoding process. Videos are sent to us in every format imaginable. To process large 360 videos in a reasonable length of time, we use distributed encoding to split the encoding process across many machines. This is conceptually simple but difficult in practice: chopping a video up, processing it on multiple machines, and stitching it back together without any glitches or loss of audiovisual synchronization is tricky.
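The split, process, and reassemble pattern can be illustrated in a few lines. This is only a sketch under loose assumptions: worker threads stand in for separate machines, `encode_chunk` is a placeholder rather than a real encoder, and chunk boundaries are plain frame counts. It does not reflect how the production system picks split points or handles audio.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(total_frames: int, chunk_frames: int):
    """Return (start, end) frame ranges that cover the whole video in order."""
    return [(start, min(start + chunk_frames, total_frames))
            for start in range(0, total_frames, chunk_frames)]

def encode_chunk(chunk):
    """Placeholder for the real per-chunk work (filtering and encoding)."""
    start, end = chunk
    return [f"encoded-frame-{i}" for i in range(start, end)]

def distributed_encode(total_frames: int, chunk_frames: int, workers: int = 4):
    chunks = split_into_chunks(total_frames, chunk_frames)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in submission order, so the chunks come back
        # in sequence even if they finish out of order.
        encoded = list(pool.map(encode_chunk, chunks))
    # Stitch the pieces back together into one continuous stream.
    return [frame for part in encoded for frame in part]

print(split_into_chunks(10, 3))
print(len(distributed_encode(10, 3)))
```

Keeping results in submission order is the easy part. The hard part in practice is choosing chunk boundaries so that the stitched output has no visible seams and audio stays in sync, which this sketch does not attempt.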

We use a dedicated tier of machines for this work and are lucky to be able to leverage Facebook’s extensible infrastructure to distribute the workload. We created a custom video filter to process the 360 videos because a typical video-processing job is very different from this workload. These processing jobs have a long duration and are CPU and memory intensive, but they aren’t very I/O intensive, so we optimized our task-loading and hardware for this work.

Looking ahead

This launch was a lot of fun — we’ve made great strides in a lot of areas in the stack. For example, we knew we needed to be able to process 360 video in a reasonable amount of time. While there are hundreds of ways this could fail, especially at Facebook’s scale, we’re proud to say the team has worked hard to help eliminate many of the key challenges.

Of course, hurdles remain. We haven’t yet cracked automatic detection of 360 video uploads; right now the false-positive rate keeps us from fully implementing it. Facebook’s scale is so large that even a 0.1 percent false-positive rate would mean incorrectly designating thousands of regular videos as 360 video. That’s a specific example, but there are many broader, exciting challenges to tackle as well. Higher resolutions, 3D video, and 360 video optimized for virtual reality are all part of the near future of this space. It’s an exciting time to be working on video. We hope you enjoy the experience we built today, and we look forward to launching more in the future.