Energy efficiency in the data center has both environmental and economic payoffs. Lately at Facebook we’ve been implementing strategies and industry best practices that allow us to run the most energy efficient data centers. We also made an interesting discovery along the way: you can realize greater efficiency gains from newer data centers, which are already much more energy efficient than their predecessors.

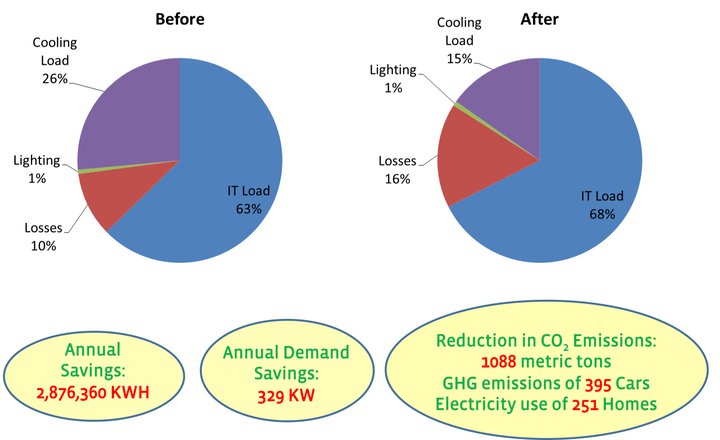

Recently we shared the energy efficiency strategies we deployed at one of our leased data centers. Today we’re sharing the energy efficiency strategies we applied at a second, smaller data center that resulted in an annual electricity savings of 2,876,360 kWh, reducing annual demand by 329 kW. These savings translate into a reduction of 1,088 metric tons of carbon dioxide emissions. According to EPA calculations, the energy savings gained from this project are equivalent to the emissions of 395 cars or the electricity usage of 251 homes. Furthermore, we reduced our annual electricity costs for this data center by about $273,000.
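
As a rough sanity check, the headline figures above can be related to one another with simple arithmetic. The sketch below is illustrative only: it assumes an 8,760-hour year and a constant load, neither of which is stated in this post, and the derived electricity rate and emissions factor are implied by the reported totals rather than taken from a utility bill or from the EPA.

```python
# Back-of-envelope check of the reported annual savings.
# Assumption (not from the post): a non-leap year of 8,760 hours at constant load.
HOURS_PER_YEAR = 8760

annual_savings_kwh = 2_876_360     # reported annual electricity savings
annual_cost_savings_usd = 273_000  # reported as "about $273,000"
co2_reduction_tonnes = 1_088       # reported CO2 reduction, metric tons

# Average demand reduction implied by the annual energy savings.
avg_demand_reduction_kw = annual_savings_kwh / HOURS_PER_YEAR

# Implied blended electricity rate and emissions factor (derived, not reported).
implied_rate_usd_per_kwh = annual_cost_savings_usd / annual_savings_kwh
implied_kg_co2_per_kwh = co2_reduction_tonnes * 1000 / annual_savings_kwh

print(f"Average demand reduction: {avg_demand_reduction_kw:.1f} kW")
print(f"Implied electricity rate: ${implied_rate_usd_per_kwh:.4f}/kWh")
print(f"Implied emissions factor: {implied_kg_co2_per_kwh:.3f} kg CO2/kWh")
```

Under these assumptions the average demand reduction works out to roughly 328 kW, close to the 329 kW reported, with an implied rate of about $0.095 per kWh and about 0.38 kg of carbon dioxide per kWh.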

Note: The increase in losses is due to a drop in the overall IT load at the site during the before and after periods of the project as well as metering data available at the time we retrieved the baseline numbers.

We realized these savings by matching the cooling demand load with the data center IT demand load. We did this by:

- Extending air-side economizer use.

- Elevating rack inlet temperatures.

- Reducing excess air supply.

- Implementing cold aisle containment.

- Optimizing server fan speeds.

This data center is cooled by rooftop air-conditioning units (RTUs). The RTUs are equipped with variable frequency drives (VFDs), which offer the opportunity for energy savings by modulating the speed of the fan motors. The RTUs produce cold air via an air-cooled direct expansion (DX) refrigeration cycle, with the option of air-side economization by bringing in cool outside air.

Although the cooling infrastructure of this data center differs from the first energy efficiency retrofit site, the underlying reasons for inefficient operation are the same: inefficient airflow distribution, excessive cooling, and low rack inlet temperatures. We applied many of the same strategies to this data center as we did to our first energy efficiency effort. The cold aisles were contained to eliminate the cold air bypass and hot air infiltration into the cold aisles. We worked with the server manufacturer to optimize the fan speed control algorithm, requiring less airflow (up to 8 CFM per server) and lower fan power (up to 3 watts less per server). The lower fan speeds elevate the air temperature flowing across the server; however, the temperature remains within the recommended range while minimizing the volume of air required to cool the server.

We observed that the supply fans of the operational RTUs were running at 100% capacity, resulting in excessive amounts of cold air being delivered into the data center. We attributed this operating condition to the very high number of perforated tiles installed in the data center floors. The high perforated floor tile count led to a lower underfloor static pressure than the design set point, causing the supply fans to run at full capacity. We replaced many unnecessary perforated tiles with solid tiles, which let us attain the desired underfloor pressure while running the supply fans at 80% capacity. This measure alone reduced the supply fan motor demand by 69 kW.
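
To see why a modest drop in fan speed can yield a large drop in fan power, the sketch below applies the idealized fan affinity law, under which power scales with the cube of speed. This is an assumption for illustration: the post does not say how the 69 kW figure was measured, and real fans with VFDs deviate from the ideal cube law. The function name and the implied baseline are hypothetical, not reported values.

```python
# Idealized fan affinity law: power is proportional to speed cubed.
# Assumption (not from the post): "80% capacity" is treated as 80% fan speed.

def fan_power_fraction(speed_fraction: float) -> float:
    """Return fan power as a fraction of full-speed power (ideal cube law)."""
    return speed_fraction ** 3

speed_after = 0.80            # supply fans at 80% after the tile changes
reported_reduction_kw = 69.0  # reported supply fan demand reduction

power_fraction_after = fan_power_fraction(speed_after)
fractional_saving = 1.0 - power_fraction_after

# Full-speed fan demand that would be consistent with a 69 kW reduction
# under the ideal law. This is an inference, not a figure from the post.
implied_baseline_kw = reported_reduction_kw / fractional_saving

print(f"Power at 80% speed: {power_fraction_after:.1%} of full-speed power")
print(f"Fractional saving:  {fractional_saving:.1%}")
print(f"Implied full-speed fan demand: {implied_baseline_kw:.0f} kW")
```

Under the ideal law, running at 80% speed needs only about half of the full-speed power, which is why reducing excess air supply pays off so quickly.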

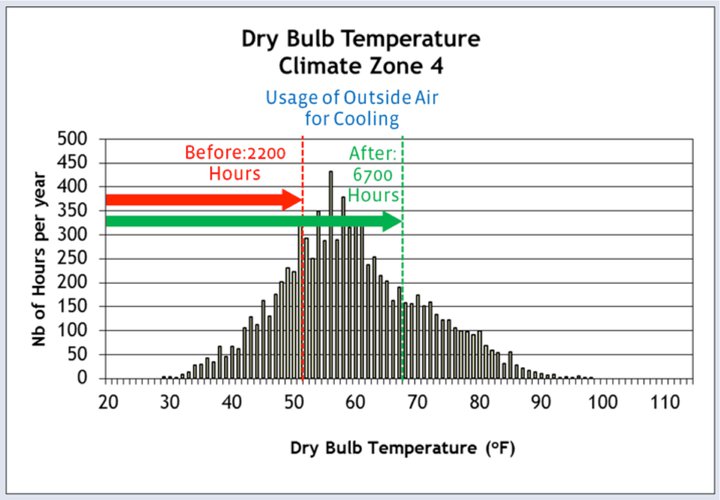

We addressed the low rack inlet temperature issue by raising the supply temperature set point of the RTUs from 51 degrees Fahrenheit to 67 degrees. Outside air is always the cheapest cooling solution, and we realized large reductions in electricity usage by expanding the time window for air-side economization, that is, using outside air to cool the data center. This minimizes the energy required to mechanically cool the air down to the design temperature. When the supply temperature set point was 51 degrees, free cooling was achieved for only 2,200 hours during the year, so the DX system had to work to cool the air to 51 degrees for the remaining 6,560 hours. By raising the supply temperature set point to 67 degrees Fahrenheit, we can now use outside air for 6,800 hours per year, more than tripling our free cooling hours. The DX cooling system now runs for a much shorter time period, reducing the demand by 260 kW.
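
The economizer figures above can be cross-checked with a few lines of arithmetic. The sketch below assumes an 8,760-hour year, which is consistent with the 2,200 and 6,560 hour split quoted above, and simply adds the two reported demand reductions. The variable names are illustrative.

```python
# Cross-check of free cooling hours and the combined demand reduction.
HOURS_PER_YEAR = 8760  # assumption: non-leap year

free_cooling_hours_before = 2200  # at a 51 degree F supply set point
free_cooling_hours_after = 6800   # at a 67 degree F supply set point

# Hours per year in which the DX system has to provide mechanical cooling.
dx_hours_before = HOURS_PER_YEAR - free_cooling_hours_before
dx_hours_after = HOURS_PER_YEAR - free_cooling_hours_after

free_cooling_ratio = free_cooling_hours_after / free_cooling_hours_before

# Reported demand reductions from the two main measures.
fan_reduction_kw = 69   # supply fans at 80% capacity
dx_reduction_kw = 260   # shorter DX run time
total_reduction_kw = fan_reduction_kw + dx_reduction_kw

print(f"DX hours before: {dx_hours_before}, after: {dx_hours_after}")
print(f"Free cooling hours increased {free_cooling_ratio:.2f}x")
print(f"Combined demand reduction: {total_reduction_kw} kW")
```

The two reductions sum to the 329 kW total reported for the project, and mechanical cooling drops from 6,560 hours to 1,960 hours per year.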

Modernity Allows for Greater Potential Savings

What surprised us about these efficiency gains is that this data center was built much more recently and is a more efficient building. By implementing energy efficiency strategies correctly, we’re releasing fewer greenhouse gases at this data center than at the larger one we discussed previously.

You can implement these strategies in your own data center, even one that’s already served by an energy efficient infrastructure. In the meantime, we’ll continue to implement these strategies at more data centers while we explore new ways in which we can improve their energy efficiency. We will also continue to share our findings with the industry.

Dan Lee, P.E., and Veerendra Mulay, Ph.D., also contributed to this research.