Facebook has one of the largest MySQL installations in the world, with thousands of database servers in multiple regions, so it’s no surprise that we have unique challenges when we take backups. Our job is to keep every piece of information you add safe, while ensuring that anything that’s been deleted is purged in a timely manner.

To accomplish this, we’ve built a highly automated, extremely effective backup system that moves many petabytes a week. Rather than extensive front-loaded testing, we emphasize rapid detection of failures and quick, automated correction. Deploying hundreds of new database servers requires very little human effort and lets us grow at the pace, and with the flexibility, required to support more than a billion active users. Here’s a bit about how it works.

Stage 1: Binary logs and mysqldump

The first line of protection is called “stage 1” or “rack” backups (RBUs). In each rack of databases, no matter what type, there are two RBU storage servers. Having RBUs in the same rack as the database servers allows for maximum bandwidth and minimum latency, and also allows them to act as a buffer for the next stage of backups (more on that later).

One of these servers’ jobs is to collect what are known as binary logs. These logs are second-by-second records of database updates. Binary logs are constantly streamed to the RBU hosts by means of a simulated secondary process. Effectively, the RBU receives the same updates as a replica without running mysqld.

Keeping binary logs current on the RBU is important: If a primary database server were to go offline, any users on that server would be unable to make status updates, upload photos, etc. Failures happen, but we need to make the repair time as short as possible to keep Facebook up for everybody. Having the binary logs available allows us to promote another database to primary in mere seconds. Since up-to-the-second binary logs are available on the RBU, we can still do this even if the old primary is completely unavailable. Having binary logs also allows for point-in-time recovery by applying the recorded transactions to a previous backup.
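To make the point-in-time recovery idea concrete, here is a minimal sketch in Python. It is a toy model, not the actual tooling: the `BinlogEvent` type, the key/value "database", and the function name are all hypothetical, and it assumes event timestamps never decrease as log positions increase.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class BinlogEvent:
    """Hypothetical, simplified stand-in for one recorded update."""
    position: int          # offset of the event in the log
    timestamp: int         # commit time, in seconds
    key: str
    value: Optional[str]   # None models a delete


def point_in_time_restore(
    snapshot: Dict[str, str],
    snapshot_position: int,
    events: Iterable[BinlogEvent],
    target_time: int,
) -> Dict[str, str]:
    """Rebuild state as of target_time from a backup plus later log events."""
    state = dict(snapshot)  # start from the previous backup
    for event in sorted(events, key=lambda e: e.position):
        if event.position <= snapshot_position:
            continue  # already reflected in the backup
        if event.timestamp > target_time:
            break  # stop replaying at the requested moment
        if event.value is None:
            state.pop(event.key, None)
        else:
            state[event.key] = event.value
    return state


# Backup taken after event 1; replay up to t=250 keeps the later delete out.
snapshot = {"status": "hello"}
events = [
    BinlogEvent(1, 100, "status", "hello"),
    BinlogEvent(2, 200, "photo", "cat.jpg"),
    BinlogEvent(3, 300, "status", None),
]
restored = point_in_time_restore(snapshot, 1, events, 250)
```

The same two inputs, a backup and the log position it corresponds to, are what make the recorded transactions replayable: anything at or before the backup's position is skipped, and replay stops at the chosen time.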

The servers’ second job is to take traditional backups. There are two ways to back up a MySQL server: binary and logical (mysqldump). Facebook uses logical backups because they are version-independent, provide better data integrity, are more compact, and take much less effort to restore. (Binary copies are still used when building new replicas for a given database, but that’s a whole other post.)

One major advantage of mysqldump is that on-disk data corruption is not propagated to the backup. If a sector goes bad on disk, or is written incorrectly, the InnoDB page checksum will be incorrect. When assembling the backup stream, MySQL will either read the correct block from memory, or go to disk and encounter the bad checksum, halting the backup (and the database process). A disadvantage of mysqldump is pollution of the LRU cache, used for InnoDB block caching; fortunately, newer versions of MySQL include code to move LRU insertions from scans to the end of the cache.

Each RBU takes a nightly backup of all databases under its purview. Despite the size of our environment, we are able to finish a backup of everything in just a few hours.
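As an illustration only, a logical backup of a single database could be driven from Python roughly as follows. The function names, host, and output path are hypothetical, and the flags shown (`--single-transaction` for a consistent InnoDB snapshot, `--quick` to stream rows instead of buffering them) are standard `mysqldump` options chosen for this sketch, not a description of the options used in production.

```python
import gzip
import shutil
import subprocess
from typing import List


def build_dump_command(host: str, database: str) -> List[str]:
    """Assemble a mysqldump invocation for one database (assumed options)."""
    return [
        "mysqldump",
        f"--host={host}",
        "--single-transaction",  # consistent snapshot without locking InnoDB tables
        "--quick",               # stream rows rather than buffering whole tables
        "--databases",
        database,
    ]


def run_dump(host: str, database: str, out_path: str) -> None:
    """Stream the dump through gzip; raise if mysqldump exits non-zero."""
    proc = subprocess.Popen(build_dump_command(host, database),
                            stdout=subprocess.PIPE)
    with gzip.open(out_path, "wb") as out:
        shutil.copyfileobj(proc.stdout, out)
    if proc.wait() != 0:
        # The partially written file at out_path should not be trusted.
        raise RuntimeError(f"mysqldump failed for {database} on {host}")
```

Raising on a non-zero exit matters here: if the dump halts partway (for example, on a bad page checksum), the backup is reported as failed rather than stored as if it were complete.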

If an RBU fails, automated software redistributes its responsibility to other systems in the same cluster. When it’s brought back online, this responsibility is returned to the original host RBU. We are not overly concerned about data retention on individual systems because of the architecture of stage 2.
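The redistribution logic can be sketched as a pure function from "which RBUs are healthy" to "who backs up what". This is a simplified model with hypothetical names; in particular, the least-loaded placement rule is an assumption made for the example, not a description of the real scheduler.

```python
from typing import Dict, Set


def assign_backups(home: Dict[str, str], healthy: Set[str]) -> Dict[str, str]:
    """Map each database to the RBU currently responsible for it.

    home:    database -> its original (home) RBU
    healthy: RBUs in the cluster that are currently online
    """
    if not healthy:
        raise ValueError("no healthy RBU available in this cluster")

    candidates = sorted(healthy)
    load = {rbu: 0 for rbu in candidates}
    assignment: Dict[str, str] = {}

    # Databases whose home RBU is online stay where they are.
    for db, rbu in sorted(home.items()):
        if rbu in healthy:
            assignment[db] = rbu
            load[rbu] += 1

    # Orphaned databases go to the least-loaded healthy RBU (assumed policy).
    for db, rbu in sorted(home.items()):
        if rbu not in healthy:
            target = min(candidates, key=lambda r: (load[r], r))
            assignment[db] = target
            load[target] += 1

    return assignment


home = {"db1": "rbu-a", "db2": "rbu-a", "db3": "rbu-b", "db4": "rbu-c"}
during_outage = assign_backups(home, {"rbu-b", "rbu-c"})
after_recovery = assign_backups(home, {"rbu-a", "rbu-b", "rbu-c"})
```

Because the assignment is recomputed from the home mapping each time, responsibility returns to the original RBU as soon as it is back in the healthy set, with no separate hand-back step.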

Stage 2: Hadoop DFS

After each backup and binary log is collected, we immediately copy it to one of our large, customized Hadoop clusters. These are highly stable, replicated data stores with fixed retention times. Because disk sizes grow quickly, older generations of RBUs may be too small to store more than a day or two of backups, but we can grow the Hadoop clusters as large as required without regard to the underlying hardware. The distributed nature of Hadoop also gives us plenty of bandwidth should we ever need to recover data quickly.

Soon we will shift non-real-time data analysis to these Hadoop clusters. This will reduce the number of non-critical reads on database servers, leaving more room to make Facebook as fast and responsive as we can make it.

Stage 3: Long-term storage

Once a week, we copy the backups from Hadoop to discrete storage in a separate region; this is known as “stage 3.” These systems are modern, secure storage outside the flow of our normal management tools.

Monitoring

In addition to the usual system monitoring, we capture many specific statistics, such as binlog collection lag and system capacity.

The most valuable tool we have is scoring the severity of a backup failure. Because it’s normal to miss some backups due to the size of the environment and the amount of ongoing maintenance (rebuilding replicas, etc.), we need a way to catch both widespread failures and individual backups that have not succeeded for several days. For this reason, the score of a missing backup increases exponentially with age, and various aggregations of these scores give us a useful, quick indicator of backup health.

As an example, one database missing for one day is worth one point, and 50 databases missing for a day would be 50 points. But one database missing for 3 days would be 27 points (3^3), and 50 missing for 3 days, a serious problem, would be 1350 points (50 * 3^3). This would cause a huge spike on our graphs and trigger immediate action.
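The arithmetic in this example can be reproduced with a few lines of Python. The sketch assumes the per-database score is the cube of the number of days missing, which matches the figures quoted here (1 day gives 1 point, 3 days gives 27); the exact production formula is not spelled out, and the function name is hypothetical.

```python
from typing import Iterable


def backup_health_score(days_missing: Iterable[int]) -> int:
    """Sum per-database scores, where each score is (days missing) cubed.

    days_missing holds one entry per database whose backup is missing;
    databases with a current backup (0 days) contribute nothing.
    """
    return sum(days ** 3 for days in days_missing if days > 0)


one_db_one_day = backup_health_score([1])
fifty_dbs_one_day = backup_health_score([1] * 50)
one_db_three_days = backup_health_score([3])
fifty_dbs_three_days = backup_health_score([3] * 50)
```

Summing the scores is what lets a single number flag both failure modes: many fresh misses add up linearly, while a few stale ones dominate the total quickly.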

Restores

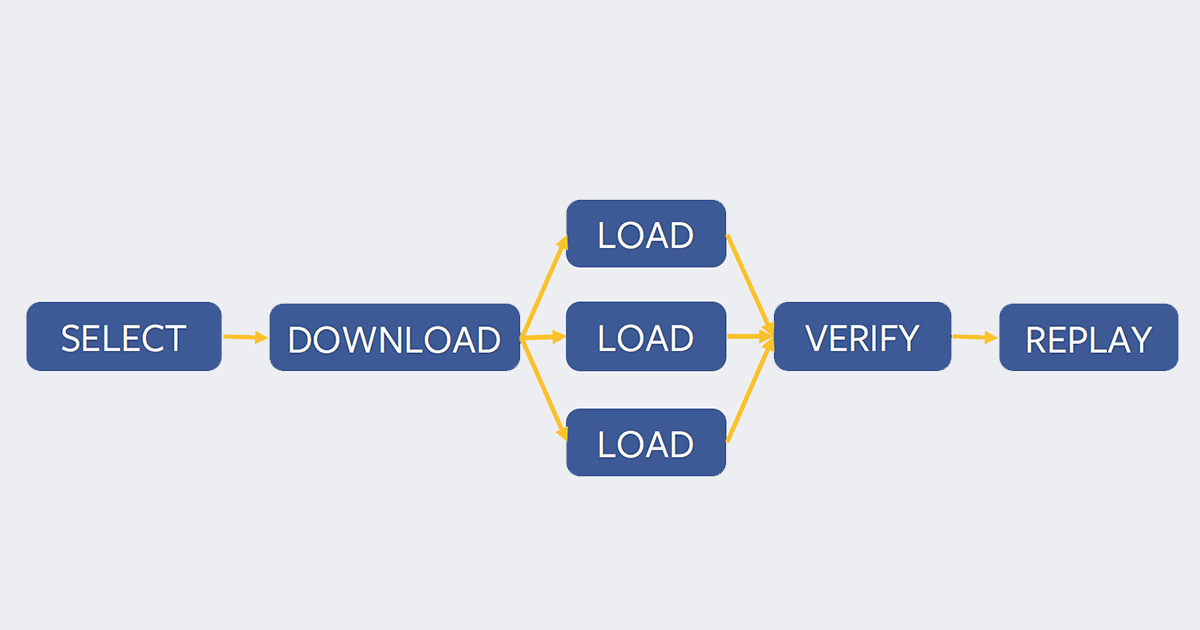

There’s an old saying in the world of system administrators: “If you haven’t tested your backups, you don’t have them.” We wholeheartedly agree, so we’ve built a system that continuously restores from stage 2 to test servers. After the restore, we run various sanity checks on the data. If there are any repeated problems, we raise an alarm for human review. This system can catch everything from bugs in MySQL to flaws in our backup process, and allows us to be more flexible with changes to the backup environment.

We have also developed a system called ORC (a recursive acronym for “ORC Restore Coordinator”) that will allow self-service restores for engineers who may want to recover older versions of their tools’ databases. This is especially handy for rapid development.

Backups are not the most glamorous type of engineering. They are technical, repetitive, and when everything works, nobody notices. They are also cross-discipline, requiring systems, network, and software expertise from multiple teams. But ensuring your memories and connections are safe is incredibly important, and at the end of the day, incredibly rewarding.

In an effort to be more inclusive in our language, we have edited this post to replace the terms “master” and “slave” with “primary” and “secondary”.