This year at CVPR, the annual computer vision conference, Oculus scientist Richard Newcombe and partners at the University of Washington in Seattle won the Best Paper Award for their paper “DynamicFusion: Reconstruction and Tracking of Non-rigid Scenes in Real-Time.”

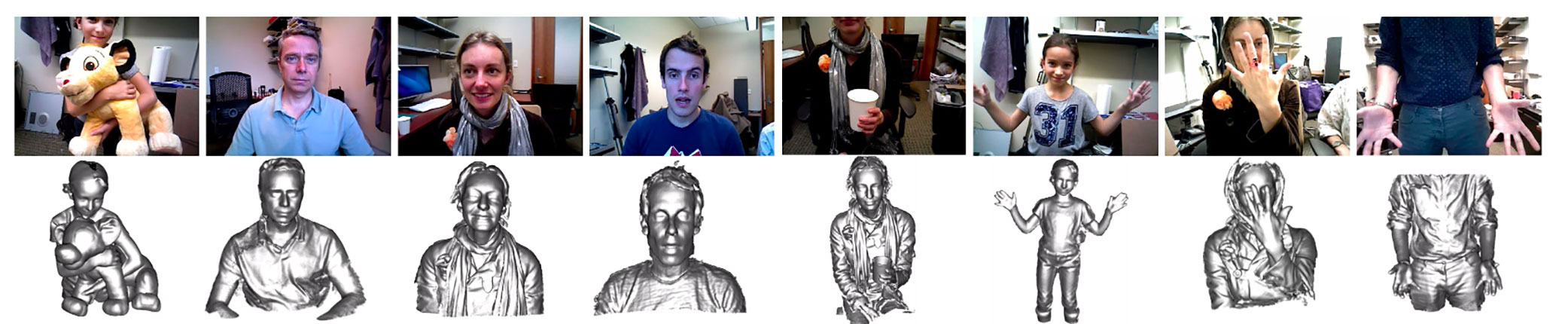

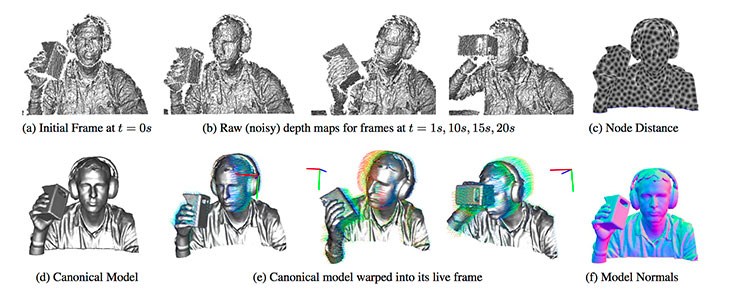

The paper introduces DynamicFusion, the “first real-time dense dynamic scene reconstruction system.” As the paper describes, reconstructing moving scenes in real time removes the need for people and objects to hold still while they are scanned. This opens up a host of applications where real-time 3-D scanning was not possible before, and it offers a way to bring more of the real world into virtual and augmented reality.

Read the award-winning paper, and then visit UW’s Computer Science & Engineering department to learn more about its research. You can see DynamicFusion in action in the video below.

Congrats to Richard and the DynamicFusion team!