Facebook is making it possible for people to create and share new immersive experiences using video and VR, and by some estimates video will make up 75 percent of the world’s mobile data traffic by 2020. With the onset of these new services, we need to make sure our global infrastructure is designed to handle richer content at faster speeds. To meet these current requirements and any future bandwidth demands, we’re working toward the 100G data center.

Today we’re excited to introduce Backpack, our second-generation modular switch platform. Together with our recently announced Wedge 100, this completes our 100G data center pod.

We’ve already begun putting Backpack in production at Facebook, and we’ve submitted the specification to the Open Compute Project for review. Going forward, we are excited to work with the community to develop an ecosystem around Backpack and help others build on this platform.

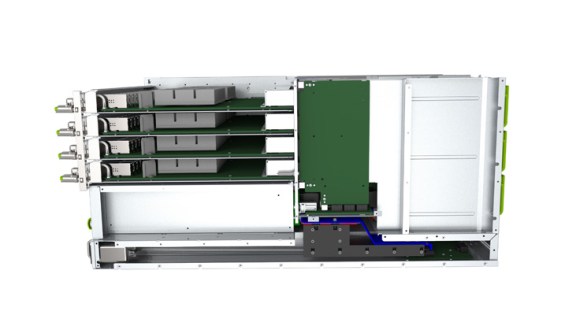

Backpack modular system

As we migrate our infrastructure from 40G to 100G, we’re faced with several hardware design challenges. Not only does our network need to run at a higher speed, but we also need a better cooling system to support 100G ASIC chips and optics, which consume significantly more power than previous generation components. Backpack is designed to meet both of these requirements while keeping our fabric design simple and scalable.

Backpack has a fully disaggregated architecture that uses simple building blocks called switch elements, and it has a clear separation of the data, control, and management planes. It has an orthogonal direct chassis architecture, which enables better signal integrity and opens up more air channel space for better thermal performance. Finally, it has a sophisticated thermal design to support low-cost 55°C optics.

Software

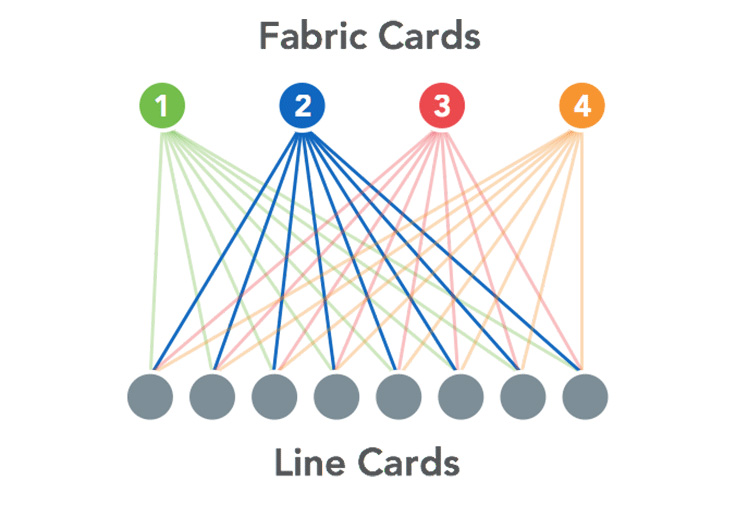

On the software side, we continue to use FBOSS and OpenBMC, our own open-source networking stack and baseboard management implementations. To support the new chassis, we were able to leverage a lot of the work we did for 6-pack, our first-generation modular switch. Architecturally, we kept the no-supervisor model when building these bigger switches. The Backpack chassis is equivalent to a set of 12 Wedge 100 switches connected together, and its internal topology is a two-stage Clos.

We continue to use the BGP routing protocol for the distribution of routes between the different line cards in the chassis. To that end, we have fabric cards set up as route reflectors and line cards set up as route reflector clients.
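As a rough illustration of the arrangement described above, the sketch below models the chassis as a two-stage Clos in which every line card peers with every fabric card, with fabric cards acting as route reflectors and line cards as their clients. The split of the 12 switch elements into 8 line cards and 4 fabric cards, the card names, and the helper functions are assumptions made for the example; they are not taken from FBOSS.

```python
from itertools import product


def build_chassis(num_line_cards=8, num_fabric_cards=4):
    """Model a two-stage Clos chassis and its internal BGP sessions.

    The 8 + 4 split is an assumption chosen so the total is 12 switch
    elements; the names lc1..lcN and fc1..fcM are hypothetical.
    """
    line_cards = [f"lc{i}" for i in range(1, num_line_cards + 1)]
    fabric_cards = [f"fc{i}" for i in range(1, num_fabric_cards + 1)]
    # Every line card connects to every fabric card. The fabric card is the
    # route reflector and the line card is the route reflector client.
    sessions = [
        {"reflector": fc, "client": lc}
        for fc, lc in product(fabric_cards, line_cards)
    ]
    return line_cards, fabric_cards, sessions


def full_mesh_sessions(num_elements):
    """Number of sessions a full mesh between all elements would need."""
    return num_elements * (num_elements - 1) // 2


line_cards, fabric_cards, sessions = build_chassis()
total_elements = len(line_cards) + len(fabric_cards)
print(total_elements, "switch elements")
print(len(sessions), "reflector-to-client sessions")
print(full_mesh_sessions(total_elements), "sessions in a full mesh")
```

Under these assumptions, reflection needs 32 sessions where a full mesh between all 12 elements would need 66, and no line card has to peer directly with another line card.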

We are integrating the switch’s software into the existing BGP monitoring infrastructure at Facebook, where we log all BGP route updates to our centralized in-memory data store, Scuba. For our particular simultaneous update case, if we had had the ability to zoom in, we would have seen a number of BGP withdraw messages where none were expected. Similarly, while our BGP monitor gave us a control plane view, we realized we were lacking a data plane view. To that end, we also built a FIB monitor that can now log FIB updates. This ability to debug the deepest internals of FBOSS switches with sophisticated tools like Scuba has proven to be very effective.
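To make the idea of "zooming in" on logged updates concrete, here is a minimal sketch that buckets BGP updates by time window and reports the windows containing withdraw messages. The record layout (`ts`, `type`, `prefix`, `peer`) and the function name are hypothetical; this does not reflect the actual Scuba schema or query interface.

```python
from collections import Counter


def withdraws_per_window(updates, window_seconds=1, threshold=0):
    """Return {window_start: withdraw_count} for windows above threshold.

    `updates` is an iterable of dicts with hypothetical keys "ts" (seconds)
    and "type" ("announce" or "withdraw").
    """
    counts = Counter()
    for update in updates:
        if update["type"] == "withdraw":
            window_start = int(update["ts"] // window_seconds) * window_seconds
            counts[window_start] += 1
    return {w: n for w, n in sorted(counts.items()) if n > threshold}


# Made-up sample records, for illustration only.
sample = [
    {"ts": 100.1, "type": "announce", "prefix": "10.0.0.0/24", "peer": "fc1"},
    {"ts": 100.4, "type": "withdraw", "prefix": "10.0.0.0/24", "peer": "fc1"},
    {"ts": 100.6, "type": "withdraw", "prefix": "10.0.1.0/24", "peer": "fc2"},
    {"ts": 103.2, "type": "announce", "prefix": "10.0.1.0/24", "peer": "fc2"},
]
print(withdraws_per_window(sample))
```

During a period in which no withdraws are expected, any non-empty result is a signal worth investigating.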

With this design, the load of the control plane is spread across all the cards and not centralized on a single one. This allows us to move to different form factors without requiring serious per-chassis tweaks to the software. In addition, the fact that the software remains largely unchanged across our different hardware platforms means that any improvements we make to scale out for bigger switches flow down naturally to rack switches as well.

Testing and production

Our testing approach at Facebook is divided into phases. There are three primary phases that a new platform, such as Backpack, goes through before it can be deployed in our production network:

- Engineering validation testing: This is the phase for early prototypes of the hardware. As part of this phase, we perform basic testing around the mechanical, thermal, power, and electrical engineering characteristics of the platform. We also run a basic snake test in which traffic passes through all ports of the modular chassis in a standalone state.

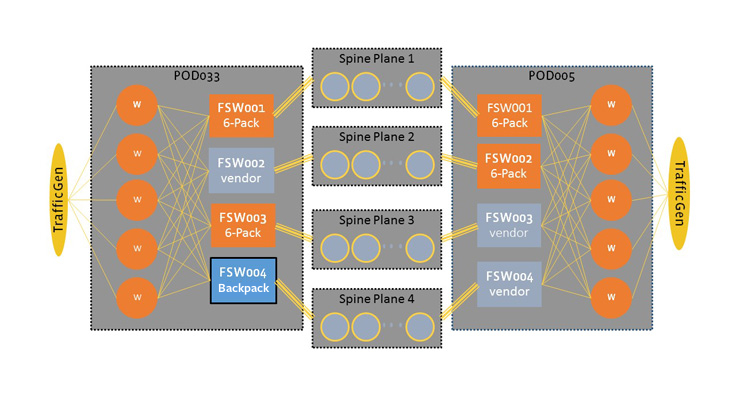

- Design validation testing: This is the phase where we perform comprehensive functional testing of the platform while it’s integrated with other network devices. The testing typically takes place in a mini-fabric that was put together in our labs.

- Production validation testing: This is the last stage before the hardware can exit to mass production status. In this phase, we place the platform in a test pod inside our production network and monitor its performance at real production scale.

When we ran 6-pack through these testing phases, we uncovered bugs and performance deficiencies. Because Backpack relies on the same software code base, the performance gains from fixing these issues in 6-pack carried over automatically. For example, removing BGP Next Hop from the ECMP groups sped up previously slow reactions to failure conditions. We also switched to a new port striping method in which separate line cards were dedicated to rack switch connectivity and spine switch connectivity. With this new configuration, traffic was load-balanced properly through the chassis whether it had to traverse the fabric spine or egress through another front-panel interface.

Incorporating these fixes into Backpack from the start helped us achieve all our design goals within a year, and gave us a head start on optimizing Backpack for a production environment.

The Backpack system-level testing took place in a testing pod at our Altoona Data Center. The testing covered the following areas:

- Functionality of BGP (peerings, policy, backup groups, VIP prefix processing)

- Traffic forwarding and load balancing (Intra-Pod and Inter-Pod flows)

- Recovery from failures (interface shut, link down, reseating & rebooting LC, FC and SCM, bgpd process kill)

- Performance (measurement of traffic loss in reaction to the failures listed in the bullet above)

- Integration with Facebook DC Tools for performing Drain/Undrain, Code Upgrades and Statistics Collection

- Route Scale (local generation of 16K prefixes: 8K V4 + 8K V6)

- Longevity (traffic run for an extended period of time)
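For the route scale item in the list above, a set of distinct test prefixes can be produced with Python's standard `ipaddress` module. This is only a sketch: the base networks, the prefix lengths, and the reading of "8K" as 8,192 are assumptions for the example, and the post does not describe how the prefixes were actually generated.

```python
import ipaddress
from itertools import islice


def generate_prefixes(v4_count=8192, v6_count=8192):
    """Return (v4_prefixes, v6_prefixes) as lists of distinct networks.

    Base networks and prefix lengths are illustrative assumptions:
    /24s carved from 10.0.0.0/8 and /64s carved from 2001:db8::/32.
    """
    v4_base = ipaddress.ip_network("10.0.0.0/8")
    v6_base = ipaddress.ip_network("2001:db8::/32")
    # subnets() is a generator, so islice takes only the prefixes we need.
    v4 = list(islice(v4_base.subnets(new_prefix=24), v4_count))
    v6 = list(islice(v6_base.subnets(new_prefix=64), v6_count))
    return v4, v6


v4_prefixes, v6_prefixes = generate_prefixes()
print(len(v4_prefixes) + len(v6_prefixes), "prefixes generated")
print(v4_prefixes[0], v6_prefixes[0])
```

The resulting lists could then be fed to whatever route injection tool the test setup uses.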

In the next phase, we replaced one fabric switch with a Backpack chassis in a production pod, and it integrated seamlessly with the production environment. Going forward, our plan is to continue deploying Backpack in production pods hosting various frontend and backend services to ensure that it can meet the demands of a wide range of applications served by our data centers. Over the next few months, we will work toward pods that are fully built with Backpack fabric switches.

Working in the open

Backpack provides the bandwidth capacity that Facebook needs to meet the rapid growth in our traffic. Because Backpack runs FBOSS, its integration with Wedge 40 and Wedge 100 in the TOR tier gives us more flexibility to explore new features, such as new routing protocols, that we couldn’t explore with TORs alone. This approach also maintains consistency in our automated provisioning and monitoring tools, which simplifies the management of our data centers. The experience that we acquired writing and deploying our own network stack allowed us to get Backpack into production in a matter of months.

As we have already done for our Wedge and 6-pack devices, we are contributing Backpack’s design to the Open Compute Project. We are committed to our efforts in the open hardware space and will continue working with the OCP community to develop open network technologies that are more flexible, scalable, and efficient.

Thanks to all the teams that have made Backpack possible: Net HW Eng, NetSystems, DNE, NIE, ENS, IPP, SOE, and CEA.