As Facebook’s infrastructure has scaled, we’ve frequently run up against the limits of traditional networking technologies, which tend to be too closed, too monolithic, and too iterative for the scale at which we operate and the pace at which we move. Over the last few years we’ve been building our own network, breaking down traditional network components and rebuilding them into modular disaggregated systems that provide us with the flexibility, efficiency, and scale we need.

We started by designing a new top-of-rack network switch (code-named “Wedge”) and a Linux-based operating system for that switch (code-named “FBOSS”). Next, we built a data center fabric, a modular network architecture that allows us to scale faster and more easily. For both of these projects, we broke apart the hardware and software layers of the stack and gained greater visibility, automation, and control in the operation of our network.

But even with all that progress, we still had one more step to take. We had a TOR, a fabric, and the software to make it run, but we still lacked a scalable solution for all the modular switches in our fabric. So we built the first open modular switch platform. We call it “6-pack.”

Narration by Omar Baldonado.

The platform

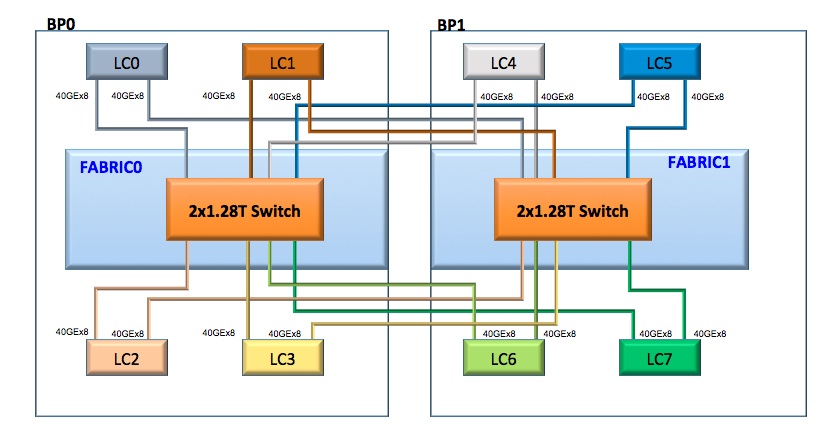

The “6-pack” platform is the core of our new fabric, and it uses “Wedge” as its basic building block. It is a full-mesh, non-blocking, two-stage switch that includes 12 independent switching elements. Each element can switch 1.28Tbps. We have two configurations: one exposes 16x40GE ports to the front and 640G (16x40GE) to the back, and the other, used for aggregation, exposes all 1.28T to the back. Each element runs its own operating system on its local server and is completely independent, from switching to low-level board control and the cooling system. This means we can modify any part of the system, software or hardware, with no system-level impact. We created a unique dual-backplane solution that enabled us to build a non-blocking topology.
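The capacity figures for the two configurations can be checked with a few lines of arithmetic. The Python sketch below assumes 40GE ports throughout, so a 1.28Tbps element has 32 ports; the function name `element_config` and the "oversubscription" ratio are illustrative, not part of any Facebook tooling.

```python
PORT_GBPS = 40       # assumption: every port is 40GE
ELEMENT_GBPS = 1280  # each switching element switches 1.28 Tbps

def element_config(front_ports: int, back_ports: int) -> dict:
    """Return front/back bandwidth (Gbps) for one hypothetical element configuration."""
    front = front_ports * PORT_GBPS
    back = back_ports * PORT_GBPS
    if front + back > ELEMENT_GBPS:
        raise ValueError("ports exceed the element's switching capacity")
    return {
        "front_gbps": front,
        "back_gbps": back,
        # Ratio of front (host-facing) to back (fabric-facing) bandwidth;
        # a value of 1.0 or lower means the element is not oversubscribed.
        "oversubscription": front / back if back else float("inf"),
    }

# Line-card configuration: 16x40GE to the front, 16x40GE to the back.
line_card = element_config(front_ports=16, back_ports=16)
# Aggregation (fabric) configuration: all 32x40GE to the back.
fabric = element_config(front_ports=0, back_ports=32)
```

Both configurations use the element's full 1.28Tbps, and the line-card configuration has a 1:1 front-to-back ratio, which is what lets traffic entering the front always find bandwidth toward the fabric.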

We run our networks in a split control configuration. Each switching element contains a full local control plane on a microserver that communicates with a centralized controller. This configuration, often called hybrid SDN, gives us a simple and flexible way to manage and operate the network, and it has delivered high stability and availability.
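To make the split-control idea concrete, here is a minimal Python sketch of one element's forwarding decision under a hybrid SDN model. It assumes a simple precedence rule (controller-pushed routes override locally learned ones) and a fallback policy in which the element keeps forwarding on local state when the controller is lost. The class and method names are hypothetical and do not reflect the FBOSS API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

@dataclass
class SwitchingElement:
    """Hypothetical model of one element's split (hybrid SDN) control plane."""
    name: str
    # Routes computed by the local control plane on the element's microserver.
    local_routes: Dict[str, str] = field(default_factory=dict)
    # Routes pushed by the centralized controller; these take precedence.
    controller_routes: Dict[str, str] = field(default_factory=dict)

    def learn(self, prefix: str, next_hop: str) -> None:
        self.local_routes[prefix] = next_hop

    def apply_override(self, prefix: str, next_hop: str) -> None:
        self.controller_routes[prefix] = next_hop

    def controller_lost(self) -> None:
        # Assumed policy: drop central overrides and keep forwarding on local state.
        self.controller_routes.clear()

    def next_hop(self, prefix: str) -> Optional[str]:
        # Prefer the controller's decision, else fall back to the local one.
        return self.controller_routes.get(prefix, self.local_routes.get(prefix))

element = SwitchingElement("lc0")
element.learn("10.0.0.0/24", "fe0")          # local control plane's choice
element.apply_override("10.0.0.0/24", "fe1")  # controller steers traffic elsewhere
steered = element.next_hop("10.0.0.0/24")
element.controller_lost()
fallback = element.next_hop("10.0.0.0/24")
```

The design point the sketch illustrates is that the element never depends on the controller to forward traffic: central control adds flexibility on top of a control plane that can stand on its own.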

The only elements shared across the system are the sheet-metal shell, the backplanes, and the power supplies. This makes it easy for us to change the shell and build a system of any radix from the same building blocks.
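As a rough illustration of sizing a chassis of a different radix from the same parts, the Python sketch below counts how many aggregation (fabric) elements a given number of line cards needs. It assumes the port counts described earlier (16x40GE front and 16x40GE back per line card, 32x40GE back per fabric element). The assumption that the standard chassis holds 8 line cards is not stated in this post; under it, the sketch reproduces the 12-element total. `size_chassis` is a hypothetical helper.

```python
import math

FRONT_PORTS_PER_LINE_CARD = 16      # 16x40GE to the front
BACK_PORTS_PER_LINE_CARD = 16       # 16x40GE toward the backplane
BACK_PORTS_PER_FABRIC_ELEMENT = 32  # aggregation config: all 1.28T to the back

def size_chassis(line_cards: int) -> dict:
    """Estimate the element count for a chassis with the given number of line cards."""
    uplinks = line_cards * BACK_PORTS_PER_LINE_CARD
    # Enough fabric elements to terminate every line-card uplink.
    fabric_elements = math.ceil(uplinks / BACK_PORTS_PER_FABRIC_ELEMENT)
    return {
        "front_40ge_ports": line_cards * FRONT_PORTS_PER_LINE_CARD,
        "fabric_elements": fabric_elements,
        "total_elements": line_cards + fabric_elements,
    }

standard = size_chassis(8)  # assumed 8 line cards: 128 front ports, 12 elements
half = size_chassis(4)      # a hypothetical smaller shell
```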

Below you can see the high-level “6-pack” block diagram and the internal network data path topology we picked for the “6-pack” system.

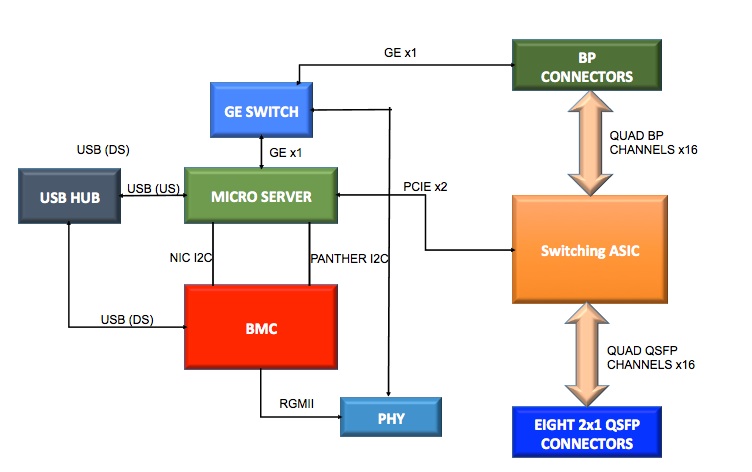

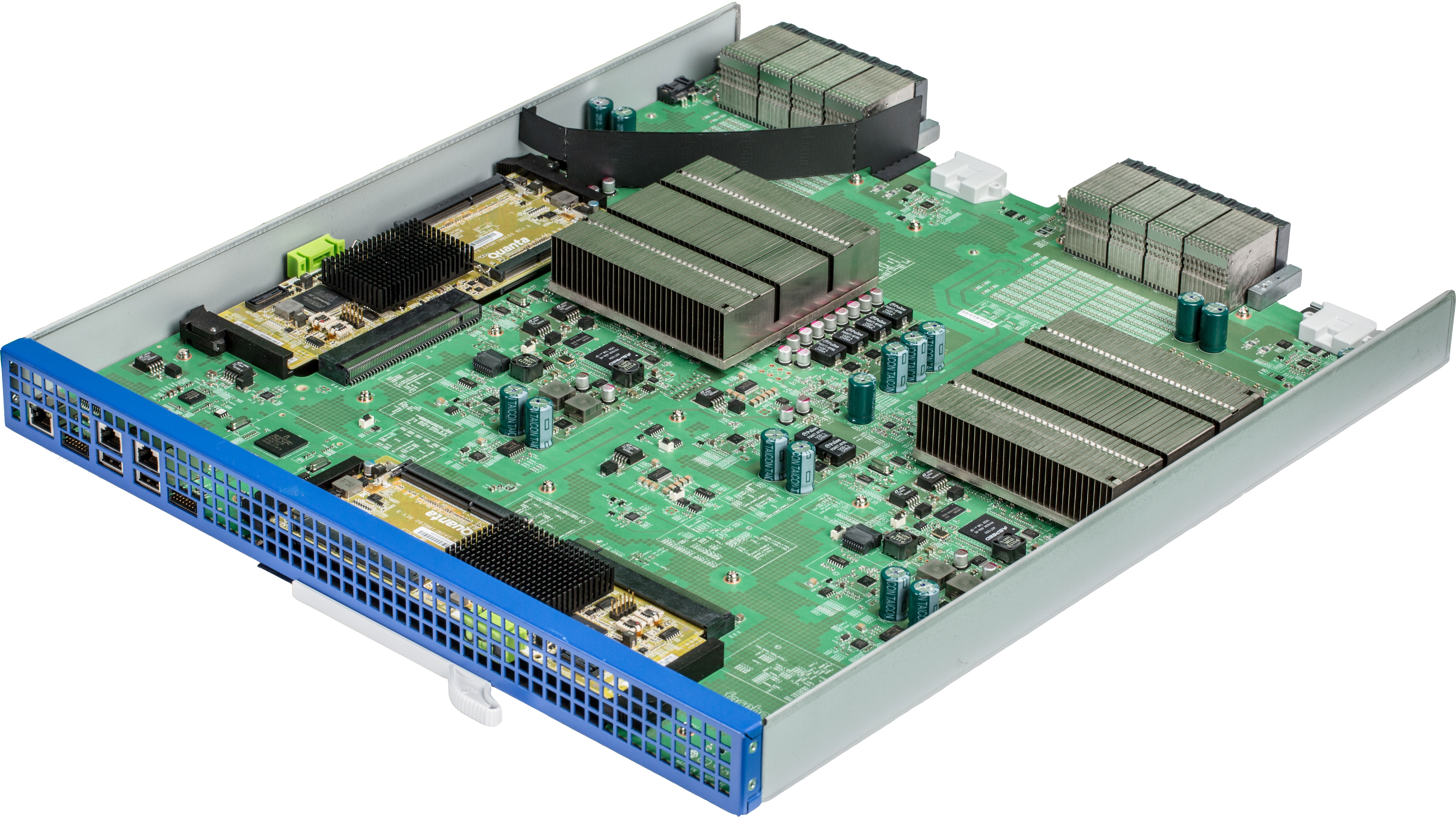
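The internal data path is a two-stage full mesh: every line-card element connects to every fabric element. The Python sketch below builds such a mesh and checks that the port budgets balance. It assumes 8 line cards and 4 fabric elements (two fabric cards with two elements each) and spreads each line card's 16 back-facing ports evenly across the fabric elements; the actual backplane wiring in the diagrams may differ, and `build_mesh` is a hypothetical helper.

```python
from collections import Counter
from itertools import product

def build_mesh(line_cards: int, fabric_elements: int, uplinks_per_lc: int = 16):
    """Return one (line_card, fabric_element) tuple per 40GE link in a full mesh."""
    if uplinks_per_lc % fabric_elements:
        raise ValueError("uplinks must divide evenly across fabric elements")
    links_per_pair = uplinks_per_lc // fabric_elements
    links = []
    # Full mesh: every line card gets the same number of links to every fabric element.
    for lc, fe in product(range(line_cards), range(fabric_elements)):
        links.extend([(f"lc{lc}", f"fe{fe}")] * links_per_pair)
    return links

links = build_mesh(line_cards=8, fabric_elements=4)
per_pair = Counter(links)                     # links between each (lc, fe) pair
fabric_load = Counter(fe for _, fe in links)  # ports used on each fabric element
```

With these assumptions each line card has 4 links to each fabric element, and each fabric element uses exactly its 32 back-facing ports, so any two line cards are two hops apart through any of the 4 fabric elements.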

The line card

If you’re familiar with “Wedge,” you probably recognize the central switching element used on that platform as a standalone system using only 640G of its switching capacity. On the “6-pack” line card we reused all of the “Wedge” development work (hardware and software) and simply added the backside 640Gbps Ethernet-based interconnect. The line card has an integrated switching ASIC, a microserver, and server support logic, which make it completely independent and let us manage it like a server.

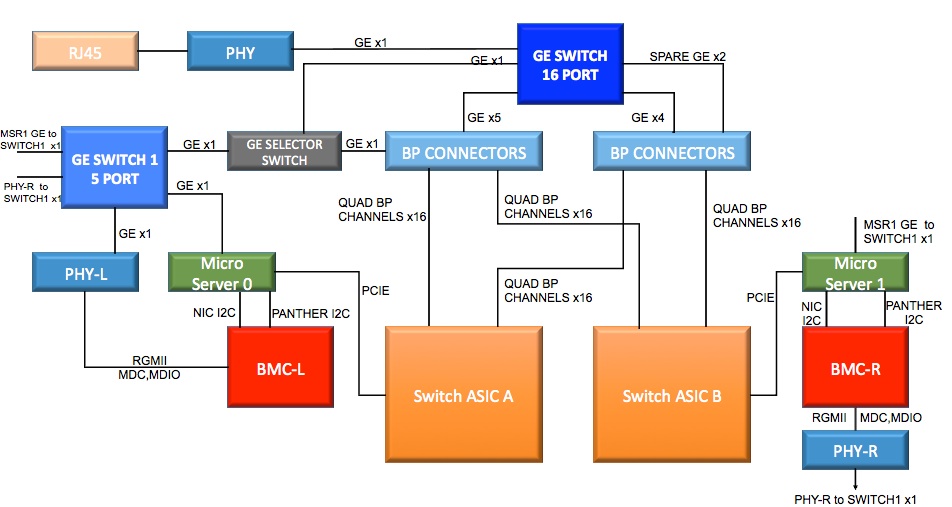

The fabric card

The fabric card is a combination of two line cards facing the back of the system. It creates the full mesh locally on the fabric card, which in turn enables a very simple backplane design. For convenience, the fabric card also aggregates the out-of-band management network, exposing an external interface for all line cards and fabric cards.

Bringing it together

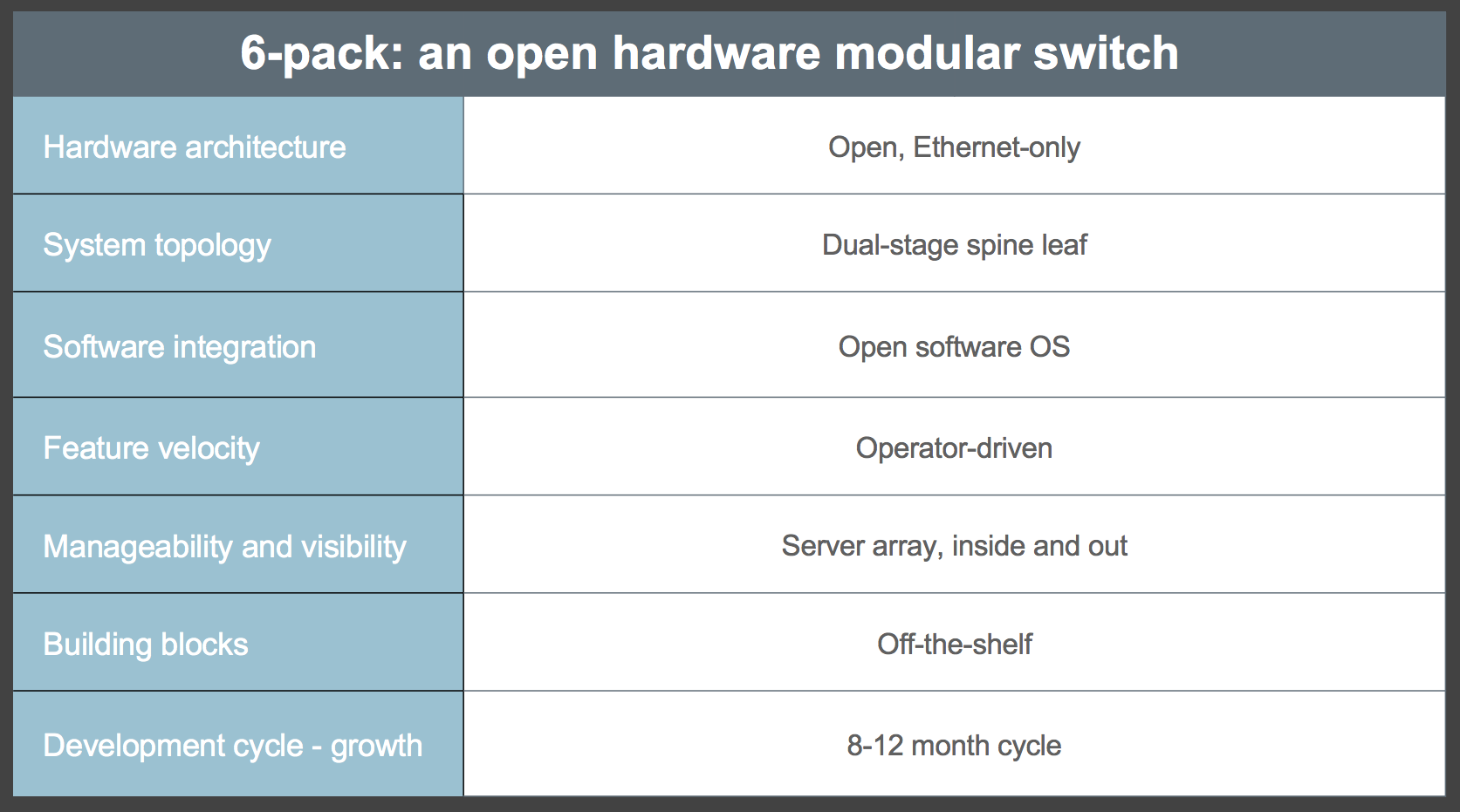

With “6-pack,” we have created an architecture that enables us to build switches of any size using a simple set of common building blocks. And because the design is so open and so modular – and so agnostic when it comes to switching technology and software – we hope this is a platform that the entire industry can build on. Here’s what we think separates “6-pack” from the traditional approaches to modular switches:

“6-pack” is already in production testing, alongside “Wedge” and “FBOSS.” We plan to propose the “6-pack” design as a contribution to the Open Compute Project, and we will continue working with the OCP community to develop open network technologies that are more flexible, more scalable, and more efficient.

Thanks to the entire Facebook team who have contributed to the development of “6-pack,” “Wedge,” “FBOSS,” and “fabric.”