Last year at the OCP Summit we released OpenBMC, low-level board management software that enables flexibility and speed in feature development for BMC chips. Wedge, our open source top-of-rack switch, was the first hardware in our data centers to be powered by OpenBMC, followed by our modular switch, 6-pack. Six months ago, we extended OpenBMC support to servers, which changed the way we approached board management for multi-node 1S servers. Today, I’m excited to announce OpenBMC for storage. More specifically, it will support Lightning, our new NVMe-based storage platform, and this extends the software across all hardware in Facebook’s data centers.

Why OpenBMC on Lightning?

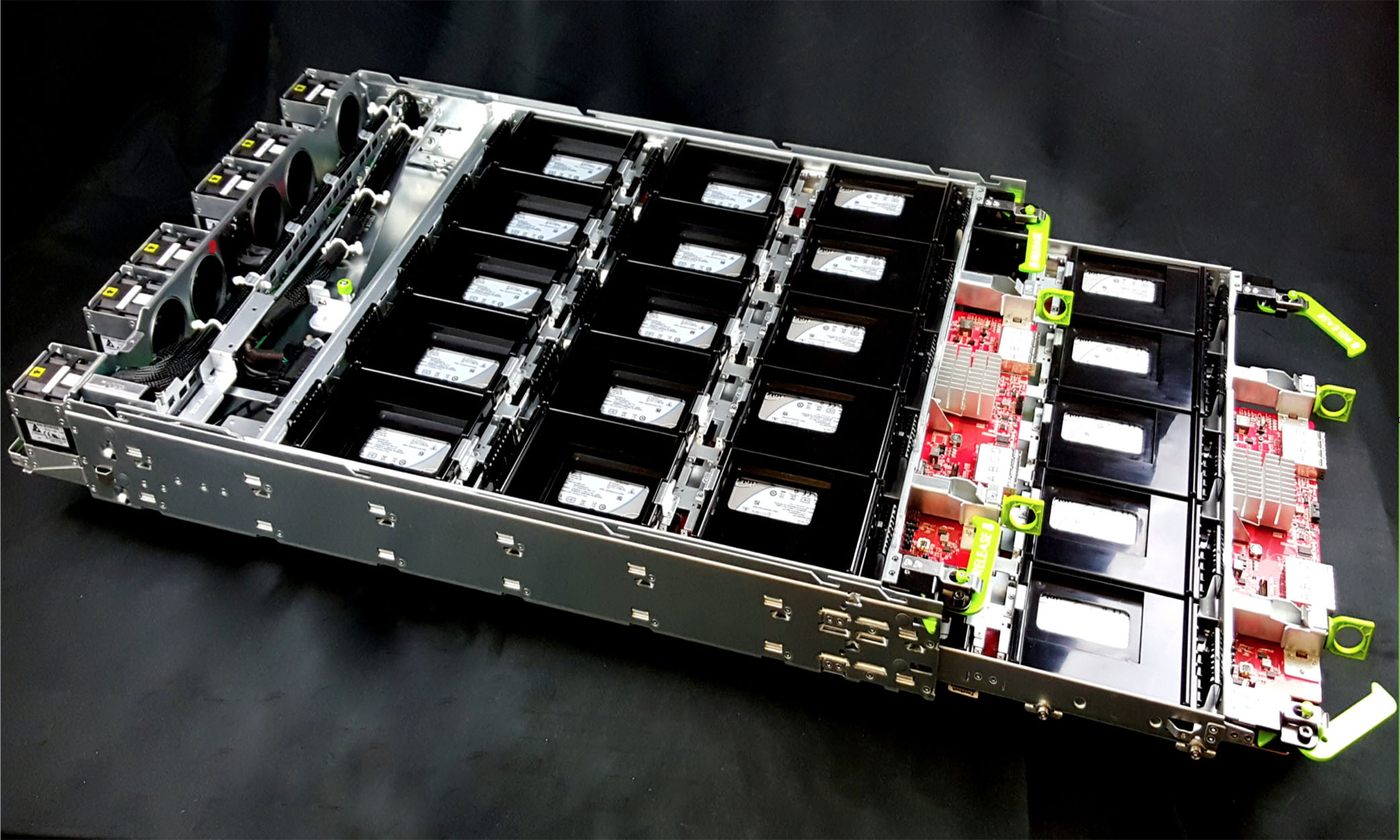

Lightning is a PCIe version of the Open Vault storage solution that’s built for the Open Compute Project’s Open Rack. It supports up to 60 solid-state drives (SSDs) connected via PCIe Gen3 links to a single external host or to as many as four hosts (servers). Each of the two trays has a PCIe switch that supports up to two x16 upstream ports, for a total of 32 upstream lanes, and up to 30 SSDs downstream of the switch (x2 to each device).
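
As a rough check on the lane budget described above, the following sketch works out the per-tray upstream and downstream bandwidth. The lane counts come from the text; the per-lane figure is the standard PCIe Gen3 rate (8 GT/s with 128b/130b encoding). It ignores packet and protocol overhead, so the results are upper bounds rather than measured throughput, and the function names are purely illustrative.

```python
# Back-of-the-envelope lane budget for one Lightning tray.
# Lane counts are taken from the article; bandwidth is a theoretical
# upper bound that ignores PCIe packet and protocol overhead.

GEN3_GT_PER_S = 8.0               # PCIe Gen3 raw rate per lane (GT/s)
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding

UPSTREAM_PORTS = 2
LANES_PER_UPSTREAM_PORT = 16
SSDS_PER_TRAY = 30
LANES_PER_SSD = 2


def lane_bandwidth_gbytes() -> float:
    """Usable bandwidth of one Gen3 lane in one direction, in GB/s."""
    return GEN3_GT_PER_S * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def tray_budget() -> dict:
    """Summarize upstream/downstream lanes and bandwidth for one tray."""
    upstream_lanes = UPSTREAM_PORTS * LANES_PER_UPSTREAM_PORT
    downstream_lanes = SSDS_PER_TRAY * LANES_PER_SSD
    per_lane = lane_bandwidth_gbytes()
    return {
        "upstream_lanes": upstream_lanes,
        "downstream_lanes": downstream_lanes,
        "upstream_gbytes": upstream_lanes * per_lane,
        "downstream_gbytes": downstream_lanes * per_lane,
        # How many downstream lanes share each upstream lane.
        "oversubscription": downstream_lanes / upstream_lanes,
    }


if __name__ == "__main__":
    for key, value in tray_budget().items():
        print(f"{key}: {value:.2f}" if isinstance(value, float) else f"{key}: {value}")
```

The downstream side has more lanes than the upstream side, so the switch is oversubscribed when every SSD is busy at once; the ratio gives a quick sense of by how much.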

The NVMe standard is relatively new, and there is not yet a well-defined enclosure management standard for NVMe comparable to SCSI Enclosure Services (SES) for SCSI. The Lightning design therefore needed a controller not only for chassis management but also for enclosure management. Each tray has one BMC connected to the PCIe switch via both an I2C interface and a PCIe connection.

OpenBMC interface

The BMC design on the Lightning platform is not a traditional one. A BMC usually relies on a sideband network connection (NC-SI) from the NIC. The Lightning BMC design does not have a NIC, and adding one would introduce challenges on the management side: the Lightning chassis would need to be managed as an individual system rather than as part of the server system, and all the security and provisioning tools would have to run on the Lightning BMC’s low-compute CPU. These challenges surface other concerns in the infrastructure.

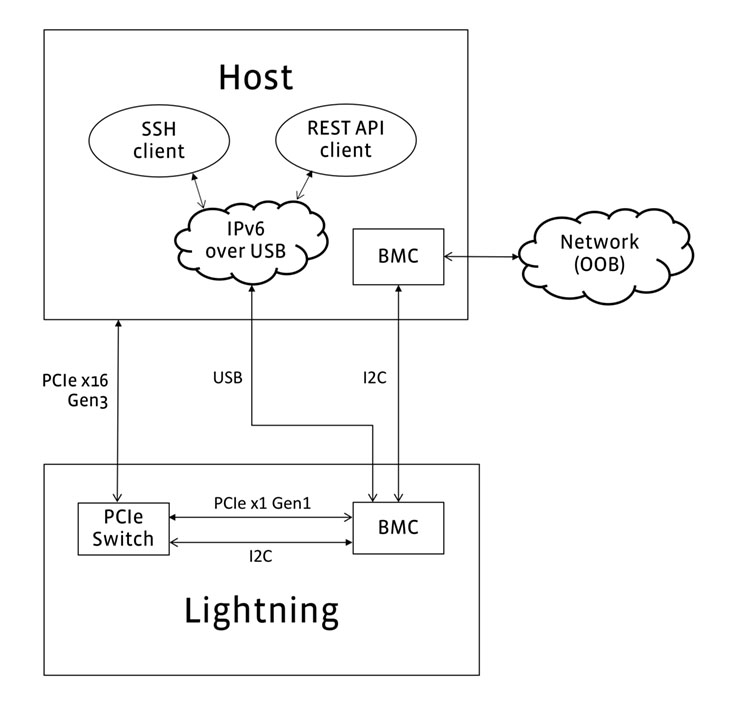

Instead of the network connection sideband interface, we use the following interfaces to access OpenBMC on Lightning:

- USB bus from Host to Lightning BMC (In-band only)

- I2C bus from Host BMC to Lightning BMC

- PCIe x1 (Gen1) from Host to Lightning BMC (In-band only)

USB interface

The USB channel runs from the Host USB to the Lightning BMC USB over a custom Mini-SAS HD cable. OpenBMC uses the Ethernet-over-USB interface to connect the Host USB and the Lightning BMC USB device. This enables the Host OS to communicate with OpenBMC via SSH and REST API clients. This connection will be the primary mode of communication between the Host and the Lightning BMC.

I2C interface

The Lightning BMC is directly connected to one or more Host BMCs via I2C, although in our current Lightning design we plan to use a single host. The I2C bus between the two BMCs is used as an IPMB channel. The Host BMC software uses the IPMI standard message bridging mechanism. This “bridge” function can receive IPMB commands in-band and/or out-of-band, send them to the Lightning BMC on the IPMB channel, and return the response. As a result, the Lightning BMC can be accessed in-band from the host and even out-of-band when the host CPU is powered off.
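
To make the bridging step more concrete, here is a minimal sketch of how an IPMB request frame is laid out before it is written to the I2C bus. The frame layout and the two's-complement checksums follow the IPMB specification. The addresses in the example (0x20 for the responder, 0x81 for the requester) are placeholders and not Lightning's actual addresses, and the function names are hypothetical rather than part of OpenBMC.

```python
# Minimal sketch of IPMB request framing (not OpenBMC code).
# Frame: rsAddr, netFn/rsLUN, checksum1, rqAddr, rqSeq/rqLUN, cmd, data..., checksum2


def ipmb_checksum(data: bytes) -> int:
    """Two's-complement checksum: covered bytes plus checksum sum to 0 mod 256."""
    return (-sum(data)) & 0xFF


def build_ipmb_request(
    rs_addr: int,
    netfn: int,
    cmd: int,
    payload: bytes = b"",
    rq_addr: int = 0x81,   # placeholder requester address (assumption)
    rq_seq: int = 0,
    rs_lun: int = 0,
    rq_lun: int = 0,
) -> bytes:
    """Build one IPMB request frame addressed to the responder at rs_addr."""
    if not 0 <= netfn <= 0x3F or not 0 <= rq_seq <= 0x3F:
        raise ValueError("netfn and rq_seq are 6-bit fields")
    if not 0 <= rs_lun <= 3 or not 0 <= rq_lun <= 3:
        raise ValueError("LUNs are 2-bit fields")

    # First part: responder address and network function, then checksum1.
    header = bytes([rs_addr, (netfn << 2) | rs_lun])
    # Second part: requester address, sequence number, command, data, then checksum2.
    body = bytes([rq_addr, (rq_seq << 2) | rq_lun, cmd]) + payload
    return header + bytes([ipmb_checksum(header)]) + body + bytes([ipmb_checksum(body)])


if __name__ == "__main__":
    # Get Device ID: NetFn App (0x06), command 0x01, no request data.
    frame = build_ipmb_request(rs_addr=0x20, netfn=0x06, cmd=0x01, rq_seq=1)
    print(frame.hex())
```

A bridge on the Host BMC would wrap a frame like this when forwarding a command and match the response to the request using the sequence number.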

PCIe interface

There is a single PCIe x1 Gen1 link downstream from the PCIe switch to the BMC. We plan to add support for the Management Component Transport Protocol (MCTP) in OpenBMC.

Features

During the development of previous platforms, we moved all of the common utility code to a common directory (meta-openbmc/common/), which allows us to add support for more platforms in OpenBMC with ease. We have reused most of the common utilities, including ‘fruid-util’, ‘sensor-util’, and ‘gpio-util’. We have also added support for new features, such as:

- ‘fscd’, a daemon that controls the fans, based on OCP FSC (Fan Speed Control). It supports both linear and nonlinear FSC tables provided by the user.

- ‘log-util’, which captures all the event/error logs from the secondary devices (sensors, SSDs, PCIe switch, etc.) connected to the Lightning BMC.

- ‘flash-util’, which reads the SSDs’ sideband status information (such as temperature and error count) based on the NVMe Management Interface standard (NVMe-MI), can reset the PCIe switch, and can update the switch’s firmware and configuration over I2C.
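
The difference between the linear and nonlinear FSC tables mentioned for ‘fscd’ can be illustrated with a small sketch. This is not the fscd implementation or its configuration format: the table shapes, function names, and temperature and PWM values are all invented for illustration. A linear table is treated here as a single ramp between two points, and a nonlinear table as a list of temperature thresholds with a fixed PWM value per step.

```python
# Illustrative only: hypothetical table formats, not fscd's real ones.
from bisect import bisect_right


def linear_pwm(temp_c: float, t_min: float, t_max: float,
               pwm_min: float, pwm_max: float) -> float:
    """Ramp PWM linearly from pwm_min at t_min to pwm_max at t_max, clamped."""
    if temp_c <= t_min:
        return pwm_min
    if temp_c >= t_max:
        return pwm_max
    fraction = (temp_c - t_min) / (t_max - t_min)
    return pwm_min + fraction * (pwm_max - pwm_min)


def stepped_pwm(temp_c: float, table: list, floor_pwm: float) -> float:
    """Return the PWM of the highest threshold at or below temp_c.

    `table` is a list of (threshold_c, pwm) pairs sorted by threshold.
    Below the first threshold, floor_pwm is used.
    """
    thresholds = [threshold for threshold, _ in table]
    index = bisect_right(thresholds, temp_c)
    return floor_pwm if index == 0 else table[index - 1][1]


if __name__ == "__main__":
    steps = [(30, 40), (40, 70), (50, 100)]  # made-up thresholds (°C) and PWM (%)
    for temp in (25, 35, 45, 60):
        print(temp, linear_pwm(temp, 25, 45, 20, 100), stepped_pwm(temp, steps, 20))
```

A stepped table lets the user hold the fans at a quiet level across a wide temperature band and then jump sharply near a limit, which a single linear ramp cannot express.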

Note: Lightning hardware and the OpenBMC features for Lightning are still in the development phase. Most of the above features are accessible over SSH or the RESTful API using the IPv6-over-USB interface.

Use in Facebook infrastructure

With the addition of OpenBMC for storage, we will have OpenBMC support for all types of hardware in our infrastructure. Provisioning in the data center has always been a challenge with hardware from different manufacturers because of their proprietary tools. These tools also limit how flexibly hardware can be configured. OpenBMC, on the other hand, brings uniformity across all the tools and event/error logs, which will open opportunities to improve hardware management and hardware Fault Analysis (FA). Running OpenBMC across network, compute, and storage platforms is another step in building a more flexible and reliable infrastructure.

If you are still curious about OpenBMC or have questions regarding any of the features, you can check out our latest updates on GitHub.

In an effort to be more inclusive in our language, we have edited this post to replace the terms “master” and “slave” with “primary” and “secondary”.