Can a computer distinguish between the many objects in a photograph as effortlessly as the human eye?

When humans look at an image, they can identify objects down to the last pixel. At Facebook AI Research (FAIR) we're pushing machine vision to the next stage — our goal is to similarly understand images and objects at the pixel level.

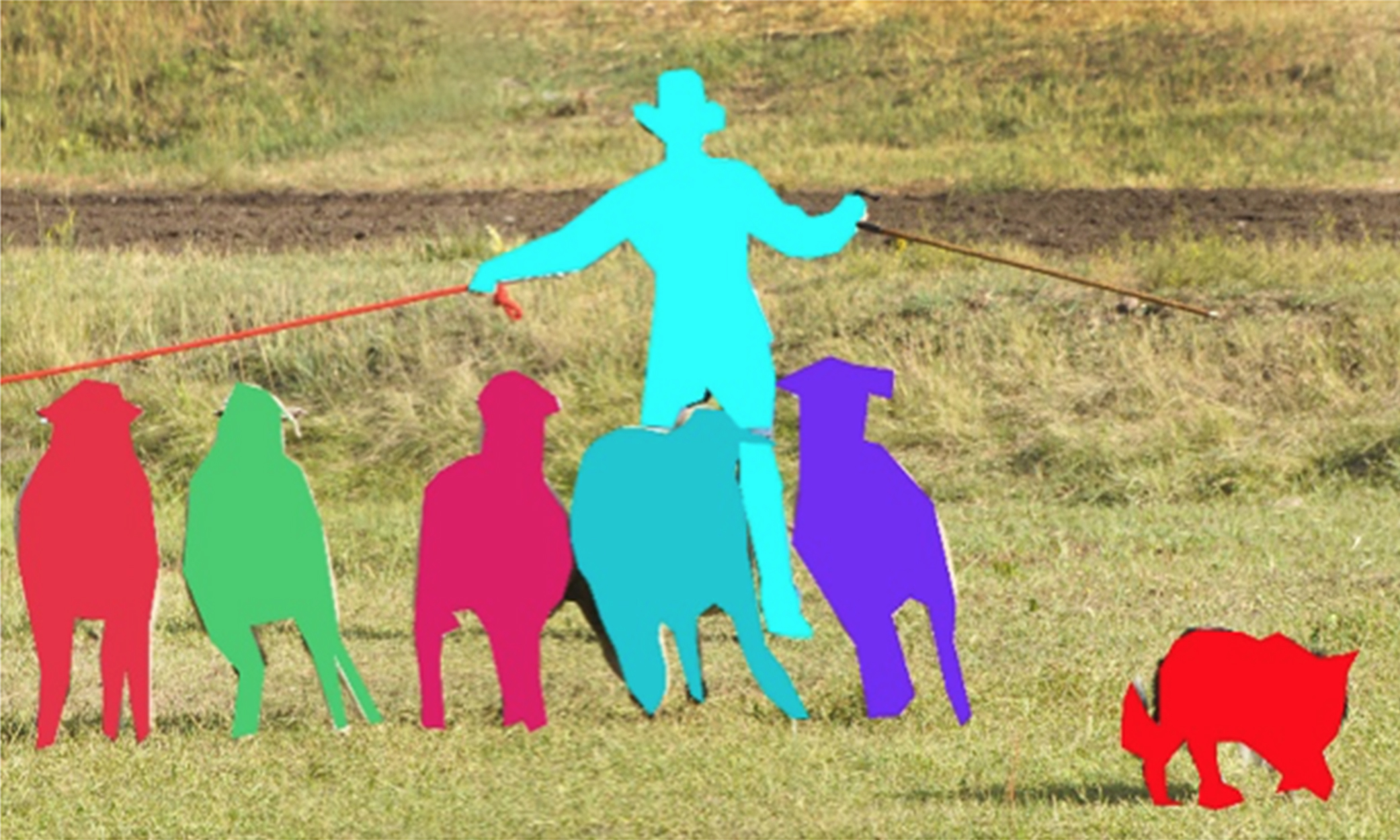

Over the past few years, progress in deep convolutional neural networks and the advent of ever more powerful computing architectures have led to rapid gains in the accuracy and capabilities of machine vision systems. We've witnessed massive advances in image classification (what is in the image?) as well as object detection (where are the objects?). See panels (a) and (b) in the image below. But this is just the beginning of understanding the most relevant visual content of any image or video. Recently we've been designing techniques that identify and segment each and every object in an image, as in the rightmost panel (c) of the image below, a key capability that will enable entirely new applications.

The main new algorithms driving our advances are the DeepMask [1] segmentation framework coupled with our new SharpMask [2] segment refinement module. Together, they have enabled FAIR's machine vision systems to detect and precisely delineate every object in an image. The final stage of our recognition pipeline uses a specialized convolutional net, which we call MultiPathNet [3], to label each object mask with the object type it contains (e.g. person, dog, sheep). We will return to the details shortly.

We're making the code for DeepMask+SharpMask as well as MultiPathNet — along with our research papers and demos related to them — open and accessible to all, with the hope that they'll help rapidly advance the field of machine vision. As we continue improving these core technologies we'll continue publishing our latest results and updating the open source tools we make available to the community.

Finding patterns in the pixels

Let's take a look at the building blocks of these algorithms.

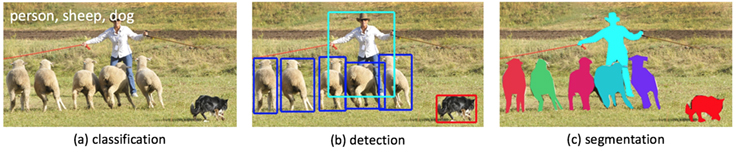

Glance at the first photo below, the one on the left. What do you see? A photographer operating his old-school camera. A grassy field. Buildings in the background. And you probably notice countless other details as well. A machine sees none of this; an image is encoded as an array of numbers representing color values for each pixel, as in the second photo, the one on the right. So how do we enable machine vision to go from pixels to a deeper understanding of an image?

It's not an easy task, due to the near-infinite variability of objects and scenes in real-world settings. Objects vary in their shapes and appearances, their sizes and positions, their textures and colors. Couple this with the inherent complexity of real scenes, variable backgrounds and lighting conditions, and the general richness of our world, and you can start to see why the problem is so difficult for machines.

Enter deep convolutional neural networks. Rather than relying on hand-written, rule-based systems for object detection, we use deep nets: relatively simple architectures with tens of millions of parameters that are trained rather than designed. These networks automatically learn patterns from millions of annotated examples and, having seen enough such examples, start to generalize to novel images. Deep nets are particularly adept at answering yes/no questions about an image (classification). For example, does an image contain a sheep?

Segmenting objects

So how do we employ deep networks for detection and segmentation? The technique we use in DeepMask is to think of segmentation as a very large number of binary classification problems. First, for every (overlapping) patch in an image we ask: Does this patch contain an object? Second, if the answer to the first question is yes for a given patch, then for every pixel in the patch we ask: Is that pixel part of the central object in the patch? We use deep networks to answer each yes/no question, and by cleverly designing our networks so that computation is shared for every patch and every pixel, we can quickly discover and segment all the objects in an image.
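To make the two questions concrete, here is a minimal sketch in plain Python. It is not the DeepMask implementation: the functions `contains_object` and `central_object_mask` are hypothetical stand-ins for the trained networks (a threshold on the centre pixel and a flood fill, respectively), the toy image is invented, and nothing here shares computation across patches the way the real system does.

```python
def extract_patches(image, size, stride):
    """Yield (top, left, patch) for every overlapping size x size window."""
    height, width = len(image), len(image[0])
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            patch = [row[left:left + size] for row in image[top:top + size]]
            yield top, left, patch


def contains_object(patch, threshold=0.5):
    """Question 1 (stand-in): does the patch contain a centred object?

    A trained network would answer this; here we only check the centre pixel.
    """
    centre = len(patch) // 2
    return patch[centre][centre] > threshold


def central_object_mask(patch, threshold=0.5):
    """Question 2 (stand-in): which pixels belong to the central object?

    Flood fill from the centre over pixels above the threshold.
    """
    size = len(patch)
    mask = [[0] * size for _ in range(size)]
    stack = [(size // 2, size // 2)]
    while stack:
        r, c = stack.pop()
        if not (0 <= r < size and 0 <= c < size):
            continue
        if mask[r][c] or patch[r][c] <= threshold:
            continue
        mask[r][c] = 1
        stack.extend([(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)])
    return mask


def propose_masks(image, size=3, stride=1):
    """Ask question 1 for every patch, and question 2 only where it says yes."""
    proposals = []
    for top, left, patch in extract_patches(image, size, stride):
        if contains_object(patch):
            proposals.append((top, left, central_object_mask(patch)))
    return proposals


# Invented 4x4 toy image: one L-shaped object plus an isolated pixel.
toy_image = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
]
proposals = propose_masks(toy_image)
```

Each proposal pairs a patch location with a per-pixel mask, which is the shape of output the paragraph above describes.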

DeepMask employs a fairly traditional feedforward deep network design. In such networks, information becomes more abstract and semantically meaningful at progressively deeper stages. For example, early layers in a deep net might capture edges and blobs, while upper layers tend to capture more semantic concepts such as the presence of an animal's face or limbs. By design, these upper-layer features are computed at a fairly low spatial resolution (for both computational reasons and in order to be invariant to small shifts in pixel locations). This presents a problem for mask prediction: The upper-layer features can be used to predict masks that capture the general shape of an object but fail to precisely capture object boundaries.

Which brings us to SharpMask. SharpMask refines the output of DeepMask, generating higher-fidelity masks that more accurately delineate object boundaries. While DeepMask predicts coarse masks in a feedforward pass through the network, SharpMask reverses the flow of information in a deep network and refines the predictions made by DeepMask by using features from progressively earlier layers in the network. Think of it this way: To capture general object shape, you have to have a high-level understanding of what you are looking at (DeepMask), but to accurately place the boundaries you need to look back at lower-level features all the way down to the pixels (SharpMask). In essence, we aim to make use of information from all layers of a network, with minimal additional overhead.
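The top-down idea can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the coarse mask and the feature map are invented toy values, and the merge in `refine_step` is a plain average, whereas SharpMask learns how to combine the two. All function names are hypothetical.

```python
def upsample2x(grid):
    """Nearest-neighbour upsampling: every cell becomes a 2x2 block."""
    out = []
    for row in grid:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def refine_step(coarse_mask, features):
    """Upsample the coarse mask and merge it with same-sized lower-level features.

    Stand-in merge: a plain average (the real refinement is learned).
    """
    upsampled = upsample2x(coarse_mask)
    return [
        [(m + f) / 2 for m, f in zip(mask_row, feature_row)]
        for mask_row, feature_row in zip(upsampled, features)
    ]


def refine(coarse_mask, feature_maps):
    """Apply one refinement step per feature map.

    feature_maps must be ordered from deeper (low resolution) to earlier
    (high resolution) layers, each twice the resolution of the previous mask.
    """
    mask = coarse_mask
    for features in feature_maps:
        mask = refine_step(mask, features)
    return mask


def binarize(mask, threshold=0.5):
    """Turn soft mask values into a 0/1 mask."""
    return [[1 if value > threshold else 0 for value in row] for row in mask]


# Invented example: a 1x2 coarse mask says "object on the left half".
coarse = [[1.0, 0.0]]
# Invented higher-resolution features hinting at where the boundary really is.
early_features = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
]
sharp = binarize(refine(coarse, [early_features]))
```

In this toy case the coarse mask alone would mark the whole left half, while the refined mask drops the one pixel that the lower-level features do not support.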

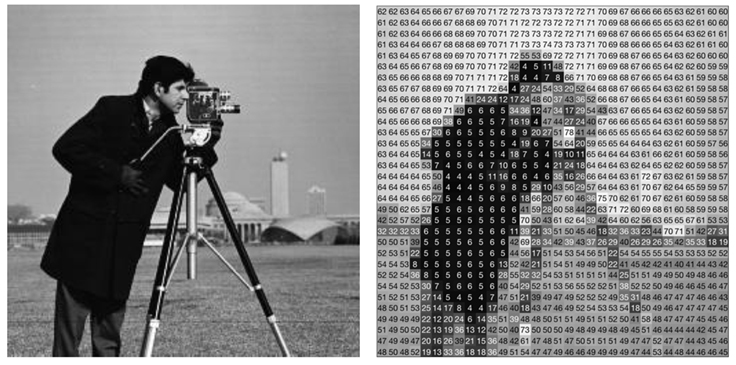

Below are some example outputs generated by DeepMask and refined by SharpMask. To keep the visualizations simple we only show predicted masks that best align to the objects actually present in the images (as annotated by humans). Note that the system is not perfect yet: objects with red outlines are those annotated by humans but missed by DeepMask.

Classifying objects

DeepMask knows nothing about specific object types, so while it can delineate both a dog and a sheep, it can't tell them apart. Plus, DeepMask is not very selective and can generate masks for image regions that are not especially interesting. So how do we narrow down the pool of relevant masks and identify the objects that are actually present?

As you might expect, we turn to deep neural networks once again. Given a mask generated by DeepMask, we train a separate deep net to classify the object type of each mask (and “none” is a valid answer as well). Here we are following the foundational paradigm called Region-CNN, or RCNN for short, pioneered by Ross Girshick (now also a member of FAIR). RCNN is a two-stage procedure where a first stage is used to draw attention to certain image regions, and in a second stage a deep net is used to identify the objects present. When RCNN was developed, the first-stage processing available was fairly primitive. By using DeepMask as the first stage for RCNN and exploiting the power of deep networks we get a significant boost in detection accuracy and also gain the ability to segment objects.
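The two-stage structure can be sketched as follows. This is a hedged illustration, not RCNN itself: `score_fn` stands in for the second-stage deep net, the proposals are just hypothetical mask identifiers, the scores are invented, and the 0.5 cut-off for answering “none” is an arbitrary assumption.

```python
def classify_region(class_scores, none_threshold=0.5):
    """Pick the best-scoring label, or "none" if no class is confident enough."""
    label, score = max(class_scores.items(), key=lambda item: item[1])
    if score < none_threshold:
        return "none", score
    return label, score


def detect(proposals, score_fn, none_threshold=0.5):
    """Stage 2: classify every stage-1 proposal and drop the "none" answers."""
    detections = []
    for region in proposals:
        label, score = classify_region(score_fn(region), none_threshold)
        if label != "none":
            detections.append((region, label, score))
    return detections


# Invented per-mask class scores standing in for a trained classifier.
toy_scores = {
    "mask_a": {"dog": 0.9, "sheep": 0.05},
    "mask_b": {"dog": 0.2, "sheep": 0.7},
    "mask_c": {"dog": 0.1, "sheep": 0.2},  # uninteresting region
}
detections = detect(list(toy_scores), toy_scores.get)
```

Here `mask_c` is discarded because no class clears the threshold, which mirrors how the classifier narrows down the pool of masks from the first stage.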

To further boost performance, we also focused on using a specialized network architecture to classify each mask (the second stage of RCNN). As we discussed, real-world photographs contain objects at multiple scales, in context and among clutter, and under frequent occlusion. Standard deep nets can have difficulty in such situations. To address this, we proposed a modified network that we call MultiPathNet. As its name implies, MultiPathNet allows information to flow along multiple paths through the net, enabling it to exploit information at multiple image scales and in surrounding image context.
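One simple way to picture "surrounding image context" is to look at the same object through several progressively larger windows. The sketch below computes such windows for a bounding box; it illustrates the idea only and does not reproduce the MultiPathNet architecture. The function names and the scale factors are assumptions chosen for the example.

```python
def context_box(box, scale, image_width, image_height):
    """Grow a box (x0, y0, x1, y1) about its centre by `scale`, clipped to the image."""
    x0, y0, x1, y1 = box
    centre_x, centre_y = (x0 + x1) / 2, (y0 + y1) / 2
    half_w = (x1 - x0) * scale / 2
    half_h = (y1 - y0) * scale / 2
    return (
        max(0, centre_x - half_w),
        max(0, centre_y - half_h),
        min(image_width, centre_x + half_w),
        min(image_height, centre_y + half_h),
    )


def multi_scale_views(box, image_width, image_height, scales=(1.0, 1.5, 2.0)):
    """Return one window per scale: the object itself plus widening context."""
    return [context_box(box, s, image_width, image_height) for s in scales]


# Invented example: a 20x20 object in the middle of a 100x100 image.
views = multi_scale_views((40, 40, 60, 60), image_width=100, image_height=100)
```

A classifier that sees all of these views can use both the tight crop and the surroundings, which is the intuition behind combining multiple scales and context.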

In summary, our object detection system follows a three-stage procedure: (1) DeepMask generates initial object masks, (2) SharpMask refines these masks, and finally (3) MultiPathNet identifies the objects delineated by each mask. Here are some example outputs of our complete system:

Not perfect but not too shabby given that the technology to do this simply didn't exist a few short years ago!

Wide-ranging uses

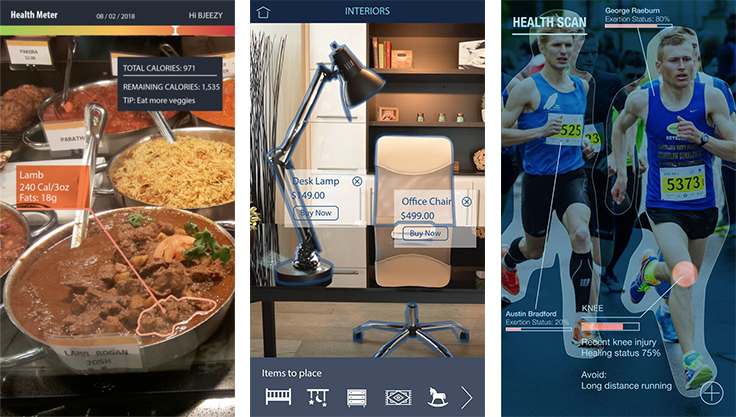

There are wide-ranging potential uses for visual recognition technology. Building on existing computer vision technology to let computers recognize objects in photos will, for instance, make it easier to search for specific images without an explicit tag on each photo. People with vision loss, too, will be able to understand what is in a photo their friends share because the system will be able to tell them, regardless of the caption posted alongside the image.

Most recently we demonstrated technology we're developing for blind users that will assess photos and describe their content. Currently, visually impaired users browsing photos on Facebook only hear the name of the person who shared the photo, followed by the term “photo,” when they come upon an image in their News Feed. Instead we aim to offer richer descriptions, such as “Photo contains beach, trees, and three smiling people.” Furthermore, leveraging the segmentation technology we've been developing, our goal is to enable even more immersive experiences that allow users to “see” a photo by swiping their finger across an image and having the system describe the content they're touching.

As we move forward, we will continue to improve our detection and segmentation algorithms. You can imagine these detection, segmentation, and identification capabilities one day being applied to augmented reality in areas such as commerce and health.

In addition, our next challenge will be to apply these techniques to video, where objects are moving, interacting, and changing over time. We've already made some progress with computer vision techniques to watch videos and understand and classify what's in them in real time. Real-time classification could help surface relevant and important Live videos on Facebook, while applying more refined techniques to detect scenes, objects, and actions over space and time could one day allow for real-time narration. We're excited to continue pushing the state of the art and providing better experiences on Facebook for everyone.

[1] DeepMask: Learning to Segment Object Candidates. Pedro O. Pinheiro, Ronan Collobert, Piotr Dollár (NIPS 2015)

[2] SharpMask: Learning to Refine Object Segments. Pedro O. Pinheiro, Tsung-Yi Lin, Ronan Collobert, Piotr Dollár (ECCV 2016)

[3] MultiPathNet: A Multipath Network for Object Detection. Sergey Zagoruyko, Adam Lerer, Tsung-Yi Lin, Pedro O. Pinheiro, Sam Gross, Soumith Chintala, Piotr Dollár (BMVC 2016)