This week we’re publishing a series of posts looking back at the technologies and advancements Facebook engineers introduced in 2017. Read the first part of the series about data centers here, and check back each day to learn about a different part of the stack.

Part of what makes our data centers some of the most advanced facilities in the world is the idea of disaggregation — decoupling hardware from software and breaking down the technologies into their core components. This allows us to recombine the technologies in new ways or upgrade components as new versions become available, ultimately enabling more flexible, scalable, and efficient systems.

We took this approach with the storage and compute servers we’ve designed and contributed to the Open Compute Project (OCP) over the years, and followed that by breaking open our networking stack the same way. This year we saw the broader networking community adopt the idea of disaggregation, as an ecosystem of software solutions grew around open network technologies. Companies that used our validation lab space to test their software on open hardware announced several solutions around our Wedge, Wedge 100, and Backpack platforms.

Wedge 100 and Backpack are two of the main components we deployed as we upgraded our data centers to run at 100 gigabits per second. The final piece was 100G optical connections for the networking fabric between the switches. This year we developed a 100G single-mode optical fiber with a transceiver optimized to fit our data center requirements, which saves on both cost and power consumption and supports future technology upgrades. We contributed the design to OCP and have begun laying the fiber in some of our data centers.

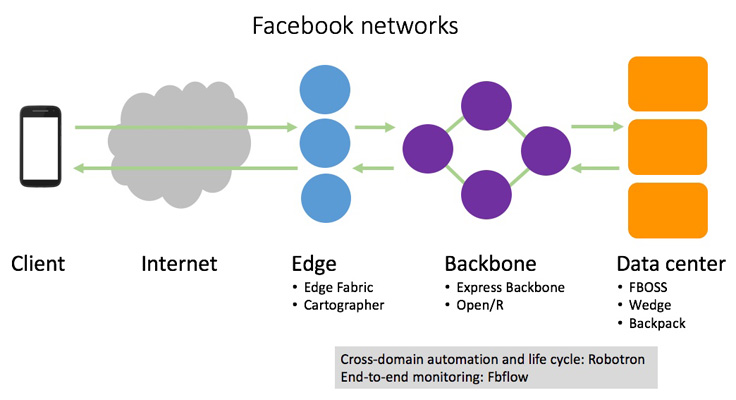

Although moving to 100G increases the capacity of our data centers, we still faced growing bandwidth demands from both internet-facing traffic and cross-data-center traffic as people share richer content like video and 360 photos. We decided to split our internal and external traffic into two separate networks and optimize them individually, so that traffic between data centers wouldn’t interfere with the reliability of our worldwide networks.

We built Express Backbone, a new central network for cross-data center traffic. Its hybrid traffic engineering approach controls some traffic flow centrally through intelligent path computations, while staying nimble enough to redirect traffic during an outage or congestion. We also shared details about our Edge Fabric, which steers user traffic from our points of presence (PoPs) all over the world, and FCR, a service that monitors the health of our network devices. Together, these technologies complete Facebook’s software-centric approach to its networking stack.
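To make the hybrid idea concrete, here is a minimal sketch of one way such a split could work. It is not Express Backbone's actual implementation: the function names (`central_compute`, `local_select`), the link-cost graph, and the choice of Dijkstra's algorithm are all assumptions made for illustration. A central step computes a primary path plus a link-disjoint backup, and a local step switches to the backup as soon as any link on the primary path fails, without waiting for the controller.

```python
import heapq


def shortest_path(graph, src, dst, down=frozenset()):
    """Dijkstra over graph {node: {neighbor: cost}}, skipping links in `down`."""
    dist = {src: 0}
    prev = {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nbr, cost in graph.get(node, {}).items():
            if frozenset((node, nbr)) in down:
                continue  # link excluded or failed
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


def links_of(path):
    """Undirected links used by a path, as a set of frozenset pairs."""
    return {frozenset(pair) for pair in zip(path, path[1:])}


def central_compute(graph, src, dst):
    """Hypothetical central step: primary path plus a link-disjoint backup."""
    primary = shortest_path(graph, src, dst)
    if primary is None:
        return None, None
    backup = shortest_path(graph, src, dst, down=frozenset(links_of(primary)))
    return primary, backup


def local_select(primary, backup, failed_links):
    """Hypothetical local step: use the backup if the primary touches a failed link."""
    if primary and not (links_of(primary) & failed_links):
        return primary
    return backup


# Illustrative four-site topology with made-up link costs.
example_graph = {
    "A": {"B": 1, "C": 2},
    "B": {"A": 1, "D": 1},
    "C": {"A": 2, "D": 2},
    "D": {"B": 1, "C": 2},
}
primary, backup = central_compute(example_graph, "A", "D")
active = local_select(primary, backup, {frozenset(("B", "D"))})
```

In this sketch the expensive computation happens once, centrally, while the failover decision is a cheap set intersection that can run on the device itself. That division of labor is the general shape of the trade-off described above.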

In addition to improving the capacity and reliability of our global networking infrastructure, Facebook’s connectivity teams this year reached new milestones toward our goal of bringing connectivity to people with little or no internet access. Our connectivity efforts are focused on business solutions, partnerships, and long-term technology initiatives designed to accelerate the expansion and improvement of connectivity around the world.

Through our connectivity programs and partnerships, we collaborate with internet service providers, carriers, and local entrepreneurs. In 2017, we launched or expanded Express Wi-Fi hotspots with partners in India, Indonesia, Kenya, and Nigeria. In addition, Free Basics is live with mobile operators around the world and offers more than 1,000 services.

The Telecom Infra Project (TIP) community continued its strong early momentum, growing to more than 500 member companies and 11 project groups. At the second annual TIP Summit in November, we saw active participation from leading operators including BT, Deutsche Telekom, Telefonica, and Vodafone.

We also announced that we are working with Airtel Uganda and the Bandwidth & Cloud Services Group (BCS) to build 770 km of fiber in northwestern Uganda that will provide backhaul connectivity covering more than 3 million people in Uganda and enable future cross-border connectivity to neighboring countries.

On the technology side, we continued our focus on developing next-generation systems that can be used together with partners to create flexible and extensible networks.

We completed the second test flight of our Aquila solar-powered aircraft, building on the lessons we learned from the first flight in 2016 to further demonstrate the viability of high-altitude connectivity. We set new records in wireless data transfer, achieving data rates of 36 Gb/s with millimeter-wave technology and 80 Gb/s with optical cross-link technology between two terrestrial points 13 km apart. We also demonstrated the first ground-to-air transmission at 16 Gb/s over 7 km. These technologies are designed to ultimately help connect people in remote regions where traditional infrastructure is too difficult or costly to install.

In dense urban environments, the connectivity challenges are speed and capacity. We’ve been working with the city of San Jose, California, to conduct an engineering test of Terragraph in the city’s downtown. Terragraph is our multi-node wireless system that provides high-speed connectivity by steering signals around buildings and other sources of interference. Our computer vision team helped us map the downtown area to find the node locations that provide the greatest coverage.

We also open-sourced Open/R, the routing platform originally developed for Terragraph that we now use in our backbone and data center networks.

All these advancements set the stage for bringing the benefits of connectivity to more people and delivering richer and more immersive experiences to people who are already online.

Next, we’ll explore some of the advances we made this year in machine learning and video infrastructure to power those experiences.