Virtual reality is an immersive technology that lets you experience anything, anywhere. However, it’s been difficult to share these experiences with people who aren’t physically with you—until now. We’ve created an easy way for you to capture and share your PC VR experiences through 360 photos and videos.

We solved the problem by rethinking the way 360 content is created. Typically, creating 360 content means capturing multiple photos, stitching them together, and then encoding the result. To do this for VR, we needed to capture the content inside a game engine while producing a high-quality image quickly on baseline VR hardware. The 360 Capture SDK makes that possible: VR experiences can be captured as 360 photos and videos instantly and then uploaded for viewing in News Feed or in a VR headset.

The 360 Capture SDK uses cube mapping instead of stitching, the traditional method for creating 360-degree spherical or gigapixel panoramic images. This brings three main benefits:

- Accessibility: People no longer need a supercomputer to capture their VR experience. Because cube mapping requires less computing power than stitching, the 360 Capture SDK works on baseline recommended hardware for VR without compromising the experience; the application still maintains the 90 frames per second (fps) that VR requires. Developers will also find the SDK plugin compatible with multiple game engines, such as Unity and Unreal, as well as native engines.

- Quality: People viewing captured 360 content in VR or in News Feed still get a high-quality experience. For example, the minimum resolution for a quality viewing experience is 1080p in News Feed and 4K in VR, and the SDK can capture at both resolutions.

- Speed: We maintain 90 fps performance on VR systems like Rift while capturing VR-quality 360 video at 30 fps, with each capture completing in less than one second.
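
The speed figures imply a simple frame budget: at 90 fps each VR frame has about 11.1 ms to render, and 30 fps video needs only every third rendered frame. A minimal sketch of that bookkeeping, assuming capture is triggered from the render loop; `CaptureScheduler` and its methods are hypothetical and not part of the 360 Capture SDK:

```python
from dataclasses import dataclass, field


@dataclass
class CaptureScheduler:
    """Decides which rendered VR frames are also sent to the 360 video encoder.

    Hypothetical helper: assumes the render rate is an integer multiple of the
    capture rate (for example 90 fps / 30 fps = every third frame).
    """

    render_fps: int = 90
    capture_fps: int = 30
    stride: int = field(init=False)

    def __post_init__(self) -> None:
        if self.render_fps % self.capture_fps != 0:
            raise ValueError("render_fps must be a multiple of capture_fps")
        self.stride = self.render_fps // self.capture_fps

    def should_capture(self, frame_index: int) -> bool:
        # Capture on frames 0, stride, 2 * stride, ...
        return frame_index % self.stride == 0

    def frame_budget_ms(self) -> float:
        # Time available to render (and, on capture frames, capture) one VR frame.
        return 1000.0 / self.render_fps


scheduler = CaptureScheduler()
captured = [i for i in range(9) if scheduler.should_capture(i)]
print(captured)  # [0, 3, 6]
print(round(scheduler.frame_budget_ms(), 1))  # 11.1
```

Whatever capture work happens on a capture frame has to fit inside that same ~11.1 ms budget, which is why an expensive step like stitching is hard to run in real time.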

This lets you capture game content in 360, easily create marketing videos from 360 captures of rendered content, and make VR experiences more social with features like VR 360 selfies.

Capturing photos and videos with 360 cameras

By using cube mapping, we made VR experiences accessible and viewable in high quality, with the additional benefit that content can be captured quickly. To get to this point, we focused on the three stages of a conventional 360 camera capture:

- Capturing multiple photos that together cover the full 360-degree view; neighboring photos overlap.

- Using a computer vision process (stitching) to combine those photos into one panoramic file: detecting features that appear in the overlapping regions of different images, then blending the images into a final panoramic frame.

- Encoding and saving the final photo at a high resolution for maximum viewing quality.

The challenge

We knew capturing 360 content while in VR would be difficult. VR games and apps already push some general-purpose computers to their limits to maintain a high-quality experience, since VR needs to render content at 90 fps. We weren’t sure a computer could capture multiple photos, stitch them, and encode the result in near real time to produce a 360 photo.

The investigation

We started by studying plugins that mimicked the three stages of a 360 camera capture inside a game engine: they captured multiple photos, stitched them together, and output a 360 frame. Unfortunately, capturing and stitching a 360 photo took 20 to 40 seconds, and sometimes longer. We wanted a solution that could capture 360 content from within VR almost instantaneously.

Reversing the problem

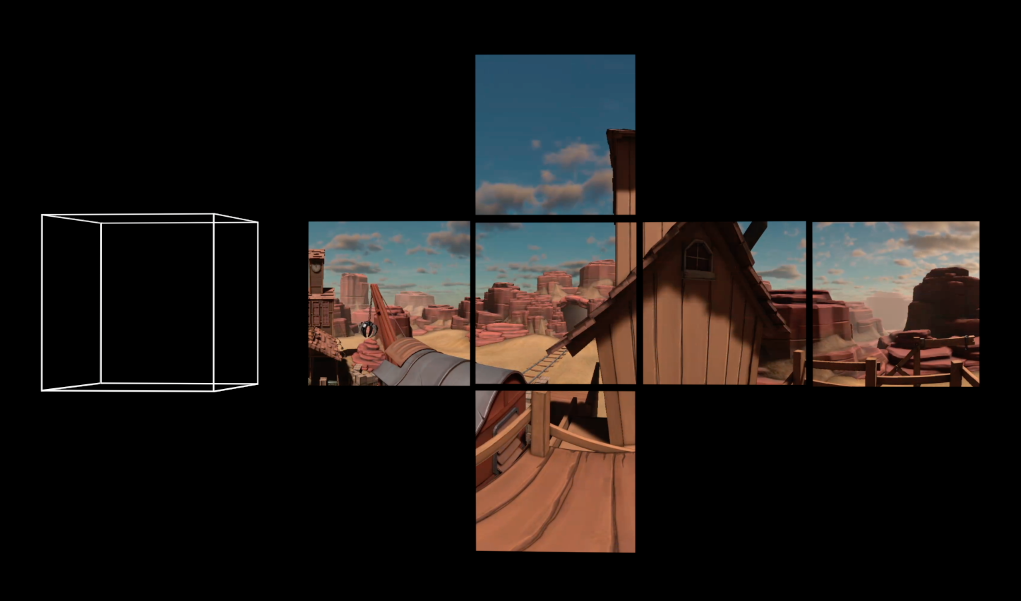

When building 360 video for News Feed, we found that cube mapping led to better delivery and streaming quality. A cube map projection stores the full surrounding view as the six faces of a cube. Cube maps have long been used in computer graphics, mostly to create skyboxes (six-sided cubes drawn behind all other graphics) and reflections. Cube maps have a few benefits:

- They have no geometric distortion within a face: each face is an ordinary perspective view with a 90-degree field of view, as if you were looking at the scene head-on, so straight lines stay straight. This matters because video codecs predict motion with motion vectors that assume straight-line movement. Equirectangular projection bends that movement into curves, so cube maps encode more efficiently.

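- Their pixels are more evenly distributed, and each face is equally important. Unlike equirectangular projection, there are no poles; an equirectangular image stretches the regions near the poles across its full width, so those rows store redundant information.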

- They’re easier to project: each part of the scene maps only onto the corresponding face of the cube.
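
The pole redundancy can be quantified with standard sphere geometry; this is a back-of-the-envelope calculation, not a measurement from the SDK. Every equirectangular row has the same width, but the latitude circle it samples shrinks by cos(latitude), so rows near the poles are heavily oversampled. Cube map pixels also vary in size, but only by a bounded factor between a face’s center and its corners:

```python
import math


def equirect_row_oversampling(height: int) -> list[float]:
    """Horizontal oversampling of each equirectangular row relative to the equator."""
    factors = []
    for row in range(height):
        # Latitude at the center of this row: near +90° at the top, -90° at the bottom.
        lat = math.pi / 2 - (row + 0.5) * math.pi / height
        factors.append(1.0 / math.cos(lat))
    return factors


def equirect_useful_fraction(height: int) -> float:
    """Share of equirectangular pixels that are not redundant oversampling."""
    factors = equirect_row_oversampling(height)
    return sum(1.0 / f for f in factors) / height


def cube_face_pixel_size_ratio() -> float:
    """Solid angle of a pixel at a face's center divided by one at its corner.

    With face coordinates x, y in [-1, 1], a pixel's solid angle is
    proportional to (1 + x**2 + y**2) ** -1.5.
    """
    center = (1 + 0**2 + 0**2) ** -1.5
    corner = (1 + 1**2 + 1**2) ** -1.5
    return center / corner


print(round(equirect_useful_fraction(1000), 3))  # 0.637, close to 2 / pi
print(round(max(equirect_row_oversampling(1000))))  # 637: top and bottom rows
print(round(cube_face_pixel_size_ratio(), 2))  # 5.2, i.e. 3 ** 1.5
```

Roughly a third of an equirectangular frame’s pixels go to oversampling near the poles, and that oversampling grows without bound toward the poles themselves, whereas cube map pixel sizes differ by at most a factor of about 5.2.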

We realized we could skip the stitching process and instead have the game engine capture a cube map natively, which saved computation and sped up capture. As an added bonus, the cube map content was higher quality than stitched content, because no quality was lost in stitching and converting to equirectangular.
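
Capturing a cube map natively amounts to rendering the scene six times from the same position with square, 90-degree cameras, one per face. Because the six view frusta meet exactly at their edges, there is no overlap to detect or blend. A minimal sketch of that camera setup, assuming OpenGL’s cube map face order and up vectors; `FaceCamera` and `cube_capture_cameras` are hypothetical names, and the SDK’s own engine integration is not described in this post:

```python
import math
from dataclasses import dataclass

Vec3 = tuple[int, int, int]


@dataclass(frozen=True)
class FaceCamera:
    """One square perspective camera; six of them share a position to capture a cube map."""

    name: str
    forward: Vec3
    up: Vec3
    fov_deg: float = 90.0  # 90° per face, so the six faces tile the full sphere

    def focal_length_px(self, face_size: int) -> float:
        # Pinhole model: f = (size / 2) / tan(fov / 2), which is size / 2 at 90°.
        return (face_size / 2) / math.tan(math.radians(self.fov_deg) / 2)


def cube_capture_cameras() -> list[FaceCamera]:
    """Look and up vectors for the six faces, in OpenGL cube map order.

    Assumption: OpenGL conventions; Unity and Unreal use other handedness
    and axis conventions, so a real integration would remap these.
    """
    return [
        FaceCamera("+X", (1, 0, 0), (0, -1, 0)),
        FaceCamera("-X", (-1, 0, 0), (0, -1, 0)),
        FaceCamera("+Y", (0, 1, 0), (0, 0, 1)),
        FaceCamera("-Y", (0, -1, 0), (0, 0, -1)),
        FaceCamera("+Z", (0, 0, 1), (0, -1, 0)),
        FaceCamera("-Z", (0, 0, -1), (0, -1, 0)),
    ]


for cam in cube_capture_cameras():
    # Each camera renders one face_size x face_size image of the cube map.
    print(cam.name, "forward", cam.forward, "up", cam.up)
```

Each face is a plain perspective render, which is why the faces carry no stitching seams or blending artifacts.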

Results of cube map capture

We decided to use cube mapping to capture the VR experience instead of replicating the 360 camera process. As a result, we could maintain 90 fps performance on Rift while capturing VR-quality 360 video at 30 fps without any noticeable compromise to the experience, with capture completing in less than one second.

We could also maintain a high-quality image for people viewing the VR capture: the SDK can capture real-time 360 video at resolutions as high as 4K. Capturing the cube map directly gives higher raw source quality than the stitching method, where the cube map is produced only at the end and loses quality at each conversion stage along the way. In fact, the perceptual quality of the cube map format at 720p is almost equivalent to that of the 1080p equirectangular format.

Note that for photos, we don’t use the 2×3 cube map format. Instead, we use a 6×1 cube strip, which is effectively the cube map in a different layout.
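
Because the strip holds the same six faces in a different arrangement, converting between the two layouts is a lossless copy. A sketch, assuming the 2×3 layout means two rows of three faces and that faces keep row-major order in the strip (the actual face order isn’t specified here); images are plain lists of pixel rows for illustration:

```python
Image = list[list[int]]  # an image as rows of pixel values


def split_faces(image: Image, grid_rows: int, grid_cols: int) -> list[Image]:
    """Cut a grid-layout cube map into its six faces, in row-major order."""
    face_h = len(image) // grid_rows
    face_w = len(image[0]) // grid_cols
    faces = []
    for gr in range(grid_rows):
        for gc in range(grid_cols):
            rows = image[gr * face_h:(gr + 1) * face_h]
            faces.append([row[gc * face_w:(gc + 1) * face_w] for row in rows])
    return faces


def grid_to_strip(image: Image, grid_rows: int = 2, grid_cols: int = 3) -> Image:
    """Re-pack a grid cube map as a single row of six faces (a 6x1 strip)."""
    faces = split_faces(image, grid_rows, grid_cols)
    face_h = len(faces[0])
    # Each output row joins the matching row of every face, left to right.
    return [[px for face in faces for px in face[y]] for y in range(face_h)]


# Toy 2x3 grid with 2x2-pixel faces; every pixel holds its face index.
grid = [[gr * 3 + gc for gc in range(3) for _ in range(2)]
        for gr in range(2) for _ in range(2)]
print(grid_to_strip(grid)[0])  # [0, 0, 1, 1, 2, 2, 3, 3, 4, 4, 5, 5]
```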

We can also use a shader to convert the cube map output to the equirectangular format that most 360 platforms support, while still offering our cube map or cube strip format for maximum quality and Facebook 360 compatibility.
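
The conversion such a shader performs can be sketched per output pixel: turn the equirectangular pixel into a viewing direction, pick the cube face whose axis dominates that direction, and project onto that face. This sketch follows OpenGL’s cube map face-selection table; the longitude and latitude orientation is an assumption, the function names are hypothetical, and a real shader would sample and filter the cube map texture rather than return coordinates:

```python
import math

Face = tuple[str, float, float]  # (face name, u, v), with u and v in [0, 1]


def equirect_pixel_to_direction(px: int, py: int, width: int, height: int) -> tuple[float, float, float]:
    """Unit viewing direction through the center of an equirectangular pixel."""
    lon = (px + 0.5) / width * 2 * math.pi - math.pi  # -pi (left) .. +pi (right)
    lat = math.pi / 2 - (py + 0.5) / height * math.pi  # +pi/2 (top) .. -pi/2 (bottom)
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


def direction_to_cube_face(x: float, y: float, z: float) -> Face:
    """Select the cube face a direction hits, following OpenGL's face-selection table."""
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:  # X dominates
        face, ma, sc, tc = ("+X", ax, -z, -y) if x > 0 else ("-X", ax, z, -y)
    elif ay >= az:  # Y dominates
        face, ma, sc, tc = ("+Y", ay, x, z) if y > 0 else ("-Y", ay, x, -z)
    else:  # Z dominates
        face, ma, sc, tc = ("+Z", az, x, -y) if z > 0 else ("-Z", az, -x, -y)
    # Project onto the face plane, then remap from [-1, 1] to [0, 1].
    return face, (sc / ma + 1) / 2, (tc / ma + 1) / 2


def equirect_to_cube_lookup(width: int, height: int) -> list[list[Face]]:
    """Per output pixel, the cube face and (u, v) a conversion shader would sample."""
    return [
        [direction_to_cube_face(*equirect_pixel_to_direction(px, py, width, height))
         for px in range(width)]
        for py in range(height)
    ]


print(direction_to_cube_face(0.0, 0.0, 1.0))  # ('+Z', 0.5, 0.5)
```

On a GPU the same arithmetic runs once per fragment, which is why the conversion is cheap enough to run alongside capture.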

Ecosystem compatibility

The 360 Capture SDK was designed for broad compatibility. For developers, the SDK supports multiple game engines, such as Unity and Unreal, as well as native engines, to encourage wide adoption. Unity support is provided through our capture script and DLL, which handle encoding 360 photos and videos on PC as well as injecting metadata. People can capture their VR experiences on hardware such as NVIDIA and AMD GPUs.
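
The post doesn’t say which metadata the SDK injects. As one illustration, a widely used convention for flagging an equirectangular photo as 360 is the Photo Sphere XMP metadata in the `GPano` namespace. The sketch below only builds the XMP packet (embedding it in the image file is not shown), and `gpano_xmp` is a hypothetical helper name:

```python
from xml.sax.saxutils import quoteattr

GPANO_NS = "http://ns.google.com/photos/1.0/panorama/"
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"


def gpano_xmp(width: int, height: int) -> str:
    """Return an XMP packet marking a full (uncropped) equirectangular image as 360."""
    props = {
        "ProjectionType": "equirectangular",
        "UsePanoramaViewer": "True",
        # Full panorama and visible (cropped) area are identical: nothing is cropped.
        "FullPanoWidthPixels": str(width),
        "FullPanoHeightPixels": str(height),
        "CroppedAreaImageWidthPixels": str(width),
        "CroppedAreaImageHeightPixels": str(height),
        "CroppedAreaLeftPixels": "0",
        "CroppedAreaTopPixels": "0",
    }
    attrs = "\n    ".join(f"GPano:{name}={quoteattr(value)}" for name, value in props.items())
    return (
        '<?xpacket begin="\ufeff" id="W5M0MpCehiHzreSzNTczkc9d"?>\n'
        '<x:xmpmeta xmlns:x="adobe:ns:meta/">\n'
        f' <rdf:RDF xmlns:rdf="{RDF_NS}">\n'
        f'  <rdf:Description rdf:about="" xmlns:GPano="{GPANO_NS}"\n'
        f"    {attrs}/>\n"
        " </rdf:RDF>\n"
        "</x:xmpmeta>\n"
        '<?xpacket end="w"?>'
    )


xmp_packet = gpano_xmp(4096, 2048)
```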

Developers wanting to integrate 360 Capture SDK into their game title or virtual reality app can find a sample SDK here.

We’re excited to help people explore the world of VR with 360 Capture SDK, and we look forward to seeing VR 360 selfies, experiences, e-sports, and more—all in 360.