At Facebook AI Research (FAIR), we wanted to investigate further how artists and professionals typically trained to work with CGI special effects can use these types of tools. Specifically, we set out to answer the following question: Can AI-generated imagery be relevant in the context of directing and producing an immersive film in VR?

To that end, we partnered with the production company OKIO Studio, the Saint George VFX studio, and director Jérôme Blanquet on the virtual reality experience “Alteration.” The goal of our collaboration was to study whether we could generate special effects with AI techniques that were good enough to be part of the final film.

Evaluating the quality of images and effects generated by AI models is a complicated task for researchers, since there is no clear metric of success. This collaboration was a challenging experiment, but it gave us the chance to get feedback on our latest AI models from video and VFX professionals.

Before shooting, we showed the director and the crew what AI models were capable of doing. These discussions helped the director decide how and when he wanted to use the AI effects. We took multiple approaches to building visual samples, experimenting with both GAN-based auto-encoders and style transfer. However, the auto-encoders, despite producing good-quality results from a research standpoint, did not generate effects that fit what the film crew was looking to achieve, so we decided to use style transfer.

But adapting style transfer for this task involved two challenges, one technical and one more subjective. A VR film is high-definition, shot in 360 degrees, and stereoscopic (there is one video for each eye to produce the 3D effect). Applying style transfer at this scale introduced memory and time constraints, and we were unsure what the effect would look like in 3D. The other challenge was “optimizing” our algorithm not against a quantifiable metric but against the aesthetic opinions of the director. We addressed these challenges in three ways.

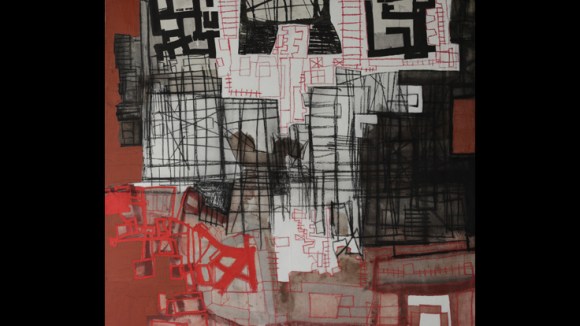

First, we needed to train the neural networks that would be used for altering the film frames. We selected 17 target styles in total, modeled after paintings by Julien Drevelle (whose artwork also appears in the film) and corrupted noise images. Our style transfer algorithm was compact enough to work on a mobile phone, but we needed to scale style transfer to a professional VR setting. We had trained the original style transfer neural networks on images with 128×128 resolution, but the frames we needed to apply them to in VR were much bigger than images on a phone. If the training images were too small, the network wouldn’t see enough of the global structure to create the style effects on a larger scale. So we had to retrain the networks with much larger images (768×768, or 36 times as many pixels per image). Overall, training the models took 120 GPU-hours.

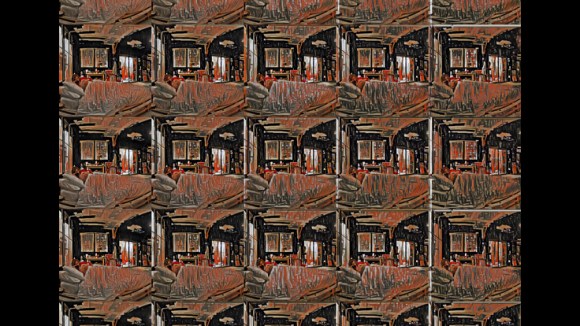

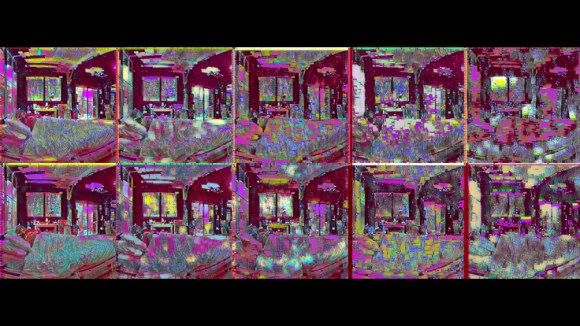

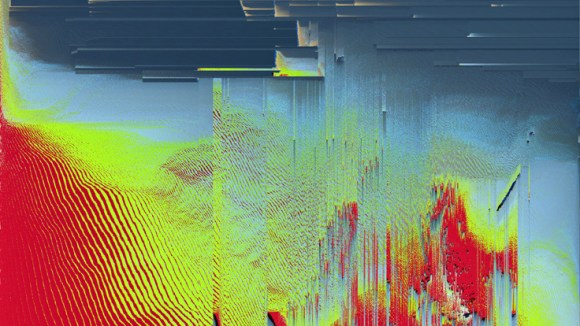

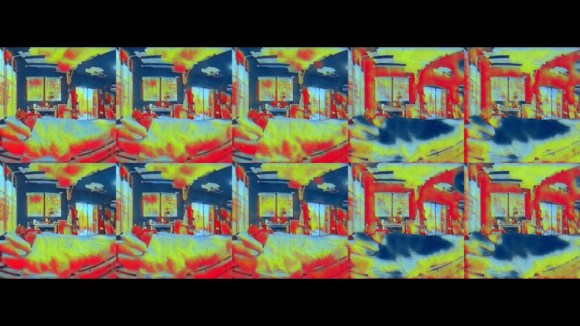

Once the models were trained, we generated high-resolution test images by applying each target style to a single frame and then tuning the strength of the style effect to create 25 different high-resolution output frames. This process helped the director pick which style or combination of styles was most interesting to him, and helped us at FAIR determine what makes a good style transfer from a video professional's standpoint. The accompanying images show examples of paintings and noise images paired with high-resolution frames, depicting various applications of the transfer. They illustrate how style transfer can generate a nearly endless range of effect variations and be a creative gold mine for directors.
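To make the idea of "tuning the strength" concrete, here is a minimal sketch of one simple way to produce a range of strengths from a single styled frame: linearly blending the original frame with its fully styled version. This is an illustrative assumption, not necessarily how the strength was controlled for the film. The function name `strength_sweep` and the choice of evenly spaced blend weights are hypothetical.

```python
import numpy as np

def strength_sweep(original, stylized, n_levels=25):
    """Return n_levels frames blending from the original to the styled frame.

    original, stylized: float arrays of identical shape (H, W, C).
    Assumption: strength is modeled as a pixel-space blend weight in [0, 1].
    """
    if original.shape != stylized.shape:
        raise ValueError("frames must have the same shape")
    # Evenly spaced strengths: 0.0 keeps the original, 1.0 is fully styled.
    strengths = np.linspace(0.0, 1.0, n_levels)
    return [(1.0 - s) * original + s * stylized for s in strengths]
```

A sweep like this gives a director a strip of candidates to compare side by side, which suits a setting where the "metric" is an aesthetic judgment rather than a number.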

Finally, we had to apply the models to the video frames for the scene. Each model required around 100 GB of memory, too much to fit on GPUs, so we ran them on CPUs instead, for a total of about 550 CPU-hours to encode all the frames with the selected styles. We also had to take stereoscopy into account and make sure that the effect was consistent for both eyes. We used disparity maps created with software provided by our VFX partner, then mapped the style transfer rendering from the left eye to the right eye. The encoded scenes were then handed off to the VFX company, which combined them to create the final rendering.
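The left-to-right mapping can be sketched as a per-pixel horizontal lookup driven by the disparity map. The snippet below is a simplified illustration under several assumptions: `disparity[y, x]` is taken to be the horizontal offset, in pixels, from a right-eye pixel to its match in the left-eye frame; lookups use nearest-neighbor sampling; and columns wrap around horizontally, as they would in an equirectangular 360 frame. The function name `warp_left_to_right` is hypothetical, and the partner's software may use a different disparity convention.

```python
import numpy as np

def warp_left_to_right(stylized_left, disparity):
    """Resample the styled left-eye frame into the right-eye view.

    stylized_left: (H, W, C) array, the styled left-eye frame.
    disparity:     (H, W) array; assumed offset from right-eye pixel (y, x)
                   to the matching column in the left-eye frame.
    """
    h, w = disparity.shape
    ys, xs = np.indices((h, w))
    # Nearest-neighbor source column, wrapped horizontally (assumed 360 layout).
    src_x = np.rint(xs + disparity).astype(int) % w
    # Advanced indexing copies each matched left-eye pixel to the right view.
    return stylized_left[ys, src_x]
```

Styling only one eye and warping the result means both eyes see the same brush strokes at the same scene points, whereas styling each eye independently could produce mismatched textures that break the 3D effect.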

With this film, we adapted style transfer to VR for the first time and demonstrated that AI is now mature enough to be used as a creative tool for filmmakers. We are happy to have opened up a new channel for visual storytelling. The virtual reality experience is available on the Oculus Store for both Rift and Samsung Gear VR, powered by Oculus. It has been acclaimed by critics since it premiered at the Tribeca Film Festival in May, winning the Jury Special Prize at the Paris Virtual Film Festival and being selected for several other festivals including Barcelona Sónar and Cannes Next.