We recently announced that Facebook will be building its twelfth data center and will be expanding the Papillion, Nebraska location from two buildings to six. As our community continues to grow and we create more immersive experiences through live video, 360 photos and videos, and virtual reality that require additional capacity, our ongoing challenge is scaling the network that interconnects more than 2 billion people.

To keep up with this growth, we designed the Fabric Aggregator, a distributed network system made up of simple building blocks already available – Facebook’s Wedge 100 switch. Taking a disaggregated approach allows us to accommodate larger regions and varied traffic patterns, while providing the flexibility to adapt to future growth.

A distributed network system with a building block approach

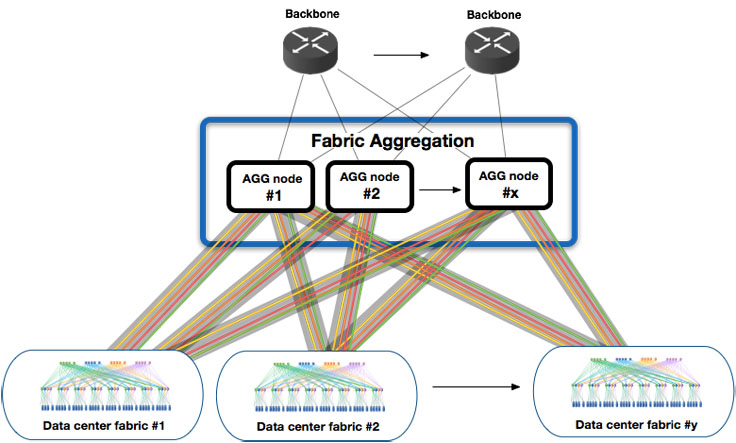

All traffic that leaves or enters Facebook’s data centers is handled by the fabric aggregation layer. Traffic that flows between buildings in a region is referred to as east/west traffic, while traffic exiting and entering a region is known as north/south traffic. As we scale our regions and introduce more immersive experiences, both types of traffic continue to grow, although at different rates. This large-scale growth puts pressure on the fabric aggregation tier in terms of port density and capacity per node.

Unfortunately, using a large, general-purpose network chassis no longer met our needs in terms of scale, power efficiency, and flexibility.

We chose to build a distributed network system from well-known, simple, and open building blocks like Wedge 100 and FBOSS, and developed a cabling assembly unit that emulates the backplane while addressing some of the challenges of the electrical backplane in a classic chassis. With this approach, we can scale the capacity, interchange the building blocks, and change the cable assembly quickly as our requirements change. This, in turn, results in significant efficiencies, power savings, and increased flexibility.

Implementation of a Fabric Aggregator Node

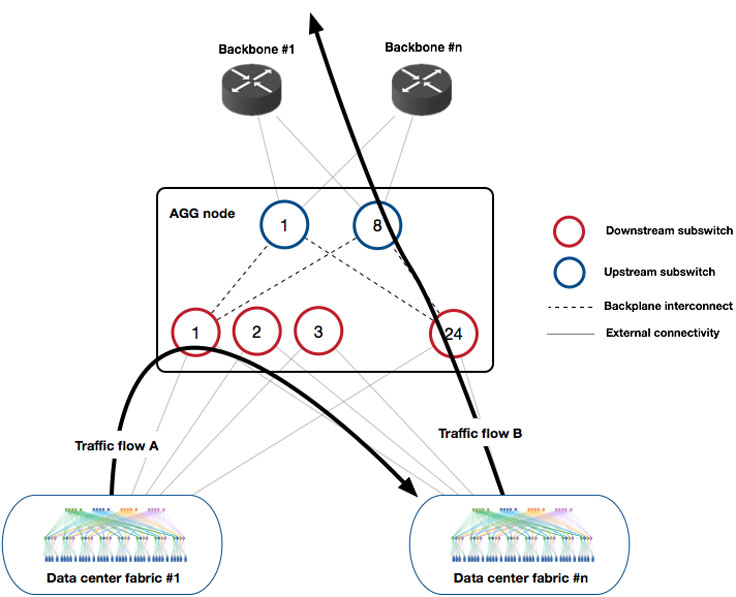

A Fabric Aggregator node is a unit of bandwidth that we stamp out to meet the overall bandwidth demand of a particular network tier.

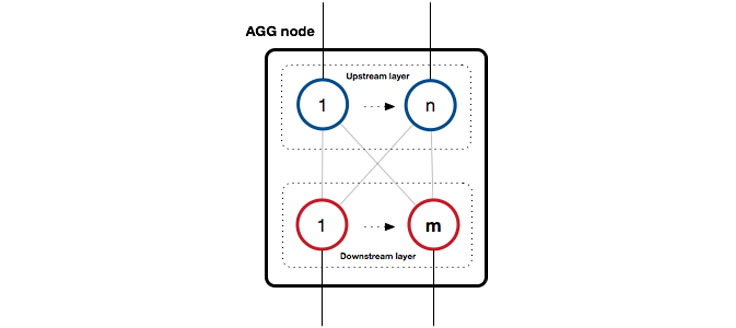

In this case, each Fabric Aggregator node implements a 2-layer cross-connect architecture. Each layer serves a different purpose:

- The downstream layer is responsible for switching regional traffic (fabric to fabric inside the same region, designated as east/west).

- The upstream layer is responsible for switching traffic to and from other regions (north/south direction). The main purpose of this layer is to compress the interconnects with Facebook’s backbone network.

The downstream and upstream layers can contain a quasi-arbitrary number of downstream subswitches and upstream subswitches. Separating the solution into two distinct layers allows us to grow the east/west and north/south capacities independently by adding more subswitches as traffic demands change.
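The independent growth of the two layers can be illustrated with a small sizing sketch. Everything in it is hypothetical: the function and field names, the per-subswitch capacity figures, and the demand numbers are made up for illustration and do not describe how the nodes are actually sized.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeLayout:
    """Subswitch counts for one Fabric Aggregator node (illustrative only)."""
    downstream_subswitches: int  # east/west layer
    upstream_subswitches: int    # north/south layer


def size_node(east_west_gbps: float,
              north_south_gbps: float,
              downstream_capacity_gbps: float,
              upstream_capacity_gbps: float) -> NodeLayout:
    """Size each layer from its own demand, independently of the other.

    The capacities are hypothetical usable figures per subswitch.
    """
    if downstream_capacity_gbps <= 0 or upstream_capacity_gbps <= 0:
        raise ValueError("per-subswitch capacity must be positive")
    return NodeLayout(
        downstream_subswitches=math.ceil(east_west_gbps / downstream_capacity_gbps),
        upstream_subswitches=math.ceil(north_south_gbps / upstream_capacity_gbps),
    )


# Made-up numbers: east/west demand doubles while north/south stays flat.
before = size_node(4000, 1500, 1000, 1000)
after = size_node(8000, 1500, 1000, 1000)
print(before)
print(after)
```

In this toy example only the downstream count changes between the two calls, which is the property the two-layer split is meant to provide.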

Today, we use Wedge 100S with Facebook Open Switching System (FBOSS) as our base building blocks for this solution, running Border Gateway Protocol (BGP) between all subswitches. As with our previous chassis switches, Backpack and 6-pack, we opted for a distributed design with no central controller. Each subswitch operates independently, sending and receiving traffic without any interaction with or dependency on other subswitches in the node. A subswitch is not differentiated by whether it operates in the downstream or upstream layer, which means all subswitches are interchangeable at the hardware and software level.

The ability to tailor different Fabric Aggregator node sizes in different regions allows us to use resources more efficiently, while having no internal dependencies keeps failures isolated, improving the overall reliability of the system.

Operational aspects

A building block approach gives us the ability to operate the solution at either the subswitch or node level. For example, if we detect a misbehaving subswitch inside a particular node, we can take that specific subswitch out of service for debugging. If there is a need to take all downstream and upstream subswitches in a node out of service, our operational tools abstract away the underlying complexity of the many interactions across individual subswitches. We also implement redundancy at the node level so that we can take many nodes out of service simultaneously in a single region. The Fabric Aggregator layer can suffer many simultaneous failures without compromising the overall performance of the network.
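The two levels of operation can be sketched with a toy model. This is not the actual operational tooling, which is not described here; the class names, the naming scheme for subswitches, and the `drain` methods are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Subswitch:
    name: str
    layer: str               # "downstream" or "upstream"
    in_service: bool = True


@dataclass
class Node:
    name: str
    subswitches: List[Subswitch] = field(default_factory=list)

    def drain_subswitch(self, subswitch_name: str) -> None:
        """Subswitch-level operation: the other subswitches are untouched."""
        for sw in self.subswitches:
            if sw.name == subswitch_name:
                sw.in_service = False
                return
        raise KeyError(subswitch_name)

    def drain(self) -> None:
        """Node-level operation: one call hides the per-subswitch steps."""
        for sw in self.subswitches:
            sw.in_service = False

    def in_service_count(self, layer: str) -> int:
        return sum(1 for sw in self.subswitches
                   if sw.layer == layer and sw.in_service)


def build_node(name: str, downstream: int, upstream: int) -> Node:
    """Build a node with hypothetical subswitch names such as 'fa1-ds0'."""
    sws = [Subswitch(f"{name}-ds{i}", "downstream") for i in range(downstream)]
    sws += [Subswitch(f"{name}-us{i}", "upstream") for i in range(upstream)]
    return Node(name, sws)


node_a = build_node("fa1", downstream=4, upstream=2)
node_b = build_node("fa2", downstream=4, upstream=2)

node_a.drain_subswitch("fa1-ds1")  # debug a single misbehaving subswitch
node_b.drain()                     # take a whole node out of service

print(node_a.in_service_count("downstream"), node_a.in_service_count("upstream"))
print(node_b.in_service_count("downstream"), node_b.in_service_count("upstream"))
```

Because the model has no shared state between subswitches, draining one leaves the rest of its node in service, mirroring the absence of internal dependencies described earlier.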

Backplane cabling solution

The Fabric Aggregator node provides the unit of bandwidth that we replicate to meet the overall demand of this aggregation tier. The physical footprint of this unit of bandwidth, in terms of space, power, and cooling, may or may not fit in a rack. The backplane cabling unit provides the necessary interconnection of the building blocks inside each Fabric Aggregator node.
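To get a feel for how much interconnection the cabling unit has to carry, the sketch below enumerates the cables inside one node. It rests on an assumption that is not confirmed here: that every downstream subswitch connects to every upstream subswitch. The function name, the subswitch labels, and the `links_per_pair` parameter are hypothetical as well.

```python
from itertools import product


def backplane_links(downstream: int, upstream: int, links_per_pair: int = 1):
    """List the (downstream, upstream, link index) cables for one node.

    Assumption: a full mesh between the two layers. The text only states
    that the node is a 2-layer cross-connect.
    """
    if downstream < 0 or upstream < 0 or links_per_pair < 1:
        raise ValueError("counts must be non-negative and links_per_pair >= 1")
    return [
        (f"ds{d}", f"us{u}", k)
        for d, u in product(range(downstream), range(upstream))
        for k in range(links_per_pair)
    ]


links = backplane_links(downstream=4, upstream=2, links_per_pair=2)
print(len(links))  # downstream * upstream * links_per_pair cables
print(links[:3])
```

Under that assumption the cable count grows with the product of the two layer sizes, so treating the cabling as its own replaceable unit keeps changes to the node size manageable.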

Deployment types

Early in our design, we recognized that the most flexible designs would need to be larger than a single rack. However, we also saw potential optimizations that could be made by aggregating into a single rack. We decided that we wanted the capability to do both while understanding the tradeoffs. We classify these into deployment types that can be chosen based on space, power, capacity, and failure constraints.

- Single-Rack
  - Well defined space constraints
  - User defined tight power parameters
  - Rack and building block defined capacity
  - Well defined failure constraints

- Multi-Rack
  - Flexible space constraints
  - User defined flexible power parameters
  - Room and building block defined capacity
  - Room defined failure constraints

From there, we developed the following cabling configurations based on needs:

- CWDM4 + Single Pair Single Mode Fiber
  - Single-Rack or Multi-Rack

- PSM4 + Parallel Single Mode Fiber
  - Single-Rack or Multi-Rack

- Pig-Tail Active Optical Cable (Combined Optic with Fiber)
  - Single-Rack Only
  - Requires Sideplane Topology

- Direct Attach Copper Cable Sideplane Assembly
  - Single-Rack Only
  - Integrated into Sideplane Topology

Specifications for all of the above backplane options have been submitted to OCP.
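The pairing of cabling configurations with deployment types can be captured as a small lookup table. The data below is transcribed from the list of configurations in this section; the class, constant, and function names are hypothetical and exist only for this sketch.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

SINGLE_RACK = "single-rack"
MULTI_RACK = "multi-rack"


@dataclass(frozen=True)
class CablingOption:
    name: str
    deployment_types: FrozenSet[str]
    sideplane: str = "not mentioned"  # relationship to the sideplane topology


CABLING_OPTIONS = [
    CablingOption("CWDM4 + single pair single mode fiber",
                  frozenset({SINGLE_RACK, MULTI_RACK})),
    CablingOption("PSM4 + parallel single mode fiber",
                  frozenset({SINGLE_RACK, MULTI_RACK})),
    CablingOption("Pig-tail active optical cable",
                  frozenset({SINGLE_RACK}), sideplane="required"),
    CablingOption("Direct attach copper cable sideplane assembly",
                  frozenset({SINGLE_RACK}), sideplane="integrated"),
]


def options_for(deployment_type: str) -> List[str]:
    """Return the names of the cabling options listed for a deployment type."""
    if deployment_type not in (SINGLE_RACK, MULTI_RACK):
        raise ValueError(f"unknown deployment type: {deployment_type!r}")
    return [opt.name for opt in CABLING_OPTIONS
            if deployment_type in opt.deployment_types]


print(options_for(MULTI_RACK))
print(options_for(SINGLE_RACK))
```

Read this way, a multi-rack deployment narrows the choice to the two fiber-based configurations, while a single-rack deployment can use any of the four.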

Conclusion

Fabric Aggregator redefines the way we scale the network capacity between data center fabrics in our regions. Its design and architecture use simple, open, and readily available network building blocks, like Wedge 100 and FBOSS, to create large-scale network solutions. The disaggregated design provides the flexibility, capacity, and speed to address our current needs and our future growth.

This solution is the product of close collaboration between many teams with different areas of expertise. Many thanks to all the people involved.