The full article is available at research.fb.com.

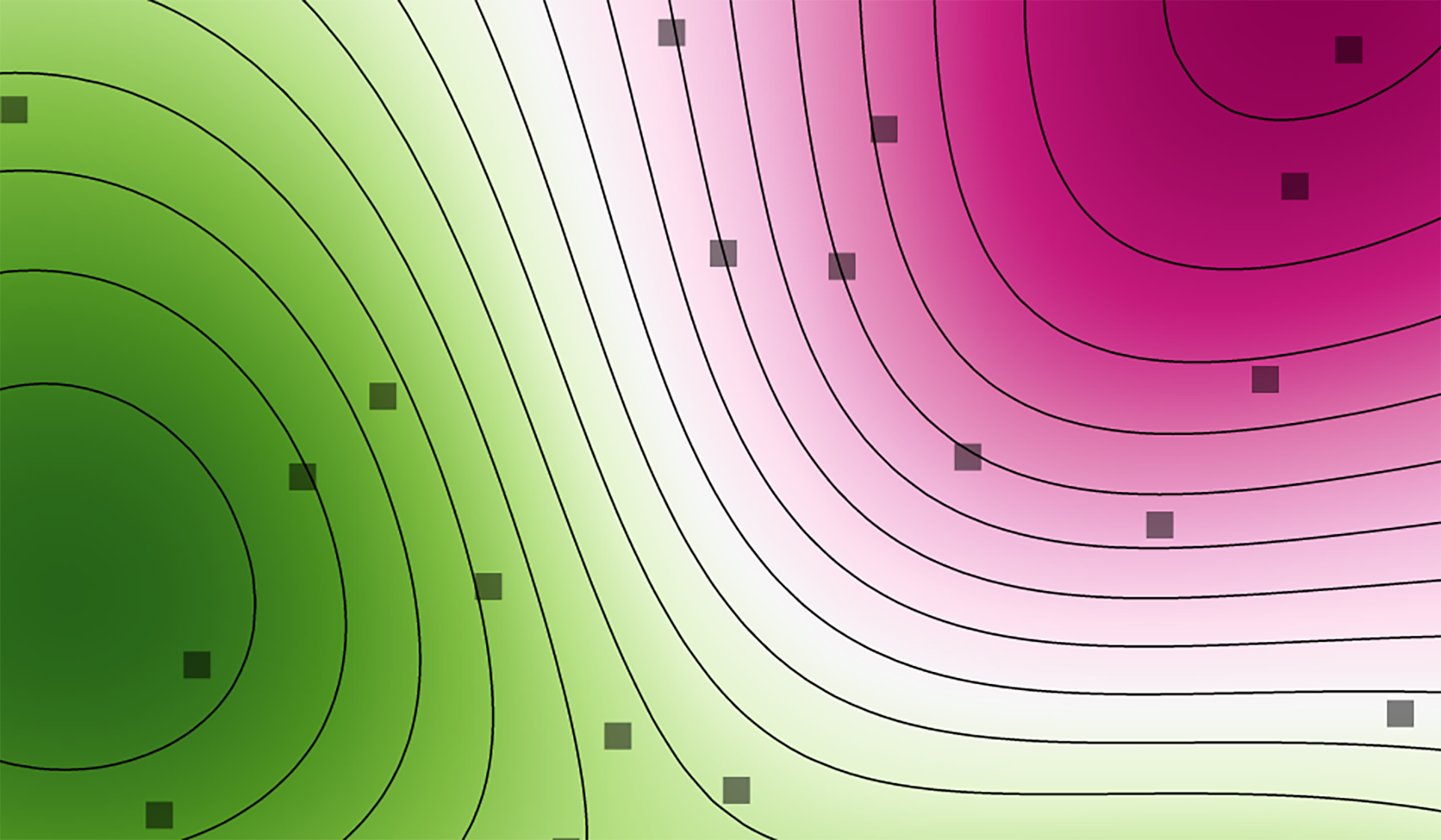

Facebook relies on a large suite of backend systems to serve billions of people each day. Many of these systems have a large number of internal parameters. For example, machine learning systems are used for a variety of prediction tasks. These systems typically involve multiple layers of predictive models, with many parameters that determine how the models are linked together to yield a final recommendation. Such parameters must be carefully tuned through live, randomized experiments, otherwise known as A/B tests. Each experiment may take a week or longer, so the challenge is to optimize a set of parameters with as few experiments as possible.

A/B tests are often used as one-shot experiments for improving a product. In our paper *Constrained Bayesian Optimization with Noisy Experiments*, now in press at the journal Bayesian Analysis, we describe how we use an AI technique called Bayesian optimization to adaptively design rounds of A/B tests based on the results of prior tests. Compared to a grid search or manual tuning, Bayesian optimization allows us to jointly tune more parameters with fewer experiments and find better values. We have used these techniques for dozens of parameter tuning experiments across a range of backend systems, and have found that they are especially effective at tuning machine learning systems.
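To make the idea of adaptively designing rounds of experiments concrete, here is a minimal sketch of a Bayesian optimization loop on a single parameter. It is an illustration under stated assumptions, not the method from the paper: it uses a plain Gaussian process with a fixed RBF kernel and the standard expected improvement rule (with the best posterior mean as the incumbent, a common simplification for noisy data), it ignores constraints, and it proposes one point per round rather than a batch. The function `run_ab_test`, the noise level, and the kernel length scale are all hypothetical stand-ins for a real experiment.

```python
import math
import numpy as np

NOISE_STD = 0.05  # assumed measurement noise of one simulated experiment


def run_ab_test(x, rng):
    """Hypothetical stand-in for a live A/B test: noisy metric at parameter x."""
    return -(x - 0.7) ** 2 + rng.normal(0.0, NOISE_STD)


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel with unit prior variance (assumed, not fitted)."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_test):
    """Posterior mean and standard deviation of the latent function at x_test."""
    y_mean = y_train.mean()  # use the sample mean as a constant prior mean
    K = rbf_kernel(x_train, x_train) + NOISE_STD**2 * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    mean = K_s.T @ alpha + y_mean
    v = np.linalg.solve(L, K_s)
    var = np.clip(1.0 - np.sum(v**2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)


_erf = np.vectorize(math.erf)


def expected_improvement(mean, std, best):
    """Expected improvement over `best` for a maximization problem."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    # Clip tiny negative values caused by floating-point rounding.
    return np.maximum((mean - best) * cdf + std * pdf, 0.0)


def optimize(n_init=3, n_rounds=5, seed=0):
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 101)  # candidate parameter values
    # Initial round: a few randomly chosen parameter values.
    x_obs = rng.uniform(0.0, 1.0, size=n_init)
    y_obs = np.array([run_ab_test(x, rng) for x in x_obs])
    for _ in range(n_rounds):
        # Fit the model to all results so far, then pick the next experiment.
        mean, std = gp_posterior(x_obs, y_obs, grid)
        incumbent = gp_posterior(x_obs, y_obs, x_obs)[0].max()
        x_next = grid[np.argmax(expected_improvement(mean, std, incumbent))]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, run_ab_test(x_next, rng))
    # Recommend the candidate with the highest posterior mean.
    mean, _ = gp_posterior(x_obs, y_obs, grid)
    return x_obs, y_obs, grid[np.argmax(mean)]


x_obs, y_obs, best_x = optimize()
print(f"recommended parameter after {len(x_obs)} experiments: {best_x:.2f}")
```

Each pass through the loop plays the role of one round of A/B tests: the model is refit to every result collected so far, and the next parameter value is chosen where the model expects the most improvement. A grid search, by contrast, would fix all of its test points up front and could not use early results to focus later experiments.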