We spend a lot of time thinking through the best ways to preserve privacy for the people using our services, while also combatting fraud across our platforms. We handle thousands of different types of events reported by client apps for various purposes, including investigating crashes, assessing performance, and monitoring product and advertising metrics. These events help us understand our apps and improve the experience for the people using them. There is a careful balance we look to achieve between reporting enough information to accurately monitor our services and minimizing data collection.

Today at @Scale 2019, we shared research we’re doing to explore a new approach to reporting events with greater anonymity — while still combatting fraud — by using cryptographic techniques like blind signatures. We believe that if browser makers and application developers work together to define a new set of APIs that leverage these techniques, combatting fraud will require less information to be collected from the people who use these browsers and apps. In this post, we’ll talk about the challenges of private fraud-resistant reporting and suggest a possible industry-wide solution using anonymized logging and blind signatures.

How we handle this now

We provide APIs that third parties use to report events. These events are actions taken by people off Facebook (e.g., clicks). Fraud occurs when an entity tries to pass off an event as legitimate even when it’s not — like when a bot makes automated clicks that are meant to look like they were made by a real person. How can we determine which events are legitimate and which are fraudulent?

To date, most companies have approached this problem by collecting hard-to-fake signals from a device, like the accelerometer reading, the battery charge level, and other high-entropy signals. A fraudster who has to fake these values will struggle to approximate real-world signals and might stand out as a statistical anomaly. But these hard to fake device signals are also hard for people to stay aware of and informed about. Because of that, the collection of this type of information can raise questions about privacy, even when it is used to combat fraud.

To mitigate the potential concerns, we approached this with data minimization in mind; what’s the least information we could collect while simultaneously being able to prevent fraud at scale? If we approach this problem from the perspective of “what extra parameters could we send?” then the absolute minimum would be parameters that add no extra information beyond “this was an authentic event.” But how do we make these unforgeable?

Digital signatures

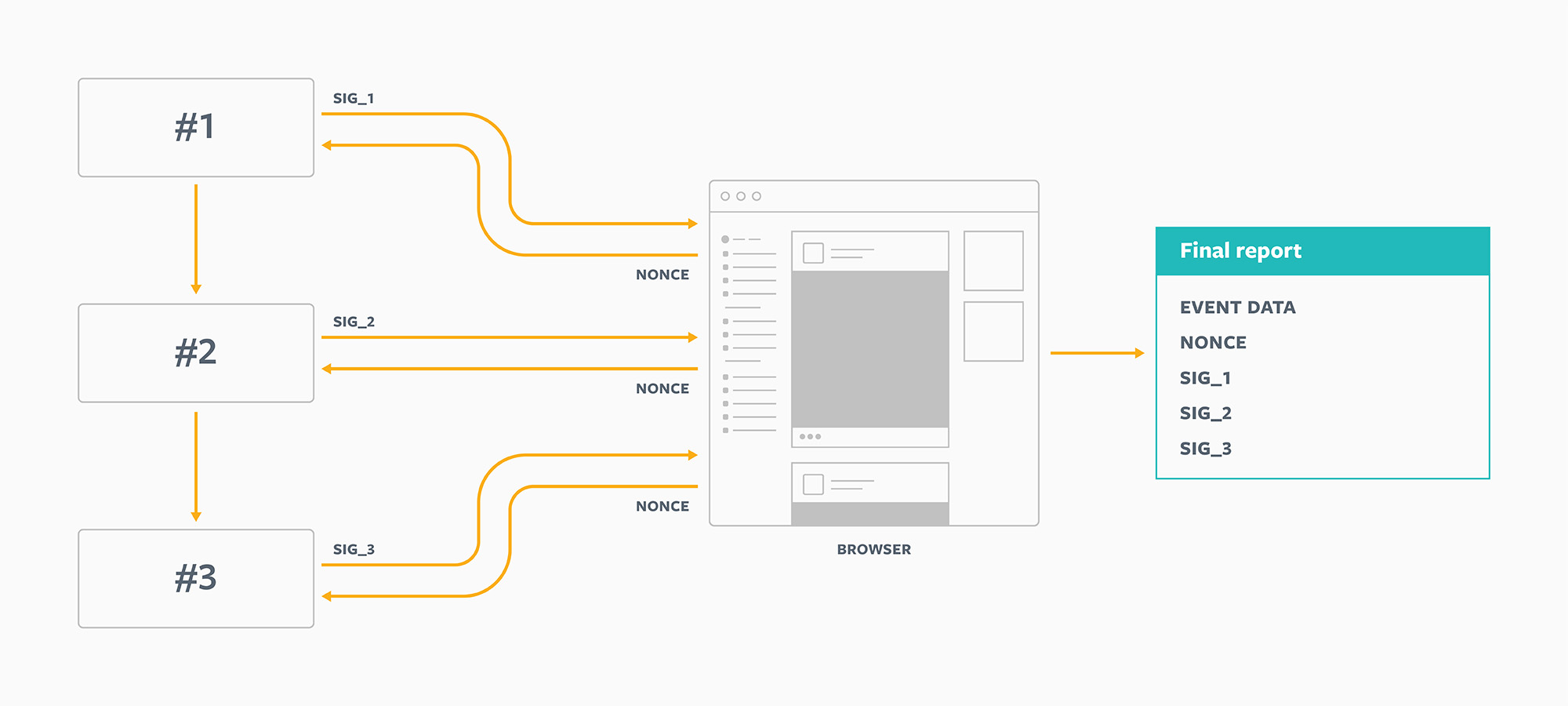

Digital signatures (based on public/private key cryptography) provide a good solution here, because they are very hard to forge. In any product interaction, a user might visit several touchpoints. We call this a user journey. A touchpoint might be from different domains or even different devices. The entity that operates each touchpoint along a user journey can use its own private key to sign some piece of data.

For an N-step user journey, we propose sending N+1 extra parameters:

- A nonce (a randomly generated string)

- 1st step signature

- 2nd step signature

- …

- Nth step signature

Each signature along the way should be a valid signature of the nonce. This can be verified by consulting each entity’s public key.

Linkability

Digital signatures are pretty effective, but they still don’t fully minimize the amount of information these parameters convey. The problem is linkability. Each of these N entities can link up the conversion report to its own internal log and line up which signature request corresponds to the report. So, how can we make the conversion report unlinkable to the signature request?

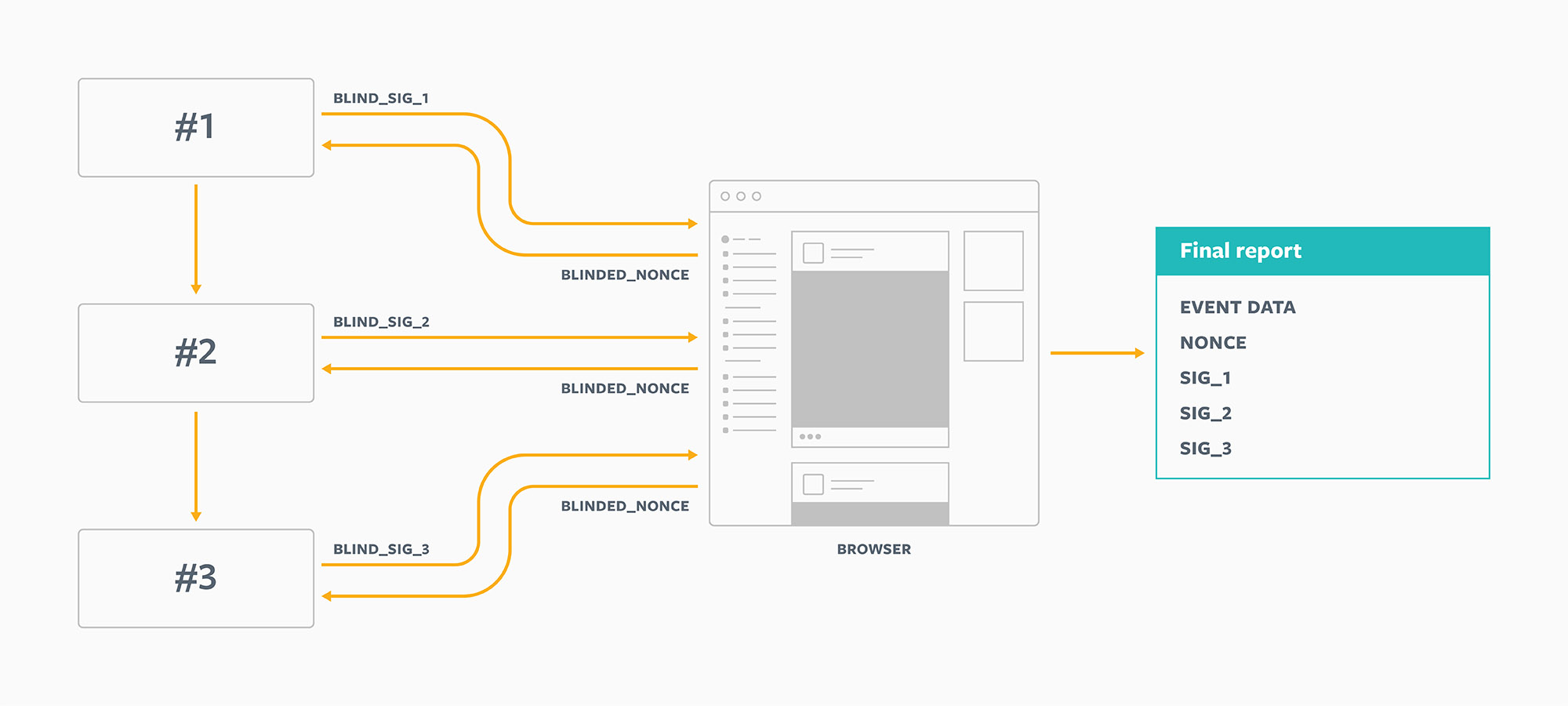

Introducing blind signatures

This is where blind signatures come in. They reduce the ability to forge parameters without increasing the prospect that data could be linked back to individuals. Instead of asking each entity to sign nonce, we ask it to sign something else instead:

blinded_nonce = Blind(nonce, blinding_factor)The entity would sign this value as normal:

blind_signature = ComputeSignature(blinded_nonce, private_key)At this point, this entity has seen only two values: blinded_nonce and blind_signature.

Now, one can “unblind” this signature:

signature = Unblind(blind_signature, blinding_factor)The great property of blind signatures is that this unblinded signature is a valid signature for the original data, prior to blinding, and the signer has not seen the original data at all.

VerifySignature(nonce, signature, public_key) = trueLater on, we can send this entity two values it has never seen before, nonce and signature, and it can verify that the signature is valid. Since most entities receive many different signature requests, this process makes it much harder for them to connect this one with a request they received previously. Blind signatures help obscure the individual because a specific individual’s request could have been any one of thousands of requests.

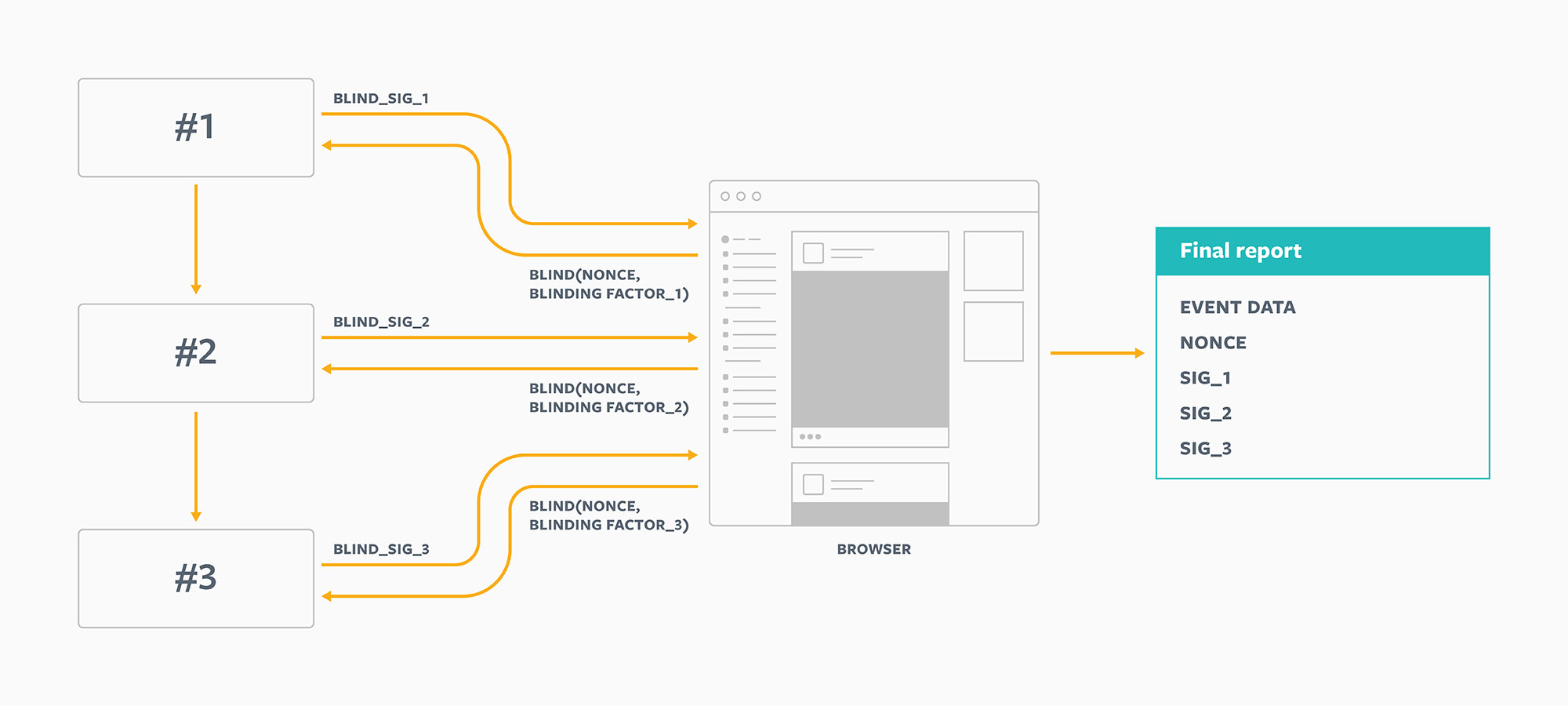

Linkability across requests

If we send the same blinded_nonce to each of the N parties, they could potentially share data with one another and link up each of the N signature requests. To prevent this behavior, we can use a different blinding_factor for each step of the user journey. That way, even if all N entities pooled their data together, they couldn’t link them up, because each would have received a different value for blinded_nonce.

This approach achieves our goal of conveying the absolute minimum amount of extra information, above and beyond a certificate of validity. To recap:

- The final report contains only N+1 extra parameters.

- None of the N entities has ever seen

noncebefore. - None of the N entities gave out any of the signatures in the report.

- Even if they all shared all their internal data, they couldn’t link up the signature requests, because each signed a different value.

What facilitates this?

The best solution, the one that does the most to prevent fraud, is to have the browser or operating system facilitate this signature collection.

- The browser or operating system can generate a totally random value for

nonce, one that never leaves the device prior to the sending of the final report. - The browser or operating system can generate N totally random values for

blinding_factor, one unique value per touchpoint. None of these values ever needs to leave the device. - The actual signature requests can happen out-of-band, directly from internal browser code to blind-signature endpoints, outside of the reach of the application (or even the browser extension) code, making it harder for malware to observe and collect the signatures.

- The browser or operating system can add random variation to the timing of when it sends the final conversion report, to further anonymize it.

The value of having the browser or operating system facilitate this is that the code can be fully open source and scrutinized by the security and privacy community. It means that private data (like the values used for blinding_factor) never needs to leave the device and hit any server. Finally, placing this logic outside the reach of normal application code makes it much more difficult to tamper with.

This does not solve every case, and some attackers will go to extreme lengths to compromise people’s accounts. But if it is the browser or operating system that facilitates this, it becomes much more difficult for fraudsters to interfere with this logic. This would help protect against attacks like stealing cookies or installing bad apps or browser extensions on a person’s device.

How hard is it to fake an event?

Let’s put ourselves in the position of an attacker and attempt to generate a convincing report. We can generate any value we want for nonce, but we cannot generate the N signatures without access to the N private keys.

The first thing an attacker would try would be a replay attack, or obtaining one valid set of report parameters and resending them over and over. This is fairly easy to prevent. We just maintain a record of all the reports we have received in the past and ensure that each has a unique value for nonce. If we use enough bits here, there should be very few collisions. Any repeat values for nonce can just be ignored.

The next thing an attacker might try is to create fake accounts or compromise the accounts of real users, and then use them to generate as many sets of signatures as possible. This is also fairly easy to mitigate. In the case where a touchpoint represents something like a click on a specific part of the user interface, the touchpoint should give out only one signature per click. If an entity requests multiple signatures for a single click or requests signatures when there was no click at all, these signature requests should be denied.

This effectively limits the maximum rate at which the attacker can obtain signatures. If the touchpoint observes irregular behavior (e.g., a person’s account doing an unusually large amount of clicking), it can just rate limit the account, rejecting all requests for signatures for a period of time.

Assuming we’ve implemented all the mitigations listed above, the attacker would probably move on to trying to find the touchpoint with the weakest protections, where it can generate the maximum number of signatures. Perhaps one touchpoint hasn’t implemented proper throttling or rate limiting. One of the use cases for this proposal could be advertising, which might be a higher value target for fraud. For example, we can call the first touchpoint A and assume it represents a click on an ad shown on a website. Then we can assume that touchpoint B represents a purchase event on an advertiser website, but also that this advertiser has not implemented appropriate throttling or rate limiting for its blind signature endpoint.

Given how easy it is to get signatures from touchpoint B, an attacker just needs to have fake or compromised accounts click any ad on Facebook, get a valid signature, then generate a signature from touchpoint B. What if the signatures they received from Facebook indicated more than just an ad click, so we could better identify the weak point? Can we make those signatures somehow indicate the destination the ad led to?

Partially blind signatures

We can do this with partially blind signatures. There is a shared piece of information that’s available both at the time that the signature is created and in the final report. That piece of information is the order of the touchpoints.

Let’s adjust the proposal slightly to ensure that a report of a user journey from touchpoint A to B has signatures that validate only for this ordering. The easiest way to implement a partially blind signature is by generating multiple public-private keypairs. Managing several keys could be operationally difficult. There are other ways to perform partially blinded signatures without multiple keys, however they involve more advanced cryptography such as pairing based curves which lack common implementations on platforms. We’d love to work with the community to make it possible to make these more practical, but until then we could use multiple keys for the sake of simplicity.

Continuing our previous example of advertising, let’s assume that the user journey involves a person clicking on an ad, which takes them to a destination page on an advertiser’s site where they might purchase a product. Several advertisers could run ads, which could redirect users to different websites. With a partially blinded signature, Facebook would generate one public-private keypair for each destination URL. For example, if clicking on an ad takes someone to a page on the site amazingtoasters.com, we would use the public-private keypair for that destination website to generate the first signature (the signature that represents a click on an ad on Facebook). Later, if a purchase occurs, the browser sends Facebook a report with both Facebook’s URL-specific signature and the advertiser’s signature. When we are validating the report, we can grab the corresponding public key associated with amazingtoasters.com and use that to validate. This process implicitly binds the destination url to the blind signature.

This makes fraud significantly harder

This minor update to the proposal makes fraud significantly harder. In our advertising example, if the attacker uses fake or compromised accounts to click on random ads they are served, they’ll get signatures that are valid only for user journeys leading to specific touchpoints. Being able to cryptographically bind metadata to blind signatures has applicability to a wide set of problems involving multiple touchpoints. It would prevent attackers from massively exploiting the weakest link touchpoint. They would first have to have their fake or compromised accounts be served ads leading to that website. Since the attacker cannot control which ads they are served, this makes things much harder for them. We could also choose to serve no ads to suspicious users or to serve only ads directing to well-secured destinations.

Let’s work together

What’s become clear to us throughout this early research is that we cannot do this work alone. This proposal requires browser and operating-system manufacturers to work together with application creators and website owners to fight fraud. We believe this approach would not only be a more effective fraud deterrent than current approaches, but also provide data-minimization advantages.

We’re looking for input from the academic and business communities to continue improving and refining this proposal. We invite you to submit comments and input to the W3C and help us move this work forward. Please let us know: What aren’t we considering? Can we provide even better anti-fraud guarantees? How can we implement partially blind signatures in a simpler way? How can we make it as easy as possible for website owners to implement blind signature endpoints? We’d love to hear your thoughts.

Several people contributed significantly to this work. We’d like to thank Andrew Knox, Kevin Lewi, Payman Mohassel, Scott Renfro, and Erik Taubeneck.