It isn’t enough for virtual assistants to offer a rote response to your voice or text. For AI systems to become truly useful in our daily lives, they’ll need to achieve what’s currently impossible: full comprehension of human language.

One strategy for eventually building AI with human-level language understanding is to train those systems in a more natural way, by tying language to specific environments. Just as babies first learn to name what they can see and touch, this approach — sometimes referred to as embodied AI — favors learning in the context of a system’s surroundings, rather than training through large data sets of text (like Wikipedia).

Researchers in the Facebook Artificial Intelligence Research (FAIR) group have developed a new research task, called Talk the Walk, that explores this embodied AI approach while introducing a degree of realism not previously found in this area.

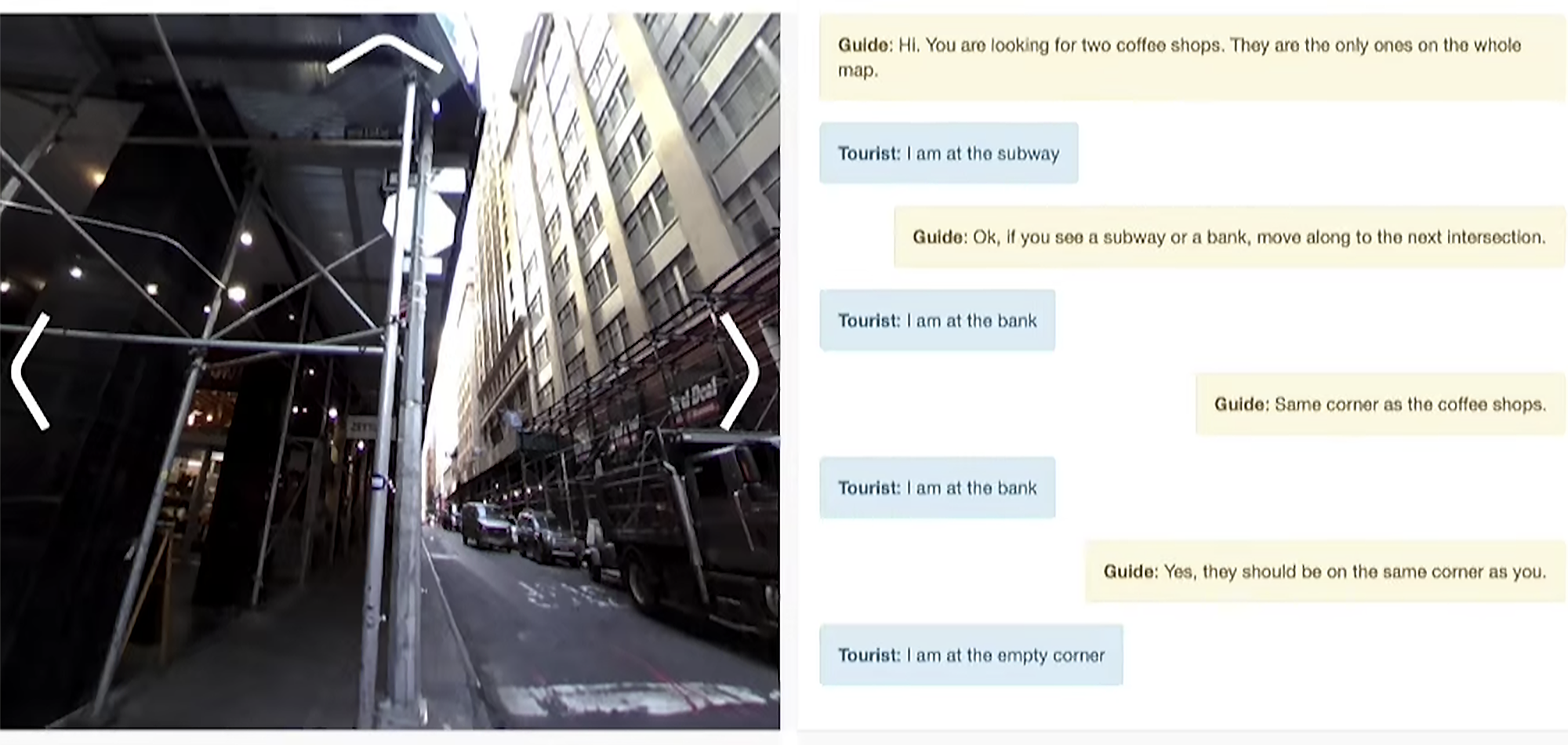

In the task, a pair of AI agents has to communicate to accomplish the shared goal of navigating to a specific location. But rather than presenting the agents with a simplified, game-like setting, the task asks one agent, the tourist bot, to navigate its way through 360-degree images of actual New York City neighborhoods. It does so with the help of the guide bot, which sees nothing but a map of the neighborhood. Using a novel attention mechanism we created, called MASC (Masked Attention for Spatial Convolution), we helped the guide bot focus on the right place on the map. This in turn produced results that were, in some cases, more than twice as accurate on the test set as those obtained without it.

The goal of this work is to improve the research community’s understanding of how communication, perception, and action can lead to grounded language learning, and to provide a stress test for natural language as a method of interaction. As part of FAIR’s contribution to the broader pursuit of embodied AI, we are releasing the baselines and data set for Talk the Walk. Sharing this work will provide other researchers with a framework to test their own embodied AI systems, particularly with respect to dialogue.

Pairing AI tourists with AI guides

To provide environments for their systems to learn and demonstrate grounded language, FAIR researchers used a 360-degree camera to capture portions of five New York City neighborhoods: Manhattan’s Hell’s Kitchen, East Village, Financial District, and Upper East Side, and Williamsburg in Brooklyn. These selected areas featured uniform, grid-based layouts with typical four-cornered street intersections. The captured imagery served as the first-person view of the environment in which one half of each pair of AI agents, the “tourist,” operated.

The AI “guide,” on the other hand, had access to only a 2D overhead map with generic landmarks, such as “restaurant” and “hotel.” Neither bot could share its view with the other, so navigating to a specific location required communication. Each episode in this task concludes when the guide predicts that the tourist has arrived at the goal location. If the prediction is correct, the episode is marked as successful; otherwise, it is marked as failed. The task doesn’t put a limit on the number of moves or communications.
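The episode rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the grid coordinates, the `run_episode` name, and the idea that the guide announces arrival as soon as its belief matches the goal are all hypothetical and not taken from the released baselines.

```python
def run_episode(goal, steps):
    """Play one episode and report whether it counts as a success.

    `steps` is a sequence of (true_position, guide_belief) pairs, one per
    turn. Both the pairing and the stopping rule are illustrative assumptions.
    """
    for true_position, guide_belief in steps:
        if guide_belief == goal:
            # The guide predicts "the tourist has arrived"; the episode ends
            # here and succeeds only if the prediction is actually correct.
            return true_position == goal
    # Assumption: an episode in which the guide never predicts is a failure.
    return False


# The guide's belief reaches the goal one turn too early, so this fails.
early = [((0, 0), (0, 1)), ((0, 1), (1, 1)), ((1, 1), (1, 1))]
# The guide's belief reaches the goal exactly when the tourist does.
on_time = [((0, 0), (0, 0)), ((0, 1), (0, 1)), ((1, 1), (1, 1))]

print(run_episode((1, 1), early))
print(run_episode((1, 1), on_time))
```

Because the task places no limit on moves or messages, the loop simply runs until the guide commits to a prediction.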

The reliance on realism is new for this field, where entirely simulated environments are the norm. FAIR researchers applied the same realism to the natural language interaction between the agents. Rather than generating carefully worded messages for the bots to use, such as “Go to the next block, then turn right to get to the restaurant,” the team collected real interactions from human players. These participants were assigned the same guide and tourist roles as the bots, with the same shared-navigation goals and information constraints (either first-person views or overhead maps).

This emphasis on using real environments and real-life language made the overall problem more difficult. A simulated environment is usually less cluttered and more predictable than 360-degree imagery of an actual city block. A series of carefully scripted responses isn’t likely to capture the nuanced inaccuracies and muddled messaging inherent to genuine, person-to-person conversations. Raising the difficulty of the task makes its results more relevant. To be truly effective at interacting with humans, future AI systems will need to understand text that’s embedded in messy visual environments and language that doesn’t line up with a limited, predetermined list of phrases.

Exploring natural and synthetic communication

Though natural language communication was the primary focus of this research, the FAIR team put those efforts into context by including two additional “emergent communication” settings, where the agents use a different communication protocol rather than mimic human language.

In the first setting, agents communicated via continuous vectors, meaning they transferred raw data to one another. Those continuous vectors included, for example, representations of what the tourists were observing and doing, to help the map-based guides localize their counterparts.

The second emergent communication setting took a different approach, using what the researchers refer to as synthetic language. In this setting, communication was far simpler than natural language, using a very limited set of discrete symbols to convey information. Because the bots can communicate in the simplest form possible, the interactions are fast and precise, and they give us a good idea of how well we could perform with natural language.
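To make the contrast between the two emergent settings concrete, here is a minimal sketch. The landmark vocabulary, the detector confidences, and the fixed 0.5 threshold are assumptions chosen for illustration; in the actual experiments the protocols are learned rather than hand-written.

```python
LANDMARKS = ["restaurant", "hotel", "bar"]  # illustrative vocabulary


def continuous_message(confidences):
    """Continuous setting: send raw values, one float per landmark type."""
    return [float(confidences.get(name, 0.0)) for name in LANDMARKS]


def discrete_message(confidences, threshold=0.5):
    """Synthetic-language setting: send one binary symbol per landmark type.

    The thresholding rule is a stand-in for whatever discretization a
    trained agent would learn.
    """
    return [1 if confidences.get(name, 0.0) >= threshold else 0
            for name in LANDMARKS]


# Hypothetical perception output for the tourist's current street corner.
observation = {"restaurant": 0.9, "bar": 0.2}

print(continuous_message(observation))
print(discrete_message(observation))
```

The continuous message keeps every detail of the tourist's perception, while the discrete one compresses it into a handful of symbols, which is closer in spirit to words.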

The AI tourists and guides performed better when they used one of the two emergent protocols, rather than natural language. This wasn’t a surprise to the researchers, since the natural language used wasn’t optimized for unambiguous information exchange. The best results came when the bots exchanged raw data while being provided with “perfect perception,” meaning the assumption of a perfect ability to identify a restaurant or other landmark based on visual data. (In real situations, computer vision is far from perfect at such tasks.) In these best-case-scenario experiments, both the image recognition and language elements were set aside in order to create benchmarks for use by FAIR or other researchers who experiment with the Talk the Walk task. This approach also beat the performance of human players, highlighting the fact that, for certain kinds of tasks, communication through natural language can be challenging even for people.

Grounding AI in its environment

It’s important to put these results, and FAIR’s research, in the proper context: Talk the Walk isn’t a competition between natural language and synthetic interactions but rather an attempt to offer clarity and quantifiable results related to the ultimate goal of creating machines that can effectively “talk” to humans and to one another.

To demonstrate the value of language grounding, our researchers built MASC, the novel attention mechanism, which lets the guide interpret the tourist’s messages with respect to the overhead map in order to predict the tourist’s location. Attention mechanisms are often used in deep learning to let a system concentrate on the most relevant parts of its input, similar to how humans focus their attention. MASC translates landmark embeddings (representations of, for example, “restaurant,” “bar,” etc.) according to the tourist’s state transitions (such as moving left, right, and, from an overhead perspective, up and down), and expresses these translations as a 2D convolution over the map embeddings. The resulting spatial mask predicts where the tourist currently is, based on tracking its likely progress.
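The translation-by-convolution idea can be illustrated with a toy version. This is a sketch under strong assumptions, not the released model: landmarks are binary grids rather than learned embeddings, the 3x3 masks are hard-coded one-hot kernels rather than predicted from the tourist’s messages, and the names `action_mask`, `conv2d_same`, and `localize` are hypothetical.

```python
# Row 0 is the top of the overhead map; "up" decreases the row index.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def action_mask(action):
    """3x3 one-hot kernel that points back at the cell the tourist came from."""
    dr, dc = ACTIONS[action]
    kernel = [[0.0] * 3 for _ in range(3)]
    kernel[1 - dr][1 - dc] = 1.0
    return kernel


def conv2d_same(grid, kernel):
    """Zero-padded 3x3 cross-correlation that keeps the grid size."""
    rows, cols = len(grid), len(grid[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for i in range(3):
                for j in range(3):
                    rr, cc = r + i - 1, c + j - 1
                    if 0 <= rr < rows and 0 <= cc < cols:
                        out[r][c] += kernel[i][j] * grid[rr][cc]
    return out


def localize(landmark_maps, observations, actions):
    """Score each map cell as the tourist's current position.

    `observations` holds the landmark seen before any move and after each
    action, so it is one element longer than `actions`.
    """
    belief = [row[:] for row in landmark_maps[observations[0]]]
    for action, seen in zip(actions, observations[1:]):
        moved = conv2d_same(belief, action_mask(action))  # translate belief
        here = landmark_maps[seen]
        belief = [[m * h for m, h in zip(m_row, h_row)]   # keep matching cells
                  for m_row, h_row in zip(moved, here)]
    return belief


landmark_maps = {
    "restaurant": [[0, 0, 0], [0, 1, 0], [1, 0, 0]],
    "hotel":      [[0, 1, 0], [0, 0, 0], [0, 0, 1]],
}
# The tourist reports a restaurant, moves up, then reports a hotel.
belief = localize(landmark_maps, ["restaurant", "hotel"], ["up"])
print(belief)
```

In this toy map, only one cell has a hotel with a restaurant directly below it, so the belief collapses onto the top-middle cell. Reporting the action is what removes the ambiguity between the two hotels.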

What’s significant about MASC is that it was so broadly effective. The mechanism boosted localization performance across all communication protocols: natural language and both emergent communication methods. By grounding the tourist system’s utterances in relation to the guide system’s map, MASC offered large improvements to accuracy, more than doubling accuracy on the test set when using synthetic communication.

Talk the Walk revealed other, more specific results related to language grounding, including the fact that the paired AI systems often performed better when the tourist communicated the actions it was taking (which way it was moving, for example), and not just what it was observing (such as a restaurant directly ahead).

The AI agents also performed better at the task when they had been trained to generate their own natural language messages — with the express purpose of communicating about this task — rather than simply using verbatim examples of text drawn from the human players’ interactions. This bump in performance had to do, at least in part, with the extraneous chitchat that’s often an upside for natural language interactions but that can get in the way of a communication-based mission. For example, one human participant, after seeing two coffee shops and a Chipotle located close to one another, messaged, “man this is where I need to go if I visit ny,” eliciting the response “heaven!” from the other player. By fine-tuning and optimizing AI agents to generate natural language that only related to the task, those interactions avoided these (and less colorful) diversions.

Finally, the task showed that humans using natural language are worse at localizing themselves than AI agents using synthetic communication. Like Talk the Walk’s other comparisons between human and machine performance, this is an important result, since it helps establish a baseline for further study of the challenges related to developing AI systems that rely on natural language, as well as possible opportunities. Can future systems take advantage of the versatility of natural language while avoiding the ambiguities and other inefficiencies inherent to the way people communicate? Or does dialogue impose restrictions that can’t be circumvented? As foundational research, this work frames those questions, rather than attempting to answer them. Of course, the downside of simple synthetic communication is that it is not directly useful for humans, so the challenge Talk the Walk poses is achieving similar or better performance with natural language. And while we’re confident that natural language can eventually be married with more machine-like efficiencies, it will take further research, whether by FAIR or by others, to make that a reality.

Natural language isn’t easy, but it might be necessary

The Talk the Walk task is hard. It’s so hard that some aspects, such as using computer vision to reliably understand text in signage, had to be sidestepped in these initial experiments in order to focus on communication. But the task helps reveal the full nature of the challenges associated with embodied AI, including perceiving a given environment, navigating through it, and communicating about it. By establishing initial baselines, and presenting a novel convolutional masking mechanism to ground communicated actions, this research shows the potential of grounded language to make interactions between conversational AI systems more accurate while also revealing just how large the gap remains between natural and synthetic communication. The raw efficiency of transferring pure data and using emergent synthetic language has a well-known accuracy advantage over natural language, one that this research further defines and quantifies. Natural language still has a key benefit, though: it’s understandable to most people, without requiring extra steps or knowledge to decipher its meaning. For that reason, our ultimate goal is to achieve that high degree of synthetic performance through natural language interaction, and to challenge the community to do the same.

Along with the observations in this paper, FAIR is releasing its Talk the Walk data set — the first large-scale dialogue data set grounded in action and perception — to assist in related research by the AI community. Our own experiments shed light on the sub-problems of localization and communication, but our hope is that others will use the task to better understand goal-oriented dialogue, navigation, visual perception, and other challenges. After all, the potential applications for this kind of grounded language learning are wide-ranging. From AI assistants that can use casual conversation exchanges to offer navigation assistance and descriptions of surroundings to the visually impaired, to robots and virtual assistants that can carry out errands by communicating with people, bots, or some combination of the two, grounded language could fundamentally improve the way we interact with AI-based systems.

We would like to express our gratitude to our colleagues Kurt Shuster, Dhruv Batra, and Devi Parikh, and to our intern Harm de Vries, for their work on this research and the resulting paper. In keeping with FAIR’s open source mission, the Talk the Walk task is meant to be a challenge for the wider AI research community, both in terms of the individual sub-problems that need to be addressed and in finding ways to combine the different components for perception, action, and dialogue. We look forward to seeing how the community tackles these important challenges.