One of the long-term goals in AI is to develop intelligent chat bots that can converse with people in a natural way. Existing chat bots can sometimes complete specific independent tasks but have trouble understanding more than a single sentence or chaining subtasks together to complete a bigger task. More complex dialog, such as booking a restaurant or chatting about sports or news, requires the ability to understand multiple sentences and then reason about those sentences to supply the next part of the conversation. Since human dialog is so varied, chat bots must be skilled at many related tasks that all require different expertise but use the same input and output format. To achieve these goals, it is necessary to build software that unifies these tasks, as well as the agents that can learn from them.

Recognizing this need, the Facebook AI Research (FAIR) team has built a new, open source platform for training and testing dialog models across multiple tasks at once. ParlAI (pronounced “par-lay”) is a one-stop shop for dialog research, where researchers can submit new tasks and training algorithms to a single, shared repository. Integration with Mechanical Turk for data collection, training, and evaluation also allows bots in ParlAI to talk to humans. This works toward the goal of unifying existing dialog data sets with learning systems that contain real dialog between people and bots.

ParlAI complements existing FAIR text research efforts like FastText, our quick and efficient text classification tool, and CommAI, our framework for developing artificial general intelligence through increasingly complex tasks.

Tasks in ParlAI

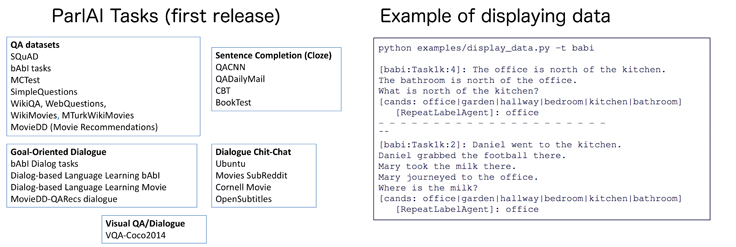

More than 20 public datasets are included in this release, shown in the left panel below.

The tasks are separated into five categories:

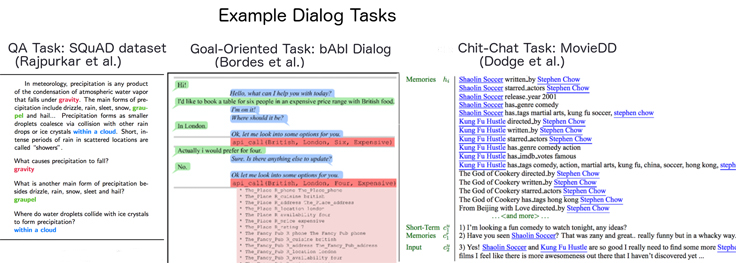

- Question answering: This is one of the simplest forms of dialog, with only one turn per speaker. Question answering is particularly useful because evaluation is simpler than in other forms of dialog: If the answer to the question is known (i.e., the data set is labeled), then we can quickly check whether the answer is correct.

- Sentence completion (cloze test): In this test, the agent has to fill in a missing word in the next utterance in a dialog. While this is another specialized dialog task, the datasets are cheap to make and the evaluation is simple.

- Goal-oriented dialog: A much more realistic class of dialog involves having to achieve a goal; for example, a customer and a travel agent discussing a flight, a speaker recommending a movie to another speaker, two speakers agreeing when and where to eat together, and so on.

- Chit-chat dialog: Some tasks don’t necessarily have an explicit goal but are more of a discussion; for example, two speakers discussing sports, movies, or a mutual interest.

- Visual dialog: These are tasks that include images as well as text. In the real world, dialog often is grounded in physical objects. In the future, we plan to add other sensory information such as audio.

Choosing a task in ParlAI is as easy as specifying it on the command line. If the dataset has not yet been downloaded, ParlAI will download it automatically. Because all datasets are treated the same way in ParlAI (through a single dialog API), a dialog agent can switch between training and testing on any of them. You can also specify many tasks at once (multitasking) by providing a comma-separated list: for example, -t babi,squad uses those two datasets, -t #qa uses all the QA datasets at once, and -t #all uses every task in ParlAI. The aim is to make it easy to build and evaluate rich dialog models.
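To make the comma-separated and # shorthand concrete, here is a minimal sketch of how such a task string could be expanded into a list of task names. It is not ParlAI's own parser: the TASK_TAGS registry, its entries, its category tags, and the expand_task_spec function are all hypothetical names chosen for illustration.

```python
# Hypothetical registry mapping task names to category tags.
# These entries are illustrative only, not ParlAI's actual task list.
TASK_TAGS = {
    "babi": {"qa"},
    "squad": {"qa"},
    "dialog_babi": {"goal"},
    "vqa": {"visual"},
}


def expand_task_spec(spec):
    """Expand a spec such as 'babi,squad', '#qa', or '#all' into task names."""
    tasks = []
    for item in spec.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate stray commas
        if item == "#all":
            matches = list(TASK_TAGS)  # every registered task
        elif item.startswith("#"):
            tag = item[1:]
            matches = [name for name, tags in TASK_TAGS.items() if tag in tags]
            if not matches:
                raise ValueError(f"unknown task category: {item}")
        elif item in TASK_TAGS:
            matches = [item]
        else:
            raise ValueError(f"unknown task: {item}")
        # Keep first-seen order and drop duplicates.
        for name in matches:
            if name not in tasks:
                tasks.append(name)
    return tasks


if __name__ == "__main__":
    print(expand_task_spec("babi,squad"))
    print(expand_task_spec("#qa"))
    print(expand_task_spec("#all"))
```

Under this sketch, a multitask run is simply a run over the expanded list, which is why a single agent can move between tasks without any change to its own code.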

Worlds, agents, and teachers

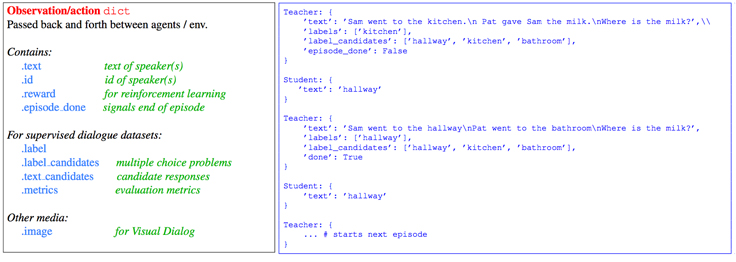

The main concepts (classes) in ParlAI are worlds, agents, and teachers. A world is the environment the speakers live in, and can vary from a simple two-way conversation to more complex scenarios such as a reactive game environment. Agents are things that can act and speak in the world. An agent can be a learner, such as a machine-learned system, or a hard-coded bot designed to interact with learners, such as a non-player character in a game. Finally, a teacher is a type of agent that talks to the learner, for example, one that implements one of the tasks listed previously.

All agents use a single format — the observation/action object (a Python dict) — to exchange text, labels, and rewards with other agents.

Agents send this message to speak, and receive a message in the same form to observe other speakers in the environment. This allows us to tackle all kinds of dialog problems, from reinforcement learning to fully supervised learning, while guaranteeing that all data sets conform to the same standard. When researchers build new models, they can easily apply them across many tasks.
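As a rough illustration of this shared message format, the sketch below builds one observation/action dict and passes it to a toy agent. The text, labels, and reward fields follow the description above; the id and episode_done keys, the EchoLabelAgent class, and the exact method layout are assumptions made for this example rather than a statement of ParlAI's API.

```python
class EchoLabelAgent:
    """Toy agent: replies with the first label it was shown, if any."""

    def __init__(self):
        self.observation = None

    def observe(self, observation):
        # Store the incoming message so act() can respond to it.
        self.observation = observation
        return observation

    def act(self):
        obs = self.observation or {}
        labels = obs.get("labels")
        # The reply uses the same dict format as the message it answers.
        return {
            "id": "EchoLabelAgent",
            "text": labels[0] if labels else "I don't know.",
        }


# A supervised-style message: the label is supplied alongside the text.
message = {
    "id": "teacher",            # assumed key: who is speaking
    "text": "Where is the milk?",
    "labels": ["kitchen"],
    "reward": 0,
    "episode_done": True,       # assumed key: marks the end of an episode
}

if __name__ == "__main__":
    agent = EchoLabelAgent()
    agent.observe(message)
    print(agent.act())
```

Because the reply is the same kind of dict as the message, the same agent could be paired with a supervised teacher that supplies labels or with an environment that supplies only text and a reward.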

After defining a world and the agents in it, a main loop can be run for training, testing, or displaying, which calls the function world.parley(). The left panel below shows the skeleton of an example main loop, and on the right is the actual code for parley().
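For readers without the figure at hand, the following self-contained sketch shows the general shape of such a loop. Only the name parley() comes from the text above; ScriptedTeacher, LabelCopier, TwoAgentWorld, run, and epoch_done are hypothetical stand-ins, and this is not the code shown in the figure.

```python
class ScriptedTeacher:
    """Toy teacher that asks fixed questions and scores the replies."""

    def __init__(self, examples):
        self.examples = examples  # list of (question, answer) pairs
        self.index = 0
        self.seen = 0
        self.correct = 0

    def act(self):
        question, answer = self.examples[self.index]
        return {"text": question, "labels": [answer], "episode_done": True}

    def observe(self, observation):
        # Score the learner's reply against the current answer, then advance.
        _, answer = self.examples[self.index]
        self.seen += 1
        if observation.get("text") == answer:
            self.correct += 1
        self.index += 1

    def epoch_done(self):
        return self.index >= len(self.examples)


class LabelCopier:
    """Toy learner that copies the first label it observes."""

    def __init__(self):
        self.observation = {}

    def observe(self, observation):
        self.observation = observation

    def act(self):
        labels = self.observation.get("labels") or [""]
        return {"text": labels[0]}


class TwoAgentWorld:
    """Two-way conversation: the teacher speaks, then the learner replies."""

    def __init__(self, teacher, learner):
        self.teacher = teacher
        self.learner = learner

    def parley(self):
        query = self.teacher.act()
        self.learner.observe(query)
        reply = self.learner.act()
        self.teacher.observe(reply)

    def epoch_done(self):
        return self.teacher.epoch_done()


def run(world):
    # Main loop: one exchange per call to parley() until the data runs out.
    while not world.epoch_done():
        world.parley()


if __name__ == "__main__":
    teacher = ScriptedTeacher([("Where is the milk?", "kitchen"),
                               ("Where is John?", "garden")])
    run(TwoAgentWorld(teacher, LabelCopier()))
    print(teacher.correct, "of", teacher.seen, "correct")
```

Keeping the turn-taking logic in the world rather than in the agents is what lets the same loop serve for training, testing, or displaying data: only the agents plugged into the world change.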

The first release of the toolbox contains implemented agents such as simple IR baselines, as well as two full neural network examples: an end-to-end memory network implemented in Lua Torch, and DrQA, an attentive LSTM model implemented in PyTorch that has strong results on the SQuAD data set, among others. We look forward to adding new tasks and agents in future releases.

Mechanical Turk

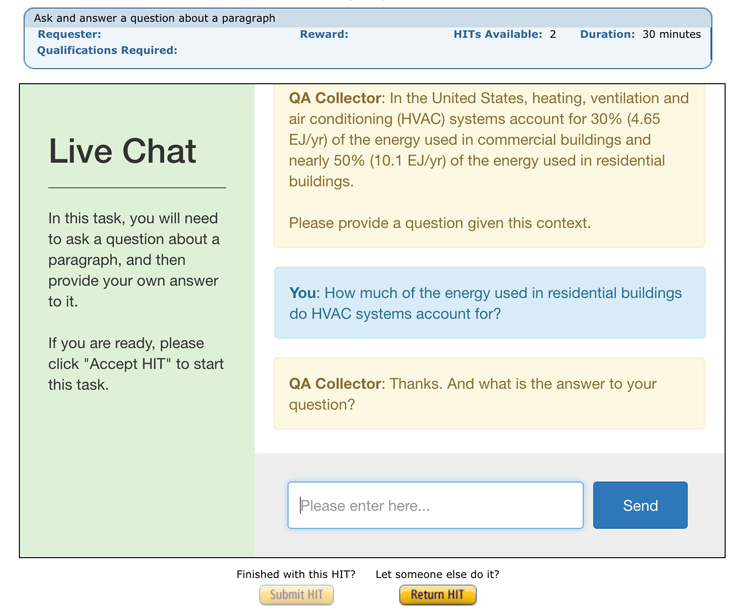

Dialog with humans is an important part of the training process for building chatbots. That’s why ParlAI supports integration with Mechanical Turk for data collection, training, and evaluation. This also allows research groups’ Turk experiments to be compared, which has been historically difficult.

Human Turkers are also viewed as agents in ParlAI. They can easily talk to the bots to help train and evaluate them. As a result, person-person, person-bot, or group chats among multiple people and bots can all take place within the standard framework, switching out the roles as desired, with no code changes to the agents. This is because Turkers also receive and send messages through a pretty-printed version of the same interface, using the fields of the observation/action dict.
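The idea of a person sitting behind the same interface can be sketched in a few lines. The two helpers below are hypothetical (they are not ParlAI's Mechanical Turk code, and the id key is an assumed field): one renders an incoming dict as readable text, and the other wraps whatever the person types back into a dict.

```python
def format_for_human(observation):
    """Render an observation/action dict as plain text for a person to read."""
    lines = []
    speaker = observation.get("id", "unknown")
    if "text" in observation:
        lines.append(f"[{speaker}]: {observation['text']}")
    if "reward" in observation:
        lines.append(f"[reward]: {observation['reward']}")
    return "\n".join(lines)


def human_act(typed_text, speaker="turker"):
    """Wrap a person's typed reply in the same dict format the bots use."""
    return {"id": speaker, "text": typed_text.strip()}


if __name__ == "__main__":
    incoming = {"id": "bot", "text": "Which movie would you like to see?"}
    print(format_for_human(incoming))
    print(human_act("  Something funny, please.\n"))
```

Since the person's reply ends up in the same dict format, the other participants in the conversation do not need to know whether they are talking to a human or a bot.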

We provide two examples in the first release:

- qa_collector: an agent that talks to Turkers to collect question-answer pairs given a context paragraph to build a QA data set.

- model_evaluator: an agent that collects ratings from Turkers on the performance of a bot on a given task.

ParlAI gives researchers, for the first time, a single place to collect all the significant dialog tasks. This not only allows much easier iteration on any of these tasks individually, but also makes it possible to train a bot on all of them (which should eventually lead to better bots) and to evaluate bots across all those skills. It is also a place for researchers to share their AI learning agents by checking their code into the repository. This allows researchers to reproduce each other’s results and to build on top of each other’s work, pushing advances in the field forward. Finally, the integration of Mechanical Turk means that humans can easily be put in the loop to talk to the bots to help train and evaluate them. In the end, dialog with humans is necessary to build chatbots that can talk to humans.

Solving dialog remains a long-term challenge for AI, and any progress toward that goal will likely have short-term benefits in terms of products that we can build today or the development of technologies that could be useful in other areas. ParlAI is a platform we hope will bring together the community of researchers working on AI agents that perform dialog and continue pushing the state of the art in dialog research.