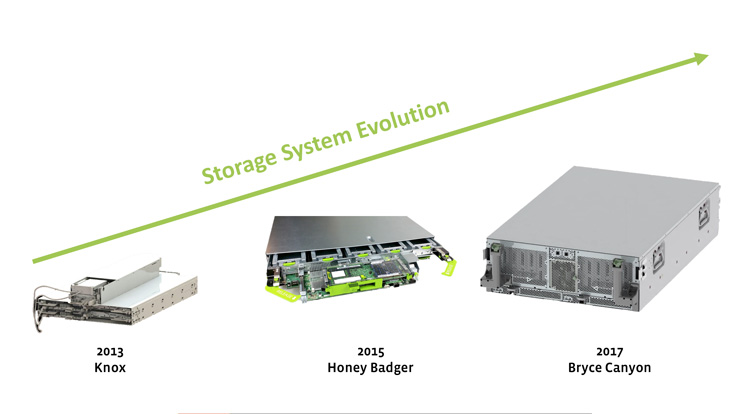

At Facebook, our storage needs are constantly evolving. To run our workloads more efficiently, we are always working to optimize our storage server designs. In 2013, with our design partner Wiwynn, we contributed our first storage enclosure, Open Vault (Knox), to the Open Compute Project. We then leveraged that design in 2015 to create our Honey Badger storage server, followed by our Lightning NVMe enclosure in 2016.

With a new focus on video experiences across our family of apps, our workloads increasingly require more storage capacity and density. We set out to design our next generation of storage with a focus on efficiency and performance, and today we are announcing that the design specification for our newest storage platform, Bryce Canyon, is available through the Open Compute Project.

Bryce Canyon will primarily be used for high-density storage, including photos and videos, and provides 20 percent higher hard disk drive (HDD) density than Open Vault. It is designed to support more powerful processors and more memory, and it improves thermal and power efficiency by drawing in air from underneath the chassis. Our goal was to build a platform that would not only meet our needs today but also scale to accommodate new modules for future growth. Compared with other storage platforms, Bryce Canyon delivers powerful disaggregated storage that is easy to scale.
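The 20 percent density figure can be checked with simple arithmetic. The sketch below assumes enclosure figures that this post does not state: Open Vault holding 30 drives in 2 OU, and Bryce Canyon holding its 72 drives in 4 OU. Density is measured as drives per Open Rack unit (OU).

```python
# Assumed enclosure figures (not stated in this post):
# Open Vault (Knox): 30 drives in 2 OU; Bryce Canyon: 72 drives in 4 OU.
OPEN_VAULT_DRIVES, OPEN_VAULT_OU = 30, 2
BRYCE_CANYON_DRIVES, BRYCE_CANYON_OU = 72, 4


def drives_per_ou(drives: int, ou: int) -> float:
    """Return HDD density as drives per Open Rack unit (OU)."""
    return drives / ou


open_vault = drives_per_ou(OPEN_VAULT_DRIVES, OPEN_VAULT_OU)        # 15 drives/OU
bryce_canyon = drives_per_ou(BRYCE_CANYON_DRIVES, BRYCE_CANYON_OU)  # 18 drives/OU
gain_pct = (bryce_canyon / open_vault - 1) * 100                    # relative density gain

print(f"{open_vault:.1f} vs {bryce_canyon:.1f} drives/OU: {gain_pct:.0f}% denser")
```

Under these assumptions the script prints `15.0 vs 18.0 drives/OU: 20% denser`, which matches the figure quoted above.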

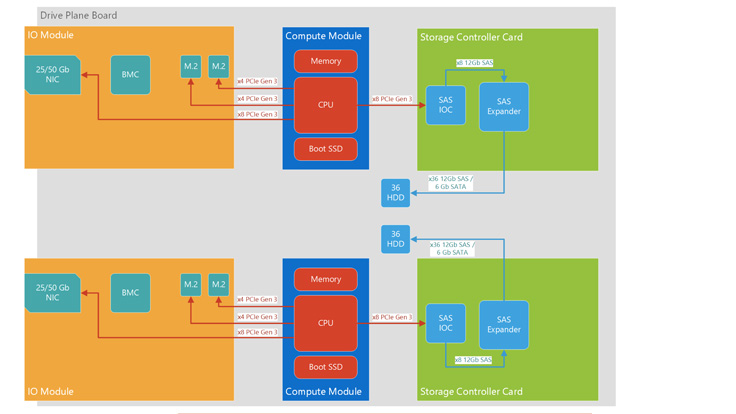

The Bryce Canyon storage system supports 72 3.5” hard drives (12 Gb SAS/6 Gb SATA). The system can be configured as a single 72-drive storage server, as dual 36-drive storage servers with fully independent power paths, or as a 36/72-drive JBOD. This flexibility simplifies and streamlines our data center operations by reducing the number of storage platform configurations we will need to support going forward.
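One way to picture these options is as a small table of layouts, each of which must account for all 72 drive bays. The `Mode`, `Layout`, and `validate` names below are hypothetical, and treating the 36-drive JBOD as two independent 36-drive groups is an assumption based on the "36/72-drive JBOD" wording.

```python
from dataclasses import dataclass
from enum import Enum

DRIVE_BAYS = 72  # 3.5" drive bays per Bryce Canyon chassis


@dataclass(frozen=True)
class Layout:
    cpu_modules: int        # Mono Lake modules installed (0 means JBOD, no local CPU)
    domains: int            # independent drive groups presented to hosts
    drives_per_domain: int  # drives in each group


class Mode(Enum):
    # Hypothetical names; the post describes these options only in prose.
    SINGLE_SERVER = Layout(cpu_modules=1, domains=1, drives_per_domain=72)
    DUAL_SERVER = Layout(cpu_modules=2, domains=2, drives_per_domain=36)
    JBOD_72 = Layout(cpu_modules=0, domains=1, drives_per_domain=72)
    JBOD_36 = Layout(cpu_modules=0, domains=2, drives_per_domain=36)  # assumed split


def validate(mode: Mode) -> Layout:
    """Check that a layout uses every bay and pairs each server with one CPU module."""
    layout = mode.value
    if layout.domains * layout.drives_per_domain != DRIVE_BAYS:
        raise ValueError(f"{mode.name} does not use all {DRIVE_BAYS} bays")
    if layout.cpu_modules not in (0, layout.domains):
        raise ValueError(f"{mode.name}: expected one CPU module per server")
    return layout


for mode in Mode:
    layout = validate(mode)
    role = "server" if layout.cpu_modules else "JBOD"
    print(f"{mode.name}: {layout.domains} x {layout.drives_per_domain}-drive {role}")
```

Encoding the options as data rather than branching logic makes the constraint explicit: every configuration is a different split of the same 72 bays.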

When configured as a storage server, Bryce Canyon supports single or dual Mono Lake CPU modules. We found that for certain workloads, such as web and storage, a single-socket architecture is more efficient and delivers higher performance per watt. We previously moved our web tier to this architecture, and Bryce Canyon uses the same Mono Lake building blocks.

Drive connectivity is provided by storage controller cards (SCCs), which in this iteration provide a 12 Gb SAS/6 Gb SATA interface. For front-end connectivity, an input/output module (IOM) hosts the OCP mezzanine NIC and supports both 25 Gb and 50 Gb NICs. There are currently two IOM variants: one with two four-lane M.2 modules and one with a 12 Gb SAS controller. By populating different modules, Bryce Canyon can be configured to optimize for various use cases, such as JBOD, Hadoop, and cold storage.

Bryce Canyon is fully Open Rack v2 compliant and leverages many building blocks from the OCP hardware offerings. The system is managed via OpenBMC, which provides a common management framework with most new hardware in Facebook data centers. The CPU module is a Mono Lake card, and supported NICs include the 25 Gb and 50 Gb OCP mezzanine NICs.

Modular and flexible platform

Thanks to the modular design, future generations of the platform can adopt next-generation CPU modules to increase performance as new technologies arrive. If other input/output interfaces are needed, a new I/O module can be designed to provide them. With 16 lanes of PCIe going to the IOM, plenty of bandwidth is available to support a variety of possibilities. The system is designed to be protocol agnostic, so if an NVMe system is desired, the storage controller card can be swapped out for a PCIe switch-based solution.
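To put "plenty of bandwidth" in rough numbers, the sketch below computes per-direction throughput for a 16-lane link. It assumes the IOM link runs at PCIe Gen 3 (the post states Gen 3 only for the M.2 slots), accounts for 128b/130b line encoding, and ignores packet-level protocol overhead. The result is compared with the nominal line rates of the 25 Gb and 50 Gb NICs.

```python
GEN3_TRANSFER_RATE = 8e9   # transfers per second per lane (8 GT/s), one bit per transfer
GEN3_ENCODING = 128 / 130  # 128b/130b line-encoding efficiency


def pcie_gen3_gbytes_per_s(lanes: int) -> float:
    """Per-direction PCIe Gen 3 bandwidth in GB/s after line encoding, before protocol overhead."""
    return lanes * GEN3_TRANSFER_RATE * GEN3_ENCODING / 8 / 1e9


def ethernet_gbytes_per_s(gbps: float) -> float:
    """Nominal Ethernet line rate converted from Gb/s to GB/s."""
    return gbps / 8


iom_link = pcie_gen3_gbytes_per_s(16)    # 16 lanes to the IOM (Gen 3 assumed)
m2_pair = pcie_gen3_gbytes_per_s(2 * 4)  # two four-lane M.2 slots

print(f"x16 IOM link: {iom_link:.2f} GB/s; two x4 M.2 slots: {m2_pair:.2f} GB/s")
for nic_gbps in (25, 50):
    nic = ethernet_gbytes_per_s(nic_gbps)
    print(f"{nic_gbps} Gb NIC: {nic:.3f} GB/s ({iom_link / nic:.1f}x headroom on x16)")
```

Under these assumptions a Gen 3 x16 link carries roughly 15.75 GB/s per direction, about 2.5 times the line rate of a 50 Gb NIC, which suggests headroom for I/O modules beyond the current NIC options.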

Flexibility to support different workloads

Performance-hungry workloads

For a high-power configuration, such as the one used for our disaggregated storage service, Bryce Canyon is populated with two Mono Lake CPU modules to create two separate 36-drive storage servers within the same chassis. The I/O module used in this configuration provides two M.2 slots, each with four lanes of PCIe Gen 3, in addition to the OCP mezzanine NIC. The M.2 slots can be used for caching or for coalescing small writes.

Balanced workloads

For storage systems needing less CPU performance per drive (due to large capacity and low read/write rates), a single Mono Lake can be used to connect to all 72 drives in the system.

Maximum capacity

For applications needing more than 72 drives' worth of storage — for example, an archival use case — you can daisy-chain multiple Bryce Canyon JBODs behind a Bryce Canyon head node or an external server.
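In a daisy-chained deployment, the total drive count grows linearly with the number of JBODs. The helpers below are a hypothetical illustration: they assume each Bryce Canyon JBOD contributes all 72 drives, and they let the head node contribute its own drives (72 for a single-server Bryce Canyon head node, 0 for an external server). Per-drive capacity is left as a parameter because the post does not specify drive sizes.

```python
def chained_drive_count(jbods: int, head_node_drives: int = 72, drives_per_jbod: int = 72) -> int:
    """Total drives reachable from one head node with `jbods` Bryce Canyon JBODs chained behind it."""
    if jbods < 0:
        raise ValueError("jbods must be non-negative")
    return head_node_drives + jbods * drives_per_jbod


def raw_capacity_tb(drives: int, tb_per_drive: float) -> float:
    """Raw (pre-redundancy) capacity in TB for a given per-drive size."""
    return drives * tb_per_drive


# Bryce Canyon head node (72 local drives) with two chained JBODs.
print(chained_drive_count(2))                         # 216
# External server (no local drives) with three chained JBODs.
print(chained_drive_count(3, head_node_drives=0))     # 216
# Raw capacity, assuming hypothetical 10 TB drives.
print(raw_capacity_tb(chained_drive_count(2), 10.0))  # 2160.0
```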

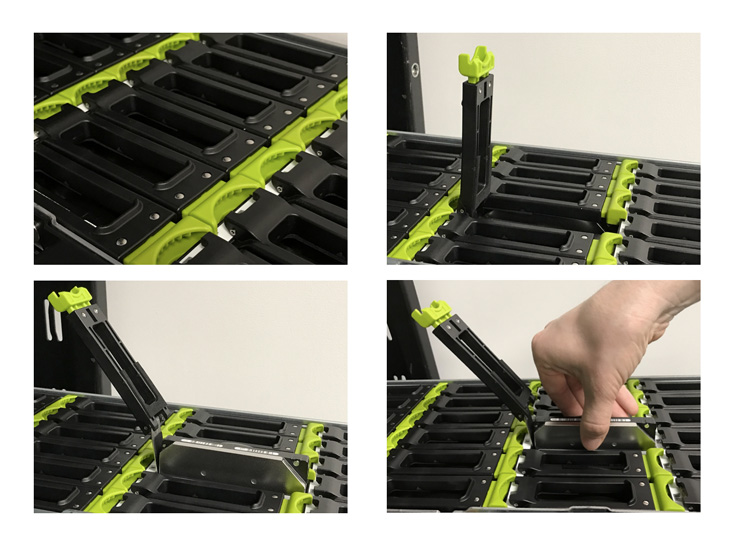

Designed for the data center — deployment @scale

The Bryce Canyon system is quick and simple to service thanks to its toolless design. Every major FRU in the system can be replaced without tools; each is secured with latches or thumbscrews. One of the most distinctive aspects of the design is the carrier-free, toolless drive retention system: A latch mechanism retains the bare drive, and on removal the latch assists the user by pulling the drive partially out of the system for easier handling. Because drives need no carriers, this system significantly simplifies deployment and maintenance.

The design specification for Bryce Canyon is publicly available through the Open Compute Project, and a comprehensive set of hardware design files will soon follow.